Hey mates, I was trying to build a speech recognition model in TensorFlow. I downloaded the TensorFlow Speech Recognition dataset from Kaggle and, using the YAMNet pre-trained model, I built spectrograms of the respective audio signals. The problem is that I want to save each spectrogram as an image file in my train and test directories, and then create an ImageDataGenerator to load the images into my model. Any ideas on how I can convert my spectrogram to an image file and store it in a directory?

Thanks.

Have you checked this:

Assuming you don’t mind using Librosa and matplotlib, which can be awesome for audio pipelines, maybe you could try what this user (a researcher) did in Ability to save Spectrogram as image · Issue #1313 · librosa/librosa · GitHub. First, it appears that they encode the audio waveforms as (mel) spectrograms before using them as inputs to the network, which can be done with Librosa, for example with librosa.feature.melspectrogram. Then, it looks like they use librosa.display.specshow and matplotlib.pyplot.savefig to display and save each figure.
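If you'd rather see the "compute, draw, save the figure" idea without librosa installed, here's a minimal sketch with NumPy and matplotlib only. It is not the code from the linked issue: `log_spectrogram` and `save_spectrogram_figure` are hypothetical helper names, the STFT settings and the 224 x 224 output size are arbitrary choices, and a plain log-magnitude STFT stands in for librosa.feature.melspectrogram.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")  # render straight to files, no display needed
import matplotlib.pyplot as plt


def log_spectrogram(signal, n_fft=512, hop=160):
    """Log-magnitude STFT in dB, shape (freq_bins, frames). Parameters are illustrative."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T  # (n_fft // 2 + 1, n_frames)
    return 20.0 * np.log10(magnitude + 1e-10)


def save_spectrogram_figure(spec_db, path, size_inches=(2, 2), dpi=112):
    """Draw the spectrogram and save it with no axes or margins (2 in x 112 dpi = 224 px)."""
    fig = plt.figure(figsize=size_inches, dpi=dpi)
    ax = fig.add_axes([0, 0, 1, 1])  # the axes fill the whole figure
    ax.imshow(spec_db, origin="lower", aspect="auto", cmap="magma")
    ax.set_axis_off()
    fig.savefig(path, dpi=dpi)
    plt.close(fig)  # release memory when saving thousands of files
```

For example, `save_spectrogram_figure(log_spectrogram(waveform), "clip.png")` writes a 224 x 224 PNG. With librosa you would swap the `imshow` call for `librosa.display.specshow(..., ax=ax)`; closing each figure matters when you loop over a whole dataset.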

Alternatively, check out these answers on StackOverflow: audio - Store the Spectrogram as Image in Python - Stack Overflow (with Librosa, matplotlib) and python - How can I save a Librosa spectrogram plot as a specific sized image? - Stack Overflow (with Librosa, skimage.io).
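The "specific sized image" idea from the second answer boils down to saving the array itself as pixels, so the image size is set by the spectrogram shape rather than by a figure size. A minimal sketch, assuming min-max scaling per clip is acceptable; it uses `matplotlib.pyplot.imsave` in place of `skimage.io.imsave` (a swap on my part), and `spectrogram_to_uint8`/`save_exact_size` are hypothetical names.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")
import matplotlib.pyplot as plt


def spectrogram_to_uint8(spec_db):
    """Min-max scale a (freq, time) spectrogram to 0..255, low frequencies at the bottom."""
    lo, hi = float(spec_db.min()), float(spec_db.max())
    if hi == lo:  # constant input: avoid dividing by zero
        return np.zeros(spec_db.shape, dtype=np.uint8)
    scaled = (spec_db - lo) / (hi - lo)        # now in [0, 1]
    img = np.round(scaled * 255.0).astype(np.uint8)
    return np.flipud(img)                      # image row 0 = highest frequency bin


def save_exact_size(spec_db, path):
    """Save one pixel per (frequency bin, frame): image height x width == spec_db.shape."""
    plt.imsave(path, spectrogram_to_uint8(spec_db), cmap="gray", vmin=0, vmax=255)
```

Because nothing is resampled, every clip of the same length produces an image of the same size, which keeps the later `target_size` in your data generator from stretching the spectrogram.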

Finally, there are a couple of proposals on Kaggle that you may find useful: Converting sounds into images: a general guide | Kaggle. The first one uses Librosa, while the second one uses the fastai library.
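For the workflow in the original question (one image per clip, written into train/test folders that an ImageDataGenerator can read), here's a minimal sketch. It assumes a Speech Commands-style layout with one sub-folder of 16-bit mono `.wav` files per label; `export_split`, the 80/20 random split, and skipping folders whose names start with `_` (such as `_background_noise_`) are hypothetical choices. Keras' `flow_from_directory` expects `root/<class_name>/<image files>`, so that's the layout it writes.

```python
import random
import wave
from pathlib import Path

import numpy as np
import matplotlib
matplotlib.use("Agg")  # write files without opening windows
import matplotlib.pyplot as plt


def read_wav_mono16(path):
    """Read a 16-bit PCM mono WAV file into float32 samples in [-1, 1)."""
    with wave.open(str(path), "rb") as wf:
        if wf.getsampwidth() != 2 or wf.getnchannels() != 1:
            raise ValueError(f"{path}: expected 16-bit mono PCM")
        raw = wf.readframes(wf.getnframes())
    return np.frombuffer(raw, dtype="<i2").astype(np.float32) / 32768.0


def log_spectrogram(signal, n_fft=512, hop=160):
    """Log-magnitude STFT in dB, shape (freq_bins, frames); illustrative parameters."""
    signal = np.pad(signal, (0, max(0, n_fft - len(signal))))  # guard very short clips
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return 20.0 * np.log10(np.abs(np.fft.rfft(frames, axis=1)).T + 1e-10)


def export_split(src_root, dst_root, test_fraction=0.2, seed=0):
    """Write dst_root/{train,test}/<label>/<clip>.png for every src_root/<label>/*.wav.

    Folders starting with "_" (e.g. _background_noise_) are skipped; that filter
    and the random per-label split are assumptions, not part of any official recipe.
    """
    rng = random.Random(seed)
    counts = {"train": 0, "test": 0}
    label_dirs = sorted(p for p in Path(src_root).iterdir()
                        if p.is_dir() and not p.name.startswith("_"))
    for label_dir in label_dirs:
        wavs = sorted(label_dir.glob("*.wav"))
        rng.shuffle(wavs)
        n_test = int(round(len(wavs) * test_fraction))
        for i, wav_path in enumerate(wavs):
            split = "test" if i < n_test else "train"
            out_dir = Path(dst_root) / split / label_dir.name
            out_dir.mkdir(parents=True, exist_ok=True)
            spec = log_spectrogram(read_wav_mono16(wav_path))
            # origin="lower" puts low frequencies at the bottom of the saved image
            plt.imsave(str(out_dir / f"{wav_path.stem}.png"), spec,
                       cmap="gray", origin="lower")
            counts[split] += 1
    return counts
```

After that, something along the lines of `ImageDataGenerator(rescale=1./255).flow_from_directory("out/train", color_mode="grayscale", target_size=(257, 97))` should give one class per label folder (check the Keras docs for the arguments your version supports). I believe the Kaggle download also ships `validation_list.txt` and `testing_list.txt`; using those instead of a random split should keep clips from the same speaker out of both train and test.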

Other potentially useful resources:

- SpecAugment, with the LibriSpeech dataset: Google AI Blog: SpecAugment: A New Data Augmentation Method for Automatic Speech Recognition.

- How to Extract Spectrograms from Audio with Python - YouTube

- GitHub - musikalkemist/DeepLearningForAudioWithPython: Code and slides for the "Deep Learning (For Audio) With Python" course on TheSoundOfAI Youtube channel.

- GitHub - musikalkemist/AudioSignalProcessingForML: Code and slides of my YouTube series called "Audio Signal Processing for Machine Learning"

- And more:

Also check out this simple audio tutorial:

Hi,

YAMNet is not the best choice for speech recognition, but there's a tutorial and a blog post about it.

For a more modern model to fine-tune, you can try wav2vec2, which was recently published on TF Hub: https://twitter.com/TensorFlow/status/1433520168605519875

It has a tutorial on how to use it.

+1. wav2vec 2.0 is one of the state-of-the-art models for modern ASR at the moment. You can find it on TF Hub: wav2vec2 and wav2vec2-960h.

However, do note that, as mentioned in the wav2vec 2.0 paper, the authors of the original experiment appear to have used raw audio as input rather than visual representations like (log-mel) spectrograms (which the Conformer-based experiment mentioned further below used during pre-training instead):

“In this paper, we present a framework for self-supervised learning of representations from raw audio data. Our approach encodes speech audio via a multi-layer convolutional neural network and then masks spans of the resulting latent speech representations [26, 56], similar to masked language modeling.”

It’s also mentioned in the wav2vec-U (unsupervised wav2vec for ASR) paper:

“Wav2vec 2.0 consists of a convolutional feature encoder f : X ↦ Z that maps a raw audio sequence X to latent speech representations z1, …, zT, which a Transformer g : Z ↦ C then turns into context representations c1, …, cT (Baevski et al., 2020b,a).”
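To make the "raw audio sequence X to z1, …, zT" step a bit more concrete: as far as I recall, the wav2vec 2.0 paper gives the feature encoder as seven temporal convolution blocks with strides (5, 2, 2, 2, 2, 2, 2) and kernel widths (10, 3, 3, 3, 3, 2, 2), i.e. about one latent vector every 20 ms of 16 kHz audio with a 400-sample (25 ms) receptive field. The small calculation below only checks that shape arithmetic (no padding assumed); it does not run any convolutions, so double-check the hyperparameters against the paper.

```python
# Kernel widths and strides of the wav2vec 2.0 feature encoder, as recalled from the paper.
KERNELS = (10, 3, 3, 3, 3, 2, 2)
STRIDES = (5, 2, 2, 2, 2, 2, 2)


def encoder_frames(num_samples, kernels=KERNELS, strides=STRIDES):
    """Output length after stacked 1-D convolutions without padding."""
    length = num_samples
    for k, s in zip(kernels, strides):
        length = (length - k) // s + 1
    return length


def total_stride(strides=STRIDES):
    """Input samples between consecutive output frames (product of strides)."""
    product = 1
    for s in strides:
        product *= s
    return product


def receptive_field(kernels=KERNELS, strides=STRIDES):
    """Input samples seen by one output frame: 1 + sum((k_i - 1) * product of earlier strides)."""
    field, jump = 1, 1
    for k, s in zip(kernels, strides):
        field += (k - 1) * jump
        jump *= s
    return field


sr = 16000
print(encoder_frames(sr))               # 49 latent frames for 1 s of audio
print(total_stride() * 1000 / sr)       # 20.0 ms between frames
print(receptive_field() * 1000 / sr)    # 25.0 ms receptive field
```

So even though wav2vec 2.0 takes raw samples, its first stage ends up with a frame rate and window size in the same ballpark as the 25 ms / 10 ms spectrogram frames used by the spectrogram-based models discussed below.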

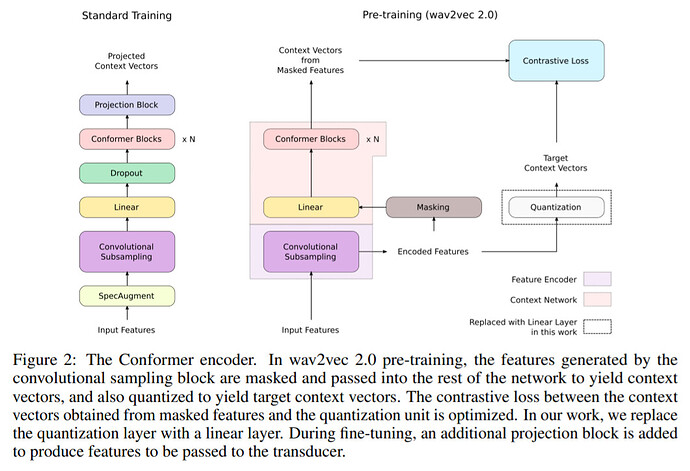

There’s another model called Conformer: Convolution-augmented Transformer for Speech Recognition (TF Hub has a TF Lite quantized version). In the paper, the authors describe the experiment’s data preprocessing step as follows:

“We evaluate the proposed model on the LibriSpeech [26] dataset… We extracted 80-channel filterbanks features computed from a 25ms window with a stride of 10ms. We use SpecAugment [27, 28] with mask parameter (F = 27), and ten time masks with maximum time-mask ratio (pS = 0.05), where the maximum-size of the time mask is set to pS times the length of the utterance.”

(where “SpecAugment applies an augmentation policy directly to the audio spectrogram” - source: https://ai.googleblog.com/2019/04/specaugment-new-data-augmentation.html.)
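The masking part of the setup quoted above can be sketched on a (time, frequency) log-mel array as follows. Assumptions, since the quote doesn't say: masked cells are set to zero, there are two frequency masks (the quote gives F = 27 but not how many frequency masks), and SpecAugment's time warping is left out. `spec_augment` is a hypothetical helper, not the authors' implementation.

```python
import numpy as np


def spec_augment(spec, rng, freq_mask_param=27, num_freq_masks=2,
                 num_time_masks=10, max_time_ratio=0.05):
    """SpecAugment-style frequency and time masking on a (time, freq) array.

    freq_mask_param is F; each time mask is at most max_time_ratio * num_frames
    wide (the adaptive pS setting). Masked cells are set to 0.0 (an assumption).
    """
    out = spec.copy()
    num_frames, num_bins = out.shape
    for _ in range(num_freq_masks):
        f = min(int(rng.integers(0, freq_mask_param + 1)), num_bins)  # width in [0, F]
        f0 = int(rng.integers(0, num_bins - f + 1))                   # start so the mask fits
        out[:, f0:f0 + f] = 0.0
    max_t = int(max_time_ratio * num_frames)                         # pS * utterance length
    for _ in range(num_time_masks):
        t = int(rng.integers(0, max_t + 1))                          # width in [0, pS * tau]
        t0 = int(rng.integers(0, num_frames - t + 1))
        out[t0:t0 + t, :] = 0.0
    return out
```

Usage: `augmented = spec_augment(log_mel_features, np.random.default_rng())`, applied on the fly to training examples only. Tying the time-mask width to the utterance length is what the "adaptive" in adaptive SpecAugment refers to.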

Another ASR/Conformer-related method: Pushing the Limits of Semi-Supervised Learning for Automatic Speech Recognition, which uses scaled-up versions of the network called Conformer XL and Conformer XXL, with up to 1 billion parameters:

“• Model: We use Conformers [5] (and slight variants thereof) as the model architecture.

• Pre-training: We use wav2vec 2.0 pre-training [6] to pre-train the encoders of the networks.

• Iterative Self-Training: We use noisy student training (NST) [7, 8] with adaptive SpecAugment [9, 10] as our iterative self-training pipeline.”

And this experiment appears to have used log-mel spectrograms:

“…We pre-train the Conformer encoder akin to wav2vec 2.0 pre-training [6] with utterances from the “unlab-60k” subset of Libri-Light [2]. Unlike in the original work which takes raw waveforms as input, we use log-mel spectrograms.”
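For the log-mel inputs mentioned here (and the "80-channel filterbanks features computed from a 25ms window with a stride of 10ms" in the Conformer quote earlier), a NumPy-only sketch: at an assumed 16 kHz sample rate, 25 ms is 400 samples and 10 ms is 160 samples. The 512-point FFT, the HTK-style mel formula, the Hann window and the log offset are my assumptions, not settings taken from either paper; librosa.feature.melspectrogram is the ready-made library route.

```python
import numpy as np


def hz_to_mel(hz):
    """HTK-style mel scale."""
    return 2595.0 * np.log10(1.0 + np.asarray(hz) / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (np.asarray(mel) / 2595.0) - 1.0)


def mel_filterbank(sr=16000, n_fft=512, n_mels=80, fmin=0.0, fmax=None):
    """Triangular mel filters, shape (n_mels, n_fft // 2 + 1)."""
    fmax = sr / 2 if fmax is None else fmax
    fft_freqs = np.linspace(0.0, sr / 2, n_fft // 2 + 1)
    # n_mels + 2 points evenly spaced on the mel scale: left edge, centres, right edge
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, len(fft_freqs)))
    for m in range(n_mels):
        left, centre, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (centre - left)
        falling = (right - fft_freqs) / (right - centre)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel(signal, sr=16000, win_ms=25, hop_ms=10, n_fft=512, n_mels=80):
    """Log-mel features, shape (frames, n_mels)."""
    win = int(sr * win_ms / 1000)   # 400 samples at 16 kHz
    hop = int(sr * hop_ms / 1000)   # 160 samples at 16 kHz
    window = np.hanning(win)
    n_frames = 1 + (len(signal) - win) // hop
    frames = np.stack([signal[i * hop:i * hop + win] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2  # zero-pads 400 -> 512
    return np.log(power @ mel_filterbank(sr, n_fft, n_mels).T + 1e-6)
```

One second of audio gives 98 frames of 80 values, i.e. a 98 x 80 "image" per second, which is the kind of input these papers feed to the Conformer encoder instead of raw samples.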

Another interesting post about the Conformer:

Thanks a ton, community, for your insightful ideas. I will surely look into each of the replies and improve my understanding as much as possible. Thanks.

Hey community. I realized I want to build a custom keyword detection system: a system that understands single words like "stop", "on", "off", etc. I used the speech dataset, converted the clips to spectrograms and passed them through a network of Conv1D and LSTM layers, but my model fails to reach good accuracy. Can anyone suggest an article or blog where I can learn about working with spectrograms? Thanks.

Try this tutorial: Simple audio recognition: Recognizing keywords | TensorFlow Core

Hey @lgusm, I tried the above article, but my model was not showing good results. I tried making some changes, but they didn't help. Could you please recommend something different?

Why are you using an LSTM for keyword detection?

I read an article that explained how to convert audio samples to spectrograms and pass them through Conv1D and LSTM layers. I'm confused about the ideal approach for solving this problem. I tried to read the TensorFlow tutorials, but they are quite TensorFlow-centric and didn't give me good results. Could we have something that uses other Python libraries? Thanks.

There’s a difference between speech recognition and keyword recognition.

When we talk about speech, we expect longer sentences, and that’s why someone might think about an LSTM, as there’s a sequence that needs to be taken into account.

For keyword detection, since it’s a short sound (a second or two), there’s no need for an LSTM: you can just compute the spectrogram and use an image classifier on it (as shown in the tutorial I posted earlier; this is also the idea behind YAMNet, mentioned in the first post).
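The reason an image classifier works here is that every keyword clip becomes an array of the same shape. A NumPy sketch of that preprocessing, mirroring what I recall of the simple audio tutorial (pad or trim to 16,000 samples, then an STFT with frame_length=255 and frame_step=128 and a 256-point FFT); those numbers are assumptions, so compare them with the tutorial before relying on them. `fix_length` and `keyword_spectrogram` are hypothetical names.

```python
import numpy as np

CLIP_SAMPLES = 16000   # 1 s at 16 kHz
FRAME_LENGTH = 255
FRAME_STEP = 128
FFT_LENGTH = 256       # smallest power of two >= FRAME_LENGTH


def fix_length(waveform, n=CLIP_SAMPLES):
    """Trim or zero-pad a clip so every example has exactly n samples."""
    waveform = waveform[:n]
    return np.pad(waveform, (0, n - len(waveform)))


def keyword_spectrogram(waveform):
    """Magnitude STFT of a fixed-length clip, shape (frames, freq_bins, 1)."""
    x = fix_length(np.asarray(waveform, dtype=np.float32))
    # periodic Hann window, the kind tf.signal.hann_window produces by default
    n = np.arange(FRAME_LENGTH)
    window = 0.5 - 0.5 * np.cos(2 * np.pi * n / FRAME_LENGTH)
    n_frames = 1 + (len(x) - FRAME_LENGTH) // FRAME_STEP
    frames = np.stack([x[i * FRAME_STEP:i * FRAME_STEP + FRAME_LENGTH] * window
                       for i in range(n_frames)])
    spec = np.abs(np.fft.rfft(frames, n=FFT_LENGTH, axis=1))
    return spec[..., np.newaxis]  # add a channel axis, like a grayscale image
```

Whether a clip is 0.75 s or 1.25 s long, the result is a 124 x 129 x 1 array, so it can go straight into a small Conv2D stack; there is no variable-length sequence left for an LSTM to handle.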

For speech, maybe this tutorial can help you: Google Colab

Hey @lgusm, thanks for your detailed reply. I was quite confused between the two in terms of literature and implementation. I will surely look at the keyword recognition example above. Thanks.

I’d start here. AFAIK, librosa is the Python library of choice for audio processing.

The GitHub repos should have accompanying lessons on YouTube. Hope this helps.