Hi!

I have trained my first custom object detection model (2 classes, 500 training and 100 test images) and have some questions.

Nothing has been pretrained. I'm running `efficientdet_d0_coco17_tpu-32` on my own images, which I labeled with LabelImg.

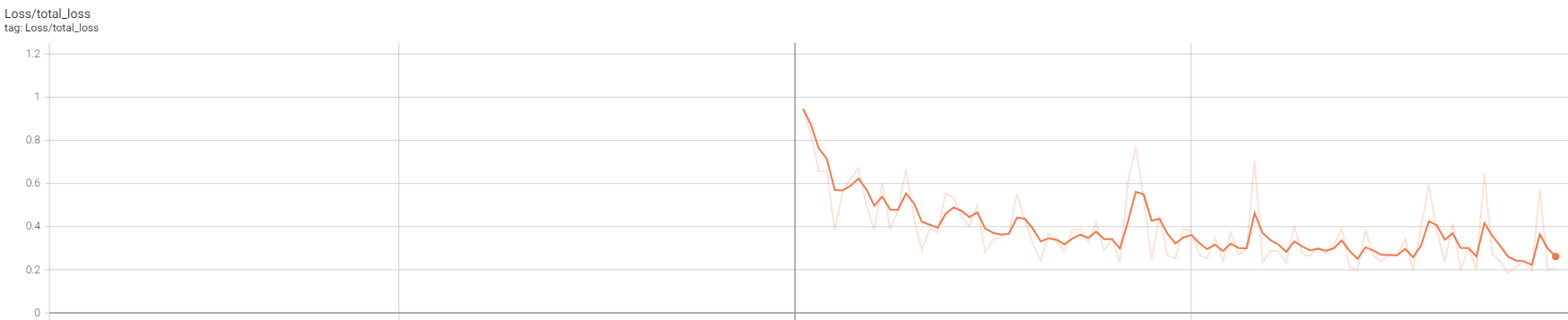

The initial total loss is already low at the first step (it starts at 1.5).

The total loss is down to 0.2 after just 2000 steps, which surprised me. If I keep training past that point, the loss goes up again.
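One thing that may be worth checking when the loss climbs again around step 2000: as far as I know, the EfficientDet configs use a cosine-decay learning rate with a linear warmup (the `cosine_decay_learning_rate` block in pipeline.config), so the learning rate may still be rising at that point. The sketch below approximates that kind of schedule so you can see the learning rate at a given step. The function is written for illustration only, and the parameter values are placeholders; copy the real ones from your own pipeline.config.

```python
import math


def cosine_decay_with_warmup(step, learning_rate_base, total_steps,
                             warmup_learning_rate, warmup_steps):
    """Approximate learning rate at `step`: linear warmup, then cosine decay to 0."""
    if step >= total_steps:
        return 0.0
    if step < warmup_steps:
        # Linear ramp from warmup_learning_rate up to learning_rate_base.
        slope = (learning_rate_base - warmup_learning_rate) / warmup_steps
        return warmup_learning_rate + slope * step
    # Cosine decay from learning_rate_base down to 0 over the remaining steps.
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return 0.5 * learning_rate_base * (1 + math.cos(math.pi * progress))


# Placeholder values (assumed); replace them with the ones in your pipeline.config.
params = dict(learning_rate_base=0.08, total_steps=300_000,
              warmup_learning_rate=0.001, warmup_steps=2500)

for step in (0, 1000, 2000, 2500, 10_000, 150_000):
    print(step, round(cosine_decay_with_warmup(step, **params), 5))
```

If the loss starts rising while the learning rate is still ramping up toward its peak, a smaller `learning_rate_base` is one thing to experiment with.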

The detection scores are low when I run predictions.
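To see how low the scores actually are, it can help to look at the raw outputs rather than only the boxes drawn above a fixed threshold. Models exported with the Object Detection API return `detection_boxes` (normalized `[ymin, xmin, ymax, xmax]`), `detection_scores` and `detection_classes`. The sketch below assumes those outputs for one image have already been converted to plain Python lists; `filter_detections`, the 0.3 threshold, and the sample values are hypothetical.

```python
def filter_detections(boxes, scores, classes, image_width, image_height,
                      min_score=0.3):
    """Keep detections scoring at least min_score, with boxes in pixel coordinates.

    boxes are normalized [ymin, xmin, ymax, xmax] values in the range 0..1.
    Returns (class_id, score, (left, top, right, bottom)) tuples, best first.
    """
    results = []
    for box, score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue
        ymin, xmin, ymax, xmax = box
        pixel_box = (xmin * image_width, ymin * image_height,
                     xmax * image_width, ymax * image_height)
        results.append((int(cls), score, pixel_box))
    # Highest-scoring detections first.
    results.sort(key=lambda r: r[1], reverse=True)
    return results


# Hypothetical outputs for a single 640x480 image.
boxes = [[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 0.1, 0.1], [0.5, 0.5, 0.9, 0.9]]
scores = [0.45, 0.05, 0.81]
classes = [1.0, 2.0, 2.0]

for det in filter_detections(boxes, scores, classes, 640, 480):
    print(det)
```

Temporarily lowering `min_score` shows whether the model finds the right objects with low confidence or misses them entirely, which point to different problems.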

Should the train/test images be resized to a single size, or should they stay at their original size?
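For context on the resizing question: as far as I know, the EfficientDet configs resize inputs inside the model using a `keep_aspect_ratio_resizer` (for D0, a 512-pixel target with padding), so images of varying sizes are generally accepted. What matters more is how large the objects end up after that resize, especially small ones like license plates. The sketch below approximates such a resize; the function name, the 512 defaults, and the photo and plate sizes are assumptions, so check the `image_resizer` block in your own pipeline.config.

```python
def keep_aspect_ratio_size(width, height, min_dimension=512, max_dimension=512):
    """Approximate output size of a keep-aspect-ratio resize (before any padding).

    Scale the short side to min_dimension; if that makes the long side
    exceed max_dimension, scale the long side to max_dimension instead.
    """
    small, large = min(width, height), max(width, height)
    scale = min_dimension / small
    if large * scale > max_dimension:
        scale = max_dimension / large
    return round(width * scale), round(height * scale)


# Hypothetical example: a 4032x3024 phone photo containing a 200-px-wide plate.
w, h = keep_aspect_ratio_size(4032, 3024)
print(w, h)  # 512 384
print(round(200 * w / 4032, 1))  # 25.4 (plate width in pixels after resizing)
```

In this example a plate that spans 200 pixels in the original photo is only about 25 pixels wide by the time the model sees it.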

Shouldn't the initial total loss go up when I retrain with new train/test images? I started from the last checkpoint with the old images removed, and right now the loss simply continues from its last value (0.2).
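This may be expected behaviour: as far as I know, `model_main_tf2` restores the latest checkpoint it finds in `--model_dir`, including the step counter, so training resumes with the weights that already reached 0.2. TensorFlow reads the latest checkpoint from the `checkpoint` state file (see `tf.train.latest_checkpoint`); the filename-based helper below is only a hypothetical illustration of "newest `ckpt-N` wins".

```python
import re


def latest_checkpoint_prefix(filenames):
    """Return the 'ckpt-N' prefix with the highest N, or None if there is none.

    Hypothetical helper: looks at files such as 'ckpt-3.index' and
    'ckpt-3.data-00000-of-00001' and picks the highest step number.
    """
    best_step, best_prefix = -1, None
    for name in filenames:
        match = re.fullmatch(r"(ckpt-(\d+))\.index", name)
        if match and int(match.group(2)) > best_step:
            best_step, best_prefix = int(match.group(2)), match.group(1)
    return best_prefix


files = ["checkpoint", "ckpt-1.index", "ckpt-1.data-00000-of-00001",
         "ckpt-3.index", "ckpt-3.data-00000-of-00001", "ckpt-2.index"]
print(latest_checkpoint_prefix(files))  # ckpt-3
```

To start over rather than continue, pointing `--model_dir` at a new, empty directory avoids picking up the old checkpoints.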

My goal is to detect vehicles and license plates. Are 500 images enough for this?
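Whether 500 images is enough depends largely on how many labeled boxes each class gets and how much variety (angles, lighting, distances) they cover. LabelImg saves Pascal VOC XML by default, so a quick per-class count is easy to get. A minimal sketch using `xml.etree`; the class names and sample annotation are made up, and in practice you would read the `.xml` files from your annotation folder.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def count_objects(xml_strings):
    """Count labeled boxes per class across Pascal VOC annotation documents."""
    counts = Counter()
    for text in xml_strings:
        root = ET.fromstring(text)
        for obj in root.iter("object"):
            counts[obj.findtext("name")] += 1
    return counts


# Hypothetical annotation with one box per class.
sample = """<annotation>
  <filename>car_001.jpg</filename>
  <object><name>vehicle</name><bndbox><xmin>10</xmin><ymin>20</ymin><xmax>300</xmax><ymax>200</ymax></bndbox></object>
  <object><name>license_plate</name><bndbox><xmin>120</xmin><ymin>150</ymin><xmax>180</xmax><ymax>170</ymax></bndbox></object>
</annotation>"""

# In practice (folder name assumed):
# xml_strings = [p.read_text() for p in pathlib.Path("annotations").glob("*.xml")]
print(count_objects([sample, sample]))  # Counter({'vehicle': 2, 'license_plate': 2})
```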

I'm using my model in React Native (Expo EAS). So far I have done the following:

- Cloned the TensorFlow models repository (github/tensorflow/models)
- Set up my configuration files (using `efficientdet_d0_coco17_tpu-32`)
- Trained the model until the loss stopped decreasing
- Exported it using `exporter_main_v2`
- Converted the SavedModel to a TensorFlow.js model (github.com/tensorflow/tfjs-converter/blob/0.8.x/README.json.md)
- Loaded the model using `bundleResourceIO` from `@tensorflow/tfjs-react-native` (npm)

Is this “the correct way” to use a custom object detection model in React Native, or should I be using TFLite instead?

Really appreciate the help!