I have no problem creating my own TensorFlow Serving image in Docker with one model, but when I try to build a serving image with multiple models, it doesn't work.

Here are the commands I used:

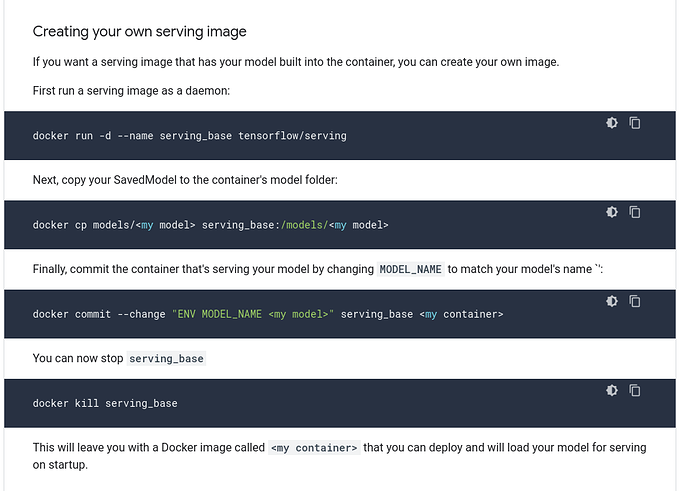

Create Serving Image

docker run -d --name serving_base tensorflow/serving

Copy the SavedModels and the model config

docker cp /home/model1 serving_base:/models/model1

docker cp /home/model2 serving_base:/models/model2
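TensorFlow Serving expects each model's base path to contain one or more numeric version subdirectories (for example `/home/model1/1/saved_model.pb`). A SavedModel copied without that version level is a common reason a model never loads. Below is a small sketch of a check to run on the host before the `docker cp` steps. `check_model_dir` is a hypothetical helper, not part of Docker or TensorFlow Serving:

```shell
# Hypothetical helper: succeeds if the given model directory contains at least one
# numeric version subdirectory holding saved_model.pb (or saved_model.pbtxt).
check_model_dir() {
  local dir="$1" v name
  for v in "$dir"/*/; do
    v="${v%/}"          # strip trailing slash
    name="${v##*/}"     # basename of the subdirectory
    case "$name" in
      ''|*[!0-9]*) continue ;;  # skip non-numeric entries such as "variables" or an unmatched glob
    esac
    if [ -f "$v/saved_model.pb" ] || [ -f "$v/saved_model.pbtxt" ]; then
      return 0
    fi
  done
  echo "no numeric version directory with a SavedModel under $dir" >&2
  return 1
}
```

For example, `check_model_dir /home/model1 && check_model_dir /home/model2` should succeed silently if both directories are laid out as expected.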

docker cp /home/model.config serving_base:/models/model.config
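The post does not show the contents of `model.config`. For reference, a multi-model config in TensorFlow Serving's `model_config_list` text format might look like the following. The model names `model1`/`model2` and the base paths are assumptions that mirror the `docker cp` targets above, and the paths are the ones inside the container. This heredoc writes to `./model.config` rather than `/home/model.config`:

```shell
# Hypothetical model.config: names and container paths are assumed from the docker cp targets.
cat > model.config <<'EOF'
model_config_list {
  config {
    name: 'model1'
    base_path: '/models/model1'
    model_platform: 'tensorflow'
  }
  config {
    name: 'model2'
    base_path: '/models/model2'
    model_platform: 'tensorflow'
  }
}
EOF
```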

Commit

docker commit serving_base acvision
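As far as I can tell, a plain `docker commit` keeps the `tensorflow/serving` image's default startup command. That command serves a single model named by the `MODEL_NAME` environment variable (default `model`, i.e. `/models/model`) and never reads `/models/model.config`. The following is a hedged sketch of a commit that bakes the `--model_config_file` flag of `tensorflow_model_server` into the image's `CMD`. It assumes the image's entrypoint script forwards extra arguments to the server, and it has not been verified against this exact setup:

```shell
# Variant of the commit step (an assumption, not verified against the poster's setup):
# set CMD so the entrypoint passes --model_config_file to tensorflow_model_server,
# which then serves every model listed in /models/model.config.
docker commit \
  --change 'CMD ["--model_config_file=/models/model.config"]' \
  serving_base acvision
```

Alternatively, the same flag can be appended after the image name at run time, e.g. `docker run ... -d acvision --model_config_file=/models/model.config`.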

Stop Serving Base

docker kill serving_base

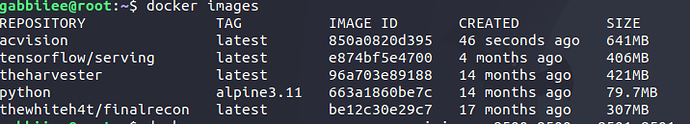

Checking the Docker image

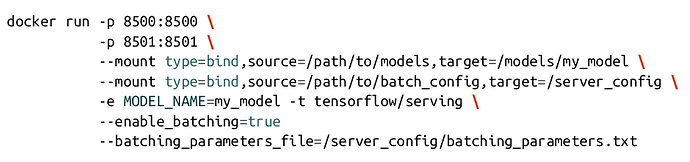

docker run --rm --name serve_acvision -p8500:8500 -p8501:8501 -d acvision

Checking whether the first model is working
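One way to check each model is TensorFlow Serving's REST model-status endpoint (`GET /v1/models/<name>`). This assumes the container above is running and that the models are named `model1` and `model2` in `model.config`. A model that loaded correctly should list a version whose `state` is `AVAILABLE`:

```shell
# Query the REST model-status endpoint on port 8501 for each (assumed) model name.
for m in model1 model2; do
  curl -s "http://localhost:8501/v1/models/$m"
  echo  # newline between the two JSON responses
done
```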
