Hi there!

I am trying to learn TensorFlow and use it for signal preprocessing and object detection, i.e. to train and use neural networks from Python.

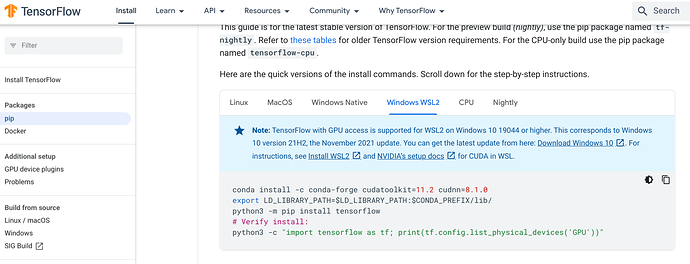

I am using Windows 11 with WSL 2 and Ubuntu:

```
$ uname -m && cat /etc/*release
x86_64
DISTRIB_ID=Ubuntu
DISTRIB_RELEASE=22.04
DISTRIB_CODENAME=jammy
DISTRIB_DESCRIPTION="Ubuntu 22.04.1 LTS"
PRETTY_NAME="Ubuntu 22.04.1 LTS"
NAME="Ubuntu"
VERSION_ID="22.04"
VERSION="22.04.1 LTS (Jammy Jellyfish)"
VERSION_CODENAME=jammy
ID=ubuntu
ID_LIKE=debian
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
UBUNTU_CODENAME=jammy
```
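The same details can also be collected from Python, together with the kernel release string, which is handy when reporting a WSL setup. This is only a minimal sketch, assuming the standard `/etc/os-release` file exists; `parse_os_release` and `is_wsl` are hypothetical helper names, and the WSL check is a heuristic that assumes WSL kernels contain "microsoft" in their release string.

```python
import platform
from pathlib import Path
from typing import Dict


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines (as in /etc/os-release) into a dict, dropping quotes."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info


def is_wsl(kernel_release: str) -> bool:
    """Heuristic (assumption): WSL kernels mention 'microsoft' in their release."""
    return "microsoft" in kernel_release.lower()


if __name__ == "__main__":
    os_release = Path("/etc/os-release")
    info = parse_os_release(os_release.read_text()) if os_release.exists() else {}
    print("Machine:", platform.machine())
    print("OS:", info.get("PRETTY_NAME", "unknown"))
    print("Kernel:", platform.release())
    print("Running under WSL (heuristic):", is_wsl(platform.release()))
    print("Python:", platform.python_version())
```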

The first step was installing TensorFlow. I did it with `pip install`, and it only partly succeeded. I followed the CUDA Installation Guide for Linux.
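Because each TensorFlow release is built against specific CUDA and cuDNN versions, it helps to report exactly which TensorFlow version pip installed. A minimal sketch using the standard-library `importlib.metadata`; `installed_version` is a hypothetical helper name.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a pip package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "tensorflow" is the PyPI distribution name used with `pip install tensorflow`.
    print("tensorflow:", installed_version("tensorflow"))
```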

However, when I run `lspci` to list all devices, I can’t see any NVIDIA device:

```
$ lspci
1e5b:00:00.0 3D controller: Microsoft Corporation Basic Render Driver
34c3:00:00.0 System peripheral: Red Hat, Inc. Virtio file system (rev 01)
4034:00:00.0 SCSI storage controller: Red Hat, Inc. Virtio filesystem (rev 01)
54e8:00:00.0 3D controller: Microsoft Corporation Basic Render Driver
6691:00:00.0 SCSI storage controller: Red Hat, Inc. Virtio filesystem (rev 01)
8cad:00:00.0 SCSI storage controller: Red Hat, Inc. Virtio filesystem (rev 01)
fe48:00:00.0 SCSI storage controller: Red Hat, Inc. Virtio console (rev 01)
```
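The same check can be scripted by filtering the `lspci` output for NVIDIA entries. A minimal sketch; `nvidia_entries` is a hypothetical helper name, and it simply does a case-insensitive text match. On the listing above it returns an empty list, since every entry is a Microsoft or Red Hat virtual device.

```python
import subprocess
from typing import List


def nvidia_entries(lspci_output: str) -> List[str]:
    """Return the lspci lines that mention NVIDIA (case-insensitive match)."""
    return [line for line in lspci_output.splitlines() if "nvidia" in line.lower()]


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["lspci"], capture_output=True, text=True, check=True
        ).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        # lspci may be missing or may fail; report it instead of crashing.
        print("could not run lspci:", exc)
    else:
        print(nvidia_entries(out) or "no NVIDIA entries in lspci output")
```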

When I ran a simple TensorFlow test, it reported that some of the libraries TensorFlow needs for CUDA/GPU support are missing. The script:

```python
import tensorflow as tf
print(tf.reduce_sum(tf.random.normal([1000, 1000])))  # check that TensorFlow works at all
print(tf.config.list_physical_devices('GPU'))  # list the GPUs TensorFlow can see
```
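TensorFlow loads its GPU libraries at runtime with `dlopen`, which is what the `dso_loader.cc` warnings in the log below report. A quick way to see which of them the dynamic loader can find is to try loading them with `ctypes.CDLL`, which uses `dlopen` on Linux. This is a sketch under assumptions: `libnvinfer.so.7` and `libnvinfer_plugin.so.7` come from the log, while the other sonames assume a TensorFlow build against CUDA 11.x and cuDNN 8; `probe_libraries` is a hypothetical helper name.

```python
import ctypes
from typing import Dict, Iterable

# Sonames to probe. The two libnvinfer entries come from the TensorFlow log;
# the others are assumptions for a CUDA 11.x / cuDNN 8 build.
CANDIDATES = (
    "libcuda.so.1",
    "libcudart.so.11.0",
    "libcudnn.so.8",
    "libnvinfer.so.7",
    "libnvinfer_plugin.so.7",
)


def probe_libraries(names: Iterable[str]) -> Dict[str, bool]:
    """Try to load each shared library by soname; True if the loader finds it."""
    results = {}
    for name in names:
        try:
            ctypes.CDLL(name)  # dlopen() under the hood on Linux
            results[name] = True
        except OSError:
            results[name] = False
    return results


if __name__ == "__main__":
    for name, ok in probe_libraries(CANDIDATES).items():
        print(f"{name:28s} {'found' if ok else 'MISSING'}")
```

A library reported as missing here can still exist on disk; it only means the loader did not find it on its search path (for example `LD_LIBRARY_PATH` or the `ldconfig` cache).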

See the output:

```
2022-12-25 20:28:49.080528: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX_VNNI FMA
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
2022-12-25 20:28:49.429094: I tensorflow/core/util/port.cc:104] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.
2022-12-25 20:28:50.386852: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer.so.7'; dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory
2022-12-25 20:28:50.387255: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer_plugin.so.7'; dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory
2022-12-25 20:28:50.387342: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.
WARNING:root:Limited tf.compat.v2.summary API due to missing TensorBoard installation.
WARNING:root:Limited tf.compat.v2.summary API due to missing TensorBoard installation.
WARNING:root:Limited tf.compat.v2.summary API due to missing TensorBoard installation.
WARNING:root:Limited tf.summary API due to missing TensorBoard installation.
WARNING:root:Limited tf.compat.v2.summary API due to missing TensorBoard installation.
WARNING:root:Limited tf.compat.v2.summary API due to missing TensorBoard installation.
WARNING:root:Limited tf.compat.v2.summary API due to missing TensorBoard installation.
2022-12-25 20:28:52.964517: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:967] could not open file to read NUMA node: /sys/bus/pci/devices/0000:01:00.0/numa_node
Your kernel may have been built without NUMA support.
2022-12-25 20:28:53.297342: W tensorflow/core/common_runtime/gpu/gpu_device.cc:1934] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform.
Skipping registering GPU devices...
tf.Tensor(-43.878326, shape=(), dtype=float32)
[]
```
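To pull the names of the libraries TensorFlow failed to load out of a long log, a small regex over the `Could not load dynamic library '…'` warnings is enough. A minimal sketch; `missing_libraries` is a hypothetical helper name, and it only sees libraries that TensorFlow names explicitly in such warnings. For the log above it returns `['libnvinfer.so.7', 'libnvinfer_plugin.so.7']`.

```python
import re
import sys
from pathlib import Path
from typing import List

# Matches the dso_loader warning, e.g.
# "Could not load dynamic library 'libnvinfer.so.7'; dlerror: ..."
_MISSING_LIB = re.compile(r"Could not load dynamic library '([^']+)'")


def missing_libraries(log_text: str) -> List[str]:
    """Return the unique library names TensorFlow failed to load, in log order."""
    seen: List[str] = []
    for name in _MISSING_LIB.findall(log_text):
        if name not in seen:
            seen.append(name)
    return seen


if __name__ == "__main__":
    # Usage: python3 test.py 2> tf.log && python3 this_script.py tf.log
    if len(sys.argv) > 1:
        print(missing_libraries(Path(sys.argv[1]).read_text()))
```

TensorFlow writes these log lines to stderr, hence the `2>` redirect in the usage comment.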

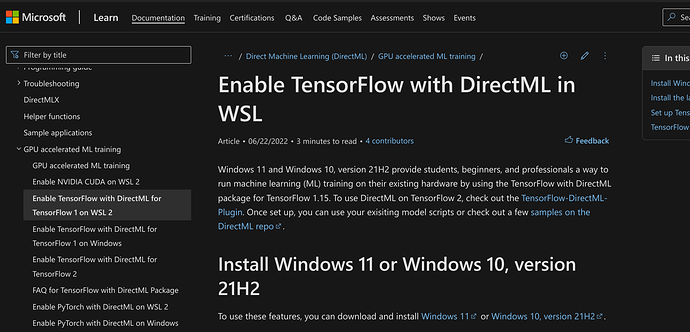

How can I get TensorFlow to use the GPU I have under WSL?

I can provide any additional information that is needed, but I am not sure what is relevant.