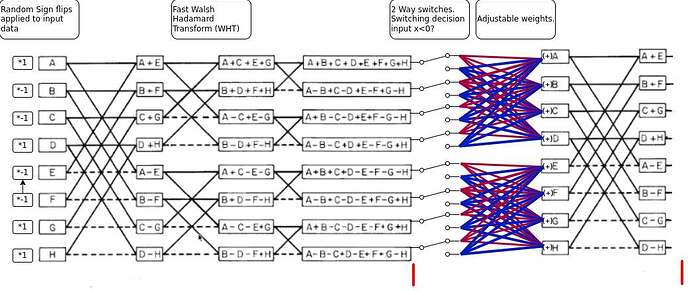

Most fast transforms are one-to-all: a change in one input causes some change in every output. That property, among others, makes them good for combining things.

You could use them to combine many narrow neural layers into one wide layer.
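Here is a minimal sketch of that idea. It assumes the fast transform is an unnormalized fast Walsh-Hadamard transform, which is my choice for illustration; other one-to-all transforms could be swapped in. The names `fwht`, `small_layer` and `combined_layer` are hypothetical, and the sketch is purely linear, with no activation function.

```python
import random

def fwht(values):
    """Unnormalized fast Walsh-Hadamard transform (length must be a power of two)."""
    out = list(values)
    n = len(out)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = out[i], out[i + h]
                out[i], out[i + h] = a + b, a - b  # butterfly: sum and difference
        h *= 2
    return out

def small_layer(weights, x):
    """Dense width-k layer: weights is k rows of k numbers, x has length k."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def combined_layer(blocks, x):
    """Run each small layer on its own slice of x, then mix everything with the transform."""
    k = len(blocks[0])
    pieces = []
    for j, weights in enumerate(blocks):
        pieces.extend(small_layer(weights, x[j * k:(j + 1) * k]))
    return fwht(pieces)

# One-to-all check on the transform alone: a single nonzero input
# produces an output where every entry is +1 or -1, so it reached all 8 outputs.
spread = fwht([0, 0, 0, 1, 0, 0, 0, 0])

# Four width-4 layers with random weights combined into one width-16 layer.
random.seed(0)
k, count = 4, 4
blocks = [[[random.uniform(-1, 1) for _ in range(k)] for _ in range(k)]
          for _ in range(count)]
x = [random.uniform(-1, 1) for _ in range(k * count)]
y = combined_layer(blocks, x)
```

Each small layer only sees its own 4 inputs, but after the transform every output of the wide layer depends on every small layer.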

A dense layer of width 65536 needs 65536 × 65536 = 4,294,967,296 fused multiply-adds to compute.

By contrast, 16384 layers of width 4 need only 16384 × 16 = 262,144 fused multiply-adds and can be combined into a layer of width 65536. Of course there is also the combining cost of around n·log2(n), which is 65536 × 16 = 1,048,576 operations.
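The arithmetic can be checked in a few lines. The variable names are mine, and adding the two costs together treats a transform operation and a fused multiply-add as comparable, which is a simplification.

```python
import math

n = 65536            # width of the wide layer
small_width = 4      # width of each small layer

dense_ops = n * n                                   # one dense width-n layer
num_small = n // small_width                        # 16384 small layers
small_ops = num_small * small_width * small_width   # 16 multiply-adds per small layer
combine_ops = n * int(math.log2(n))                 # n * log2(n) for the fast transform
total_ops = small_ops + combine_ops

print(dense_ops)              # 4294967296
print(small_ops)              # 262144
print(combine_ops)            # 1048576
print(total_ops)              # 1310720
print(dense_ops / total_ops)  # 3276.8
```

Under these assumptions the combined layer costs roughly 3000 times fewer operations than the dense one, and the combining step, not the small layers, is the larger share of that cost.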

Then you can make nets like this: https://ai462qqq.blogspot.com/2023/04/switch-net-4-switch-net-n-combine.html