Hello!

I have a custom padding layer that fills the inputs/activations of my model with `constant_values = -numpy.inf` instead of 0, so that the following MaxPooling2D layer compares each activation value against -inf. After converting the model to TFLite with full-integer (int8) post-training quantization and loading it with the TFLite interpreter, I get the following output:

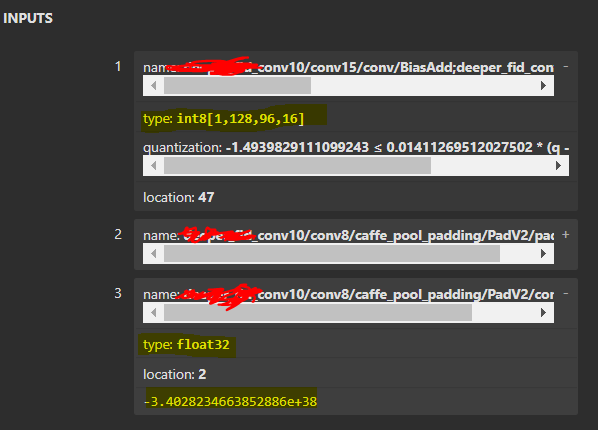

```
RuntimeError: tensorflow/lite/kernels/pad.cc:118 op_context.input->type != op_context.constant_values->type (INT8 != FLOAT32)
Node number 3 (PADV2) failed to prepare.
```

I guess the problem is that the padding cannot fill an int8 input with -inf. I would appreciate any advice on this: how can I pad an int8 input so that its values are compared against the equivalent of -inf in a quantized way?
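A minimal NumPy sketch of the idea, independent of TensorFlow/TFLite: padding with -inf before a VALID max pool makes the padding neutral, whereas zero padding leaks 0 into the border outputs when activations are negative. In the int8 domain, assuming standard affine quantization `real = scale * (q - zero_point)` with `scale > 0` (which preserves ordering), the int8 minimum -128 plays the role of -inf. The helpers `max_pool_2d` and `pad_for_pool` and the `scale`/`zero_point` values are hypothetical illustrations, not TFLite APIs.

```python
import numpy as np


def max_pool_2d(x, k):
    """Naive VALID max pooling: k x k window, stride 1, on a 2-D array."""
    h, w = x.shape
    out = np.empty((h - k + 1, w - k + 1), dtype=x.dtype)
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = x[i:i + k, j:j + k].max()
    return out


def pad_for_pool(x, pad, fill):
    """Pad both spatial sides of a 2-D array with a constant fill value."""
    return np.pad(x, pad, mode="constant", constant_values=fill)


# Float path: all activations are negative, so the padding value matters.
x = np.array([[-3.0, -1.0, -2.0],
              [-5.0, -4.0, -6.0],
              [-7.0, -8.0, -0.5]], dtype=np.float32)
pooled_inf = max_pool_2d(pad_for_pool(x, 1, -np.inf), 3)  # -inf never wins the max
pooled_zero = max_pool_2d(pad_for_pool(x, 1, 0.0), 3)     # 0 leaks into border outputs

# Int8 path with hypothetical quantization parameters of the activation.
scale, zero_point = 0.125, -10
q = np.clip(np.round(x / scale) + zero_point, -128, 127).astype(np.int8)

# -128 is the smallest int8 value, so as a padding value it never wins the max.
pooled_q = max_pool_2d(pad_for_pool(q, 1, np.iinfo(np.int8).min), 3)
dequant = scale * (pooled_q.astype(np.float32) - zero_point)

print(pooled_inf)
print(dequant)
```

After dequantization, the int8 result matches the float result padded with -inf, which is the behaviour the custom layer is after.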

Thank you in advance

==== Status 23.09.21 ====

After replacing the constant value -numpy.inf in the custom padding layer with x.dtype.min:

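```python
import tensorflow as tf

def call(self, x):
    # Pad only the spatial dimensions; the batch and channel axes are left unpadded.
    paddings = [[0, 0]] + self._explicit_pad + [[0, 0]]
    # Zero padding for convolutions, dtype minimum (stand-in for -inf) for pooling.
    return tf.pad(x, paddings=paddings, mode='CONSTANT',
                  constant_values=0 if self.layer_type == 'conv' else x.dtype.min)
```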
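Judging by the error message (`INT8 != FLOAT32`), the mismatch is in the type of the constant, not its value. A NumPy sketch, assuming standard affine int8 quantization and hypothetical `scale`/`zero_point` values: if the float fill constant were quantized with the input tensor's parameters, both the float32 minimum (what `x.dtype.min` gives for a float32 input) and -inf would saturate to -128. The helpers `quantize_int8` and `dequantize_int8` are illustrative, not TFLite functions.

```python
import numpy as np


def quantize_int8(value, scale, zero_point):
    """Affine int8 quantization: q = clip(round(value / scale) + zero_point, -128, 127)."""
    q = np.round(np.float64(value) / scale) + zero_point
    return np.int8(np.clip(q, np.iinfo(np.int8).min, np.iinfo(np.int8).max))


def dequantize_int8(q, scale, zero_point):
    """Inverse mapping: real = scale * (q - zero_point)."""
    return scale * (int(q) - zero_point)


# Hypothetical quantization parameters of the tensor fed into the pad layer.
scale, zero_point = 0.02, -5

# For a float32 input, x.dtype.min is the float32 minimum (about -3.4e38).
float32_min = np.finfo(np.float32).min

q_from_min = quantize_int8(float32_min, scale, zero_point)
q_from_inf = quantize_int8(-np.inf, scale, zero_point)

# Both saturate to -128, which dequantizes to the lowest value this tensor can hold.
print(q_from_min, q_from_inf, dequantize_int8(q_from_min, scale, zero_point))
```

In other words, an int8 constant of -128 would be the quantized counterpart of the float fill value; the PADV2 check fails because the constant tensor is still FLOAT32 in the converted model.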

For pooling layers, the quantized TFLite model still compares an int8 input with a float constant (x.dtype.min), and this is what I get when calling `tf.lite.Interpreter.allocate_tensors()`: