Hi, I am a beginner in RL and I feel like my DDQN agent is not learning at all and is almost always choosing the same action. I have no idea what I am doing wrong. If you have an idea of what I might be doing wrong, or any advice on what I can try to make it work better, I would appreciate it a lot.

About the project:

Environment

- the board is a rectangle of a given width and height with randomly placed food

- you can move STRAIGHT, LEFT, or RIGHT, depending on the direction the snake's head is facing

- if the snake's head collides with food, you get positive points and the snake's body grows

- if you eat all the food, you win

- if you hit the snake's tail or the board edge with the head, you lose
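To make the movement rule concrete, here is a minimal sketch of how a relative action could be turned into a new head direction. The names `HeadDirection`, `RelativeAction`, and `nextDirection` are illustrative and not necessarily what the repository uses, and it assumes LEFT and RIGHT mean a 90-degree turn from the snake's point of view.

```typescript
// Hypothetical names for illustration; the repository may use different ones.
type HeadDirection = "TOP" | "RIGHT" | "BOTTOM" | "LEFT";
type RelativeAction = "STRAIGHT" | "LEFT" | "RIGHT";

// Directions listed clockwise, so a right turn is +1 and a left turn is -1.
const CLOCKWISE: HeadDirection[] = ["TOP", "RIGHT", "BOTTOM", "LEFT"];

function nextDirection(current: HeadDirection, action: RelativeAction): HeadDirection {
  const step = action === "RIGHT" ? 1 : action === "LEFT" ? -1 : 0;
  const index = CLOCKWISE.indexOf(current);
  // Add 4 before the modulo so a left turn from TOP wraps around to LEFT.
  return CLOCKWISE[(index + step + 4) % 4];
}

console.log(nextDirection("TOP", "LEFT"));     // LEFT
console.log(nextDirection("LEFT", "LEFT"));    // BOTTOM
console.log(nextDirection("BOTTOM", "RIGHT")); // LEFT
```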

Points for action

- if the head collides with FOOD, gain 100 POINTS

- if the head moves onto a BLANK field, gain -100 POINTS

- if the head collides with the TAIL, gain -10 000 POINTS

- if the head collides with a WALL, gain -10 000 POINTS

- if all foods have been eaten, gain 1000 POINTS
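The reward table above could be written roughly like this. The names `MoveOutcome`, `REWARDS`, and `rewardFor` are hypothetical, and the sketch assumes that eating the last food yields only the 1000-point win reward rather than 100 + 1000; the list above does not say which one applies.

```typescript
// Hypothetical names; the numbers are the ones from the list above.
type MoveOutcome = "FOOD" | "BLANK" | "TAIL" | "WALL";

const REWARDS: Record<MoveOutcome, number> = {
  FOOD: 100,
  BLANK: -100,
  TAIL: -10000,
  WALL: -10000,
};
const ALL_FOOD_EATEN_REWARD = 1000;

function rewardFor(outcome: MoveOutcome, foodLeftAfterMove: number): number {
  // Assumption: the win reward replaces the normal food reward on the last food.
  if (outcome === "FOOD" && foodLeftAfterMove === 0) {
    return ALL_FOOD_EATEN_REWARD;
  }
  return REWARDS[outcome];
}

console.log(rewardFor("FOOD", 2));  // 100
console.log(rewardFor("FOOD", 0));  // 1000
console.log(rewardFor("WALL", 3));  // -10000
```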

DQN input

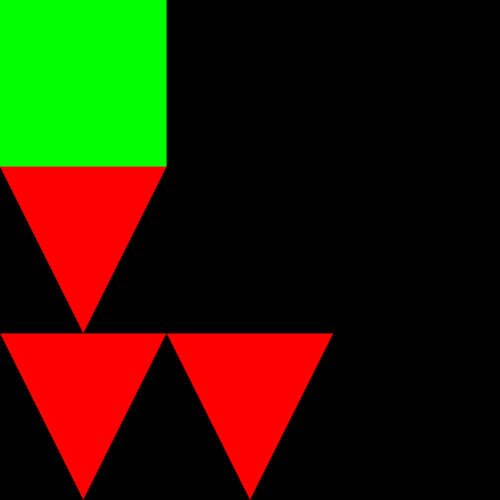

Let’s say I have created a 3x3 board with 3 foods, which looks like the image above.

Then the input of my DQN network is a vector with 9 values, where each value represents the current state of a board cell:

- Snake Head is 3, 4, 5, or 6 depending on direction (BOTTOM = 3, LEFT = 4, RIGHT = 5, TOP = 6)

- FOOD is 2

- Snake Body Part other than the head is 1

So for the board above, the input vector will be:

[

5, 0, 0

2, 0, 0

2, 2, 0

]

Before I pass this to my DQN, I convert the vector to a Tensor of rank 2 and shape [1, 9]. When I train on replay memory, I have a Tensor of rank 2 and shape [batchSize, 9].
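As a plain-array illustration of those two shapes (no tensor library involved), the example board can be flattened and batched as below. The helper names `flattenBoard` and `batchShape` are made up for this sketch, and treating 0 as the code for an empty cell is inferred from the example vector.

```typescript
// The 3x3 example board as rows of cell codes. 0 for an empty cell is
// inferred from the example vector; 2 = food, 5 = head facing RIGHT.
const exampleBoard: number[][] = [
  [5, 0, 0],
  [2, 0, 0],
  [2, 2, 0],
];

// Flatten row by row into one state vector of length width * height.
function flattenBoard(cells: number[][]): number[] {
  const flat: number[] = [];
  for (const row of cells) {
    for (const cell of row) flat.push(cell);
  }
  return flat;
}

// Shape of a rank-2 batch: [number of states, values per state].
function batchShape(batch: number[][]): [number, number] {
  return [batch.length, batch.length > 0 ? batch[0].length : 0];
}

const stateVector = flattenBoard(exampleBoard);
const singleState = [stateVector]; // what the network sees when acting
// Stand-in for a replay batch with batchSize = 4.
const replayBatch = [stateVector, stateVector, stateVector, stateVector];

console.log(batchShape(singleState)); // [ 1, 9 ]
console.log(batchShape(replayBatch)); // [ 4, 9 ]
```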

DQN Output

My DQN output size is equal to the total number of actions I can take, which in this scenario is 3 (STRAIGHT, RIGHT, LEFT).
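For reference, turning the three outputs into an action with epsilon-greedy selection might look like the sketch below. It assumes the output indices follow the order STRAIGHT, RIGHT, LEFT; `chooseAction` and `argMax` are illustrative names, and the `random` parameter only exists so the function can be tested deterministically.

```typescript
// Assumption: output index 0 = STRAIGHT, 1 = RIGHT, 2 = LEFT.
const ACTIONS = ["STRAIGHT", "RIGHT", "LEFT"] as const;
type SnakeAction = (typeof ACTIONS)[number];

// Index of the largest value; ties resolve to the lowest index.
function argMax(values: number[]): number {
  let best = 0;
  for (let i = 1; i < values.length; i++) {
    if (values[i] > values[best]) best = i;
  }
  return best;
}

function chooseAction(
  qValues: number[],
  epsilon: number,
  random: () => number = Math.random,
): SnakeAction {
  // Explore with probability epsilon, otherwise take the greedy action.
  if (random() < epsilon) {
    return ACTIONS[Math.floor(random() * ACTIONS.length)];
  }
  return ACTIONS[argMax(qValues)];
}

console.log(chooseAction([0.1, 0.9, -0.2], 0)); // RIGHT (epsilon 0 is always greedy)
```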

Implementation

Before I start using my DDQN agent, I first create onlineNetwork and targetNetwork and fill the ReplayMemory buffer. Then, at the beginning of every epoch, I reset my Environment and the agent plays in the environment until it loses or wins. After every action the agent takes, I train on a batch of replay memories and increase the copyWeights counter (once this counter is a multiple of updateWeightsIndicator, the weights are copied from onlineNetwork to targetNetwork). At the end of every epoch, I add the new game score to a buffer holding the last 100 results and decrease epsilon.
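For comparison, this is the standard Double DQN training target written out on plain arrays: the online network picks the best next action and the target network evaluates it, with no bootstrapping on terminal moves. It is a sketch of the textbook update, not a copy of what the repository does; `Transition`, `doubleDqnTarget`, and `shouldCopyWeights` are hypothetical names.

```typescript
interface Transition {
  reward: number;
  done: boolean; // true when the move ended the game (win or loss)
}

// Double DQN target for one transition:
//   y = r                              if the episode ended
//   y = r + gamma * Q_target(s', a*)   otherwise, with a* = argmax_a Q_online(s', a)
function doubleDqnTarget(
  transition: Transition,
  onlineNextQ: number[],
  targetNextQ: number[],
  gamma: number,
): number {
  if (transition.done) return transition.reward;
  let bestAction = 0;
  for (let a = 1; a < onlineNextQ.length; a++) {
    if (onlineNextQ[a] > onlineNextQ[bestAction]) bestAction = a;
  }
  return transition.reward + gamma * targetNextQ[bestAction];
}

// Hypothetical counter check: sync the target network every
// `updateWeightsIndicator` training steps.
function shouldCopyWeights(copyWeightsCounter: number, updateWeightsIndicator: number): boolean {
  return copyWeightsCounter > 0 && copyWeightsCounter % updateWeightsIndicator === 0;
}

// The online network prefers action 1, so the target network's value for action 1 is used.
console.log(doubleDqnTarget({ reward: 100, done: false }, [1, 5, 2], [10, 20, 30], 0.5)); // 110
console.log(doubleDqnTarget({ reward: -10000, done: true }, [1, 5, 2], [10, 20, 30], 0.5)); // -10000
```

Note that the second call ignores both Q-value arrays: a move that ends the game contributes only its immediate reward.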

What I have tried

- normalize my input vector to values between 0 and 1

- create a vector with only foods marked

- create a vector where I used rewards to replace the positions of snake body parts and food

- add two additional columns and rows and fill them with the WALL reward

- change the size of the hidden dense layers

None of the above helped.
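To show what two of these attempts mean in practice, here is a rough sketch of the normalization and the wall padding on plain arrays. Dividing by 6 assumes the cell codes described earlier (0 to 6), and `normalizeState` and `padWithWalls` are made-up helper names rather than functions from the repository.

```typescript
// Highest cell code in the encoding described earlier (head facing TOP).
const MAX_CELL_CODE = 6;

// Scale every cell code into the range [0, 1].
function normalizeState(stateVector: number[]): number[] {
  return stateVector.map((code) => code / MAX_CELL_CODE);
}

// Surround the board with one extra row and column on each side.
function padWithWalls(cells: number[][], wallValue: number): number[][] {
  const width = cells.length > 0 ? cells[0].length : 0;
  const wallRow = (): number[] => new Array<number>(width + 2).fill(wallValue);
  const padded: number[][] = [wallRow()];
  for (const row of cells) {
    padded.push([wallValue, ...row, wallValue]);
  }
  padded.push(wallRow());
  return padded;
}

console.log(normalizeState([3, 0, 6])); // [ 0.5, 0, 1 ]
console.log(padWithWalls([[5, 0], [2, 0]], -10000).length); // 4
```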

Attachments

My GitHub repository is kaczor6418/snake-game (https://github.com/kaczor6418/snake-game), a simple snake game created to learn about WebGL and reinforcement learning algorithms. I think the most likely places where I have made a mistake are the ReinforcementAgent class or the DoubleDeepQLearningAgent class inside the src/agents directory. In App.ts, runSnakeGameWithDDQLearningAgent contains the configuration of my DDQN agent.

To use this repository:

git clone https://github.com/kaczor6418/snake-game.git

cd ./snake-game

npm install

npm run serve

Or maybe I am just not patient enough, and 10k epochs (10k played games) is not enough to learn such an environment?

If you need any additional explanation, please let me know in the comments and I will answer.