After running into OOM errors with the TensorFlow Object Detection API on an NVIDIA RTX 3080 (10 GB), I bought an RTX 4090 (24 GB). I'm currently running both together, but I noticed that in high-batch-size runs I'm using almost all of the 3080 and only varying amounts of the 4090. Ideally I'd like to use all of both cards so I can push the batch size as high as possible. I can't find a way to change the strategy so that the GPUs take different loads: the mirrored strategies seem to split each batch evenly, giving each GPU the same amount of data to process per step. Is there a way to give one GPU a larger share and the other a smaller one?

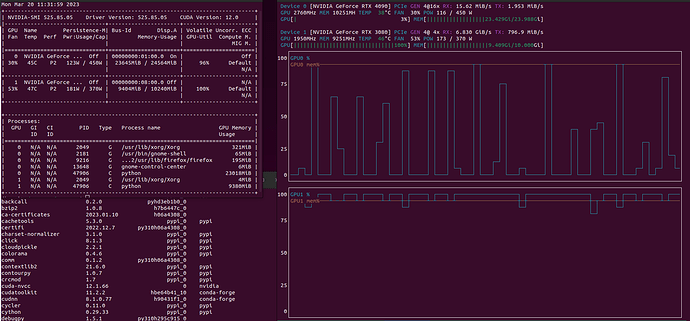
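To make the question concrete, here is a minimal sketch in plain Python (no TensorFlow involved) of the difference between the even split I'm seeing and the uneven split I'm after. It assumes memory use scales roughly linearly with per-GPU batch size, which is only an approximation. `even_split` and `proportional_split` are hypothetical helpers for illustration, not TensorFlow APIs.

```python
def even_split(global_batch: int, num_gpus: int) -> list[int]:
    """Split a global batch evenly across GPUs (what I observe with the mirrored strategies).

    For simplicity this sketch requires the batch to divide evenly.
    """
    if global_batch % num_gpus != 0:
        raise ValueError("global batch must be divisible by the number of GPUs")
    return [global_batch // num_gpus] * num_gpus


def proportional_split(global_batch: int, mem_gb: list[float]) -> list[int]:
    """Hypothetical uneven split: each GPU's share is proportional to its memory.

    Assumes memory use grows roughly linearly with batch size.
    """
    total = sum(mem_gb)
    # Round each share down, then give any leftover samples to the largest card.
    shares = [int(global_batch * m / total) for m in mem_gb]
    shares[mem_gb.index(max(mem_gb))] += global_batch - sum(shares)
    return shares


if __name__ == "__main__":
    # GPU 0 = RTX 4090 (24 GB), GPU 1 = RTX 3080 (10 GB)
    print(even_split(48, 2))                # both cards get the same share
    print(proportional_split(48, [24, 10]))  # the 4090 gets the larger share
```

With an even split, the 10 GB card hits its memory limit first, which caps the global batch at twice whatever fits on the 3080 and leaves part of the 4090 idle. The proportional version is what I'd like the strategy to do.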

My machine and environment specs are as follows:

- OS = Ubuntu 22.04
- GPUs = [0: RTX 4090, 1: RTX 3080]
- python = 3.10.9
- cudatoolkit = 11.2.2 (installed through Anaconda)
- cudnn = 8.1.0.77 (installed through Anaconda)

I’m fairly new to this, so any help is appreciated. If I’ve left out any useful information, please let me know and I’ll edit the post accordingly. Thanks in advance.

Derek