The scale of data with which we operate at Carted requires us to think outside the box. In pursuit of doing that, today we’re sharing recipes to significantly speed up the training of sequence models in TensorFlow.

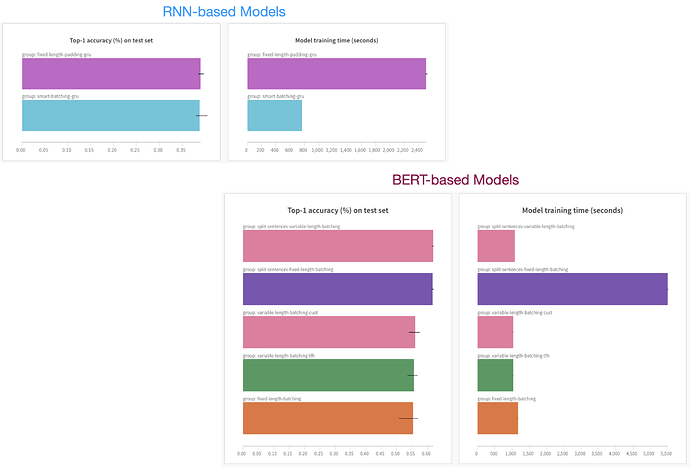

We tested our recipes on RNN-based as well as BERT-based models to confirm their effectiveness. Central to these recipes is the idea of dynamic padding in batches, which we found to be quite non-trivial to implement in TensorFlow. But now, we have a solution! Here’s a summary of the gains we were able to obtain with the techniques we implemented:
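To make the dynamic-padding idea concrete, here is a minimal, framework-agnostic sketch in plain Python. It is not the implementation from the linked code. All names (`PAD_ID`, `pad_batch`, `static_batches`, `dynamic_batches`, `total_slots`) are hypothetical, and padding id 0 is an assumption. It compares static padding (every batch padded to one dataset-wide length) with dynamic padding (each batch padded only to its own longest sequence) by counting the token positions a model would have to process. In TensorFlow, the closest built-ins are `tf.data.Dataset.padded_batch`, which pads each batch to its longest element when `padded_shapes` is left unset, and `tf.data.Dataset.bucket_by_sequence_length`.

```python
from typing import List, Sequence

# Hypothetical padding token id used only for this illustration.
PAD_ID = 0

Batch = List[List[int]]


def pad_batch(batch: Sequence[Sequence[int]], pad_to: int, pad_id: int = PAD_ID) -> Batch:
    """Truncate and/or right-pad every sequence in `batch` to exactly `pad_to` tokens."""
    rows = []
    for seq in batch:
        row = list(seq[:pad_to])                        # truncate if too long
        rows.append(row + [pad_id] * (pad_to - len(row)))  # right-pad if too short
    return rows


def static_batches(seqs: Sequence[Sequence[int]], batch_size: int, max_len: int) -> List[Batch]:
    """Static padding: every batch is padded to one fixed, dataset-wide max_len."""
    return [pad_batch(seqs[i:i + batch_size], max_len)
            for i in range(0, len(seqs), batch_size)]


def dynamic_batches(seqs: Sequence[Sequence[int]], batch_size: int,
                    sort_by_length: bool = False) -> List[Batch]:
    """Dynamic padding: each batch is padded only to its own longest sequence.

    With sort_by_length=True, sequences of similar length end up in the same
    batch, which cuts padding further (a crude form of length bucketing).
    """
    ordered = sorted(seqs, key=len) if sort_by_length else list(seqs)
    batches = []
    for i in range(0, len(ordered), batch_size):
        chunk = ordered[i:i + batch_size]
        batches.append(pad_batch(chunk, max(len(s) for s in chunk)))
    return batches


def total_slots(batches: List[Batch]) -> int:
    """Token positions the model actually processes (real tokens + padding)."""
    return sum(len(row) for batch in batches for row in batch)


if __name__ == "__main__":
    # Six toy sequences with 19 real tokens in total.
    seqs = [[5, 3], [7, 1, 4, 9, 2, 6], [8], [2, 2, 2], [1, 1, 1, 1, 1], [3, 4]]
    print(total_slots(static_batches(seqs, 2, max_len=6)))             # 36
    print(total_slots(dynamic_batches(seqs, 2)))                       # 28
    print(total_slots(dynamic_batches(seqs, 2, sort_by_length=True)))  # 22
```

On this toy data, dynamic padding shrinks the work from 36 to 28 token positions, and grouping by length brings it down to 22 (only 3 padding tokens). Fully sorting by length removes randomness from batch composition. Practical setups usually bucket by length and then shuffle within or across buckets instead.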

Code, notebooks, and articles are all here:

Work done at Carted by @anon1529149 and me.