Hey, I fine-tuned a wav2vec model using TensorFlow and exported it to my drive. I want to import it back and add a signature, but I can't figure out how to define the inputs and outputs.

Any help would be welcome.

To learn how to specify a signature for your model, see: Using the SavedModel format | TensorFlow Core
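Here is a minimal sketch of what that guide describes, assuming TensorFlow 2.x. `TinySpeechModel`, `speech`, and `logits` are hypothetical names, not the wav2vec model's. A `tf.function` with an `input_signature` fixes the input names, dtypes, and shapes. The keys of the dict it returns become the output names. After reloading, the signature is called with keyword arguments and returns a dict. For the model in this thread, the corresponding keys turn out to be `input_1` and `output_1`.

```python
import tempfile

import numpy as np
import tensorflow as tf


class TinySpeechModel(tf.Module):
    """Hypothetical stand-in for the fine-tuned wav2vec model."""

    def __init__(self):
        super().__init__()
        self.scale = tf.Variable(2.0)

    # input_signature fixes what the exported signature accepts:
    # float32, any batch size (None), 4 samples per row.
    @tf.function(input_signature=[tf.TensorSpec(shape=[None, 4], dtype=tf.float32, name="speech")])
    def serve(self, speech):
        # The keys of the returned dict become the signature's output names.
        return {"logits": speech * self.scale}


module = TinySpeechModel()
export_dir = tempfile.mkdtemp()
tf.saved_model.save(module, export_dir, signatures={"serving_default": module.serve})

# After reloading, look up the signature by key and call it with keyword arguments.
loaded = tf.saved_model.load(export_dir)
serve_fn = loaded.signatures["serving_default"]
print(serve_fn.structured_input_signature)  # the input names and TensorSpecs
print(serve_fn.structured_outputs)          # the output names and TensorSpecs
outputs = serve_fn(speech=tf.constant([[1.0, 2.0, 3.0, 4.0]]))
print(outputs["logits"].numpy())  # [[2. 4. 6. 8.]]
```

Passing the `tf.function` itself in `signatures` works here because it has an `input_signature`. Without one, you would pass `module.serve.get_concrete_function(...)` with explicit `tf.TensorSpec`s instead.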

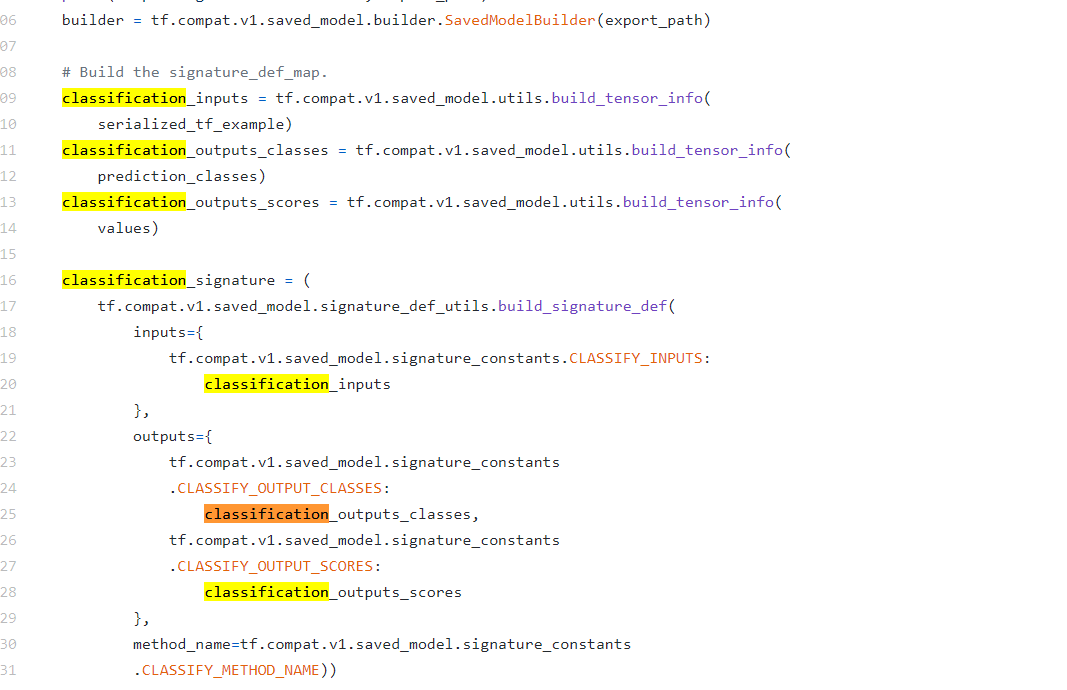

Hey, I went through it, but it didn't show how to make a signature like this:

I want to do the same for wav2vec, but I'm having trouble figuring out what should go in the inputs and outputs dicts.

This is a more complex signature. What I'd do is take a step back and first understand how signatures work.

maybe this post can help: A Tour of SavedModel Signatures — The TensorFlow Blog

I read the blog post and a few others and found out how to inspect my current signatures. My SavedModel gave me the output below. What would I need to change to just pass in a loaded sound file and get the predictions back?

Put another way: given that this is my default signature, how do I feed the input tensor and read the output tensor?

    MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

    signature_def['__saved_model_init_op']:
      The given SavedModel SignatureDef contains the following input(s):
      The given SavedModel SignatureDef contains the following output(s):
        outputs['__saved_model_init_op'] tensor_info:
            dtype: DT_INVALID
            shape: unknown_rank
            name: NoOp
      Method name is:

    signature_def['serving_default']:
      The given SavedModel SignatureDef contains the following input(s):
        inputs['input_1'] tensor_info:
            dtype: DT_FLOAT
            shape: (-1, 246000)
            name: serving_default_input_1:0
      The given SavedModel SignatureDef contains the following output(s):
        outputs['output_1'] tensor_info:
            dtype: DT_FLOAT
            shape: (-1, 768, 32)
            name: StatefulPartitionedCall:0
      Method name is: tensorflow/serving/predict
    WARNING: Logging before flag parsing goes to stderr.
    W0810 10:24:07.810713 140706217662336 deprecation.py:506] From /usr/local/lib/python2.7/dist-packages/tensorflow_core/python/ops/resource_variable_ops.py:1786: calling __init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.
    Instructions for updating:
    If using Keras pass *_constraint arguments to layers.

    Defined Functions:
      Function Name: '__call__'
        Option #1
          Callable with:
            Argument #1
              speech: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'speech')
            Argument #2
              DType: bool
              Value: False
        Option #2
          Callable with:
            Argument #1
              input_1: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'input_1')
            Argument #2
              DType: bool
              Value: False
        Option #3
          Callable with:
            Argument #1
              input_1: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'input_1')
            Argument #2
              DType: bool
              Value: True
        Option #4
          Callable with:
            Argument #1
              speech: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'speech')
            Argument #2
              DType: bool
              Value: True

      Function Name: '_default_save_signature'
        Option #1
          Callable with:
            Argument #1
              input_1: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'input_1')

      Function Name: 'call'
        Option #1
          Callable with:
            Argument #1
              speech: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'speech')
            Argument #2
              DType: bool
              Value: True
        Option #2
          Callable with:
            Argument #1
              speech: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'speech')
            Argument #2
              DType: bool
              Value: False

      Function Name: 'call_and_return_all_conditional_losses'
        Option #1
          Callable with:
            Argument #1
              input_1: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'input_1')
            Argument #2
              DType: bool
              Value: False
        Option #2
          Callable with:
            Argument #1
              input_1: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'input_1')
            Argument #2
              DType: bool
              Value: True
        Option #3
          Callable with:
            Argument #1
              speech: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'speech')
            Argument #2
              DType: bool
              Value: True
        Option #4
          Callable with:
            Argument #1
              speech: TensorSpec(shape=(None, 246000), dtype=tf.float32, name=u'speech')
            Argument #2

Also, a question: if signatures are just functions attached to a SavedModel, and this works:

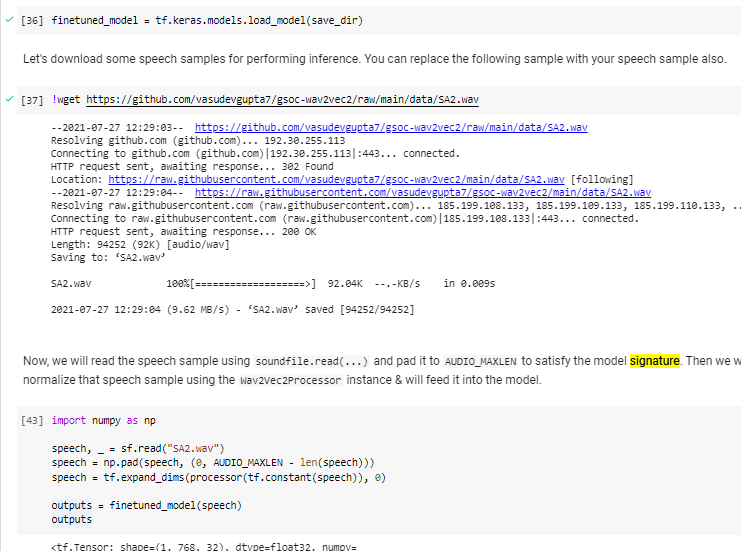

Does that mean the predefined signature is all I need? My final goal is to load this model in Scala, so I need to define a signature that lets me do the processing shown here from Scala.

For audio data, the file is usually loaded as a float array outside the model, and the model input is that array, typically normalized to the range [-1, 1].

Here's an example of this: Sound classification with YAMNet | TensorFlow Hub

You can also look at YAMNet's signature for inspiration.
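A minimal sketch of that loading step using only the Python standard library. It assumes a mono, 16-bit PCM WAV file that is already at the sample rate the model was trained on (16 kHz is typical for wav2vec 2.0 checkpoints, but check yours). `wav_bytes_to_floats` and `make_test_wav` are hypothetical helpers, not part of any TensorFlow API.

```python
import array
import io
import struct
import sys
import wave


def wav_bytes_to_floats(data):
    """Decode mono 16-bit PCM WAV bytes into a list of floats in [-1.0, 1.0)."""
    with wave.open(io.BytesIO(data), "rb") as wav:
        if wav.getnchannels() != 1 or wav.getsampwidth() != 2:
            raise ValueError("expected mono 16-bit PCM audio")
        frames = wav.readframes(wav.getnframes())
    samples = array.array("h")  # signed 16-bit integers
    samples.frombytes(frames)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    # int16 spans [-32768, 32767]; dividing by 32768 maps it into [-1.0, 1.0).
    return [s / 32768.0 for s in samples]


def make_test_wav(samples, rate=16000):
    """Build an in-memory mono 16-bit WAV file (hypothetical test helper)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))
    return buf.getvalue()


floats = wav_bytes_to_floats(make_test_wav([0, 16384, -32768, 32767]))
print(floats)  # [0.0, 0.5, -1.0, 0.999969482421875]
```

For real files you would pass the bytes read from disk, e.g. `open(path, "rb").read()`. Resampling and multi-channel audio are out of scope for this sketch.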

Okay, thanks. I'll update you soon.

Okay, so here is what I've got:

From my understanding, a signature is basically a function we attach to a model so that we can use it when we reload it. Here I already have a signature defined inside my model:

As I understand it, it takes the padded float array with the shape shown. But why is the shape (-1, 246000) instead of (1, 246000), and why am I having issues using it? Also, from what I understood, you can assign functions as signatures. So should I just add this processing inside the serving signature? Would that be possible?
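In `saved_model_cli` output, `-1` marks a dimension whose size is not fixed. It corresponds to the `None` in the `TensorSpec(shape=(None, 246000), ...)` entries, and here it is the batch dimension. So a single clip is fed as a batch of one with shape (1, 246000), and every row must hold exactly 246000 samples. A plain-Python sketch, assuming zero-padding at the end is acceptable for this model (the helper names are hypothetical):

```python
# 246000 comes from the serving_default signature, shape (-1, 246000).
TARGET_LEN = 246000


def pad_or_trim(samples, target_len=TARGET_LEN, pad_value=0.0):
    """Return exactly target_len samples: truncate long clips, pad short ones at the end."""
    clipped = list(samples[:target_len])
    return clipped + [pad_value] * (target_len - len(clipped))


def make_batch(clips, target_len=TARGET_LEN):
    """Build a (batch_size, target_len) nested list.

    batch_size is the free (-1) dimension, so any number of clips is allowed,
    but every row must contain exactly target_len samples.
    """
    return [pad_or_trim(clip, target_len) for clip in clips]


single = make_batch([[0.1, -0.2, 0.3]])
print(len(single), len(single[0]))  # 1 246000
```

To feed the signature, this nested list would then be converted to a float32 tensor, e.g. `tf.constant(single, dtype=tf.float32)`, and passed by keyword as `input_1=`.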

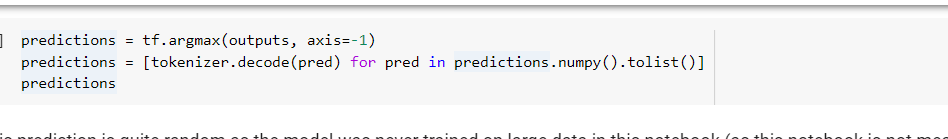

I have to use this to convert the model's outputs back into tokens, and I thought it would be nice if the signature could handle this too.
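The output shape (-1, 768, 32) most likely reads as (batch, frames, vocabulary): 768 time steps, each with a score for 32 tokens. A common way to turn that into text for a CTC-trained wav2vec 2.0 model is greedy decoding. This sketch assumes, as Hugging Face's wav2vec 2.0 CTC tokenizers commonly do, that `<pad>` at index 0 is the CTC blank and `|` separates words. The 4-token vocabulary is made up for the example; the real mapping should come from the tokenizer used during fine-tuning.

```python
def greedy_ctc_decode(logits, vocab, blank_id=0, word_delimiter="|"):
    """Greedy CTC decoding for one utterance.

    logits: one row per frame (e.g. 768 frames), each with len(vocab) scores (e.g. 32).
    """
    # 1. Most likely token id for every frame (argmax over the vocabulary axis).
    ids = [max(range(len(frame)), key=frame.__getitem__) for frame in logits]
    # 2. Collapse consecutive repeats and 3. drop the CTC blank token.
    tokens = []
    prev = None
    for token_id in ids:
        if token_id != prev and token_id != blank_id:
            tokens.append(vocab[token_id])
        prev = token_id
    # 4. Turn word delimiters into spaces.
    return "".join(tokens).replace(word_delimiter, " ").strip()


def one_hot(index, size):
    """Fake one-frame logits that put all the score on one token (test helper)."""
    return [1.0 if i == index else 0.0 for i in range(size)]


# Hypothetical 4-token vocabulary: 0 = blank (<pad>), 1 = word delimiter.
vocab = ["<pad>", "|", "H", "I"]
frames = [one_hot(i, len(vocab)) for i in [2, 2, 3, 1, 0, 2, 0, 3, 3]]
print(greedy_ctc_decode(frames, vocab))  # HI HI
```

Only the argmax step maps onto a single TensorFlow op (`tf.argmax(logits, axis=-1)`). One option is therefore to return token ids from the signature and keep the string mapping in the client (Scala, in this case).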

Looking forward to your response.

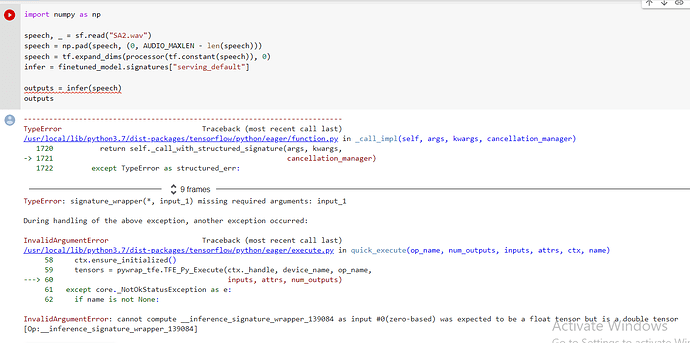

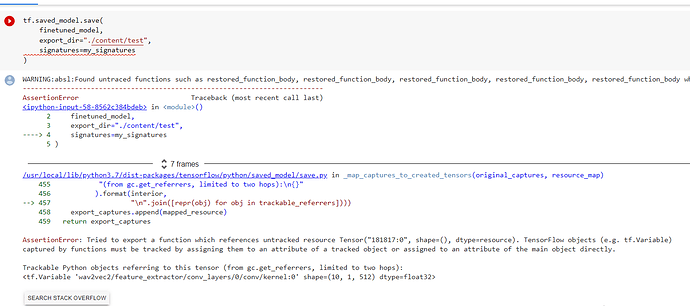

Here is a draft of the signature I plan to use, and the problem I'm facing now:

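    import tensorflow as tf

    # `model` is assumed to be the fine-tuned wav2vec model loaded earlier.
    # The input_signature mirrors its serving_default input: float32, shape (None, 246000).
    @tf.function(input_signature=[
        tf.TensorSpec(shape=[None, 246000], dtype=tf.float32)
    ])
    def my_predict(my_prediction_inputs):
        # The saved call functions take the audio tensor positionally
        # (`speech` / `input_1`), not a dict keyed by 'input_1'.
        prediction = model(my_prediction_inputs, training=False)
        return {"output_2": prediction}

    # With an input_signature set, no example tensor is needed to trace.
    my_signatures = my_predict.get_concrete_function()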

I found this, which may help: TensorFlow Hub

The model is published on TF Hub, so using that version directly might be easier.

Hey, thanks. I was already using this, and I found out what the problem was: the data type was getting messed up, so changing it to 64-bit made it work, for now at least.

Thanks for all your help, I really appreciate it.