I have a time-series dataset with different input types (numeric features and ID/geographic grouping variables) that I want to feed into the same model using embeddings and LSTMs.

I followed the TensorFlow documentation to build a dummy model with the features I want, but I am having issues with the training/validation input shapes.

import geopandas as gpd

import numpy as np

import pandas as pd

from urllib import request as rq

import tensorflow as tf

from tensorflow import keras

from keras import layers

from keras import Input

rq.urlretrieve("https://raw.githubusercontent.com/Nowosad/spData/dd7634092a150e5d77d4d9b5d28fe77b739643ad/inst/shapes/boston_tracts.dbf", "~boston_tracts.dbf")

bstn = gpd.read_file(filename="~boston_tracts.dbf")

bs2 = bstn.copy()

bs2[["CRIM", "ZN", "INDUS", "NOX", "RM", "AGE", "DIS", "RAD", "TAX", "PTRATIO", "LSTAT", "units", "cu5k", "c5_7_5", "C7_5_10", "C10_15", "C15_20", "C20_25", "C25_35", "C35_50", "co50k", "median", "BB", "POP", "LAT", "LON"]] = bs2[["CRIM", "ZN", "INDUS", "NOX", "RM", "AGE", "DIS", "RAD", "TAX", "PTRATIO", "LSTAT", "units", "cu5k", "c5_7_5", "C7_5_10", "C10_15", "C15_20", "C20_25", "C25_35", "C35_50", "co50k", "median", "BB", "POP", "LAT", "LON"]].apply(np.float32)

bs2 = pd.concat(objs=[bs2, pd.get_dummies(data=bs2.censored, prefix="censored")], axis=1)

bs2[["NOX_ID", "TOWNNO"]] = bs2[["NOX_ID", "TOWNNO"]].astype(int).astype(str)

bs2[["CHAS", "censored_left", "censored_right"]] = bs2[["CHAS", "censored_left", "censored_right"]].apply(np.int8)

bs2 = bs2.drop(labels=["censored", "censored_no", "LAT", "LON", "geometry", "poltract", "CMEDV", "MEDV", "TOWN", "B", "TRACT"], axis=1).dropna()

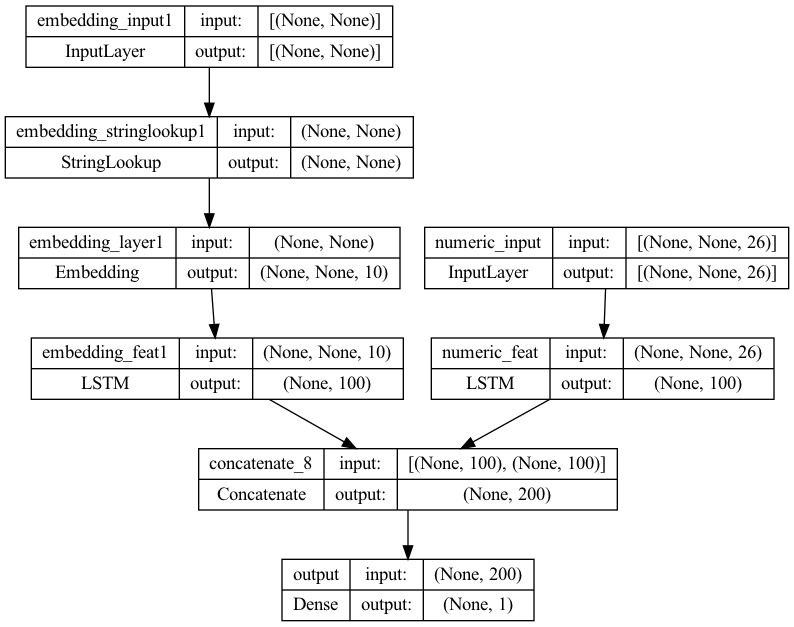

embedding_input1 = Input(shape=(None,), name="embedding_input1")

embedding_feat1 = layers.StringLookup(vocabulary=bs2.TOWNNO.unique(), name="embedding_stringlookup1")(embedding_input1)

embedding_feat1 = layers.Embedding(input_dim=len(bs2.TOWNNO.unique()), output_dim=10, name="embedding_layer1")(embedding_feat1)

embedding_feat1 = layers.LSTM(units=100, name="embedding_feat1")(embedding_feat1)

numeric_input = Input(shape=(None, 26), name="numeric_input")

numeric_feat = layers.LSTM(units=100, name="numeric_feat")(numeric_input)

x = layers.concatenate(inputs=[numeric_feat, embedding_feat1])

outputs = layers.Dense(units=1, name="output")(x)

model = keras.Model(inputs=[numeric_input, embedding_input1], outputs=outputs, name="multi_input_model")

keras.utils.plot_model(model, "multi_input_and_output_model.png", show_shapes=True)

model.compile(

loss=keras.losses.mean_squared_error,

optimizer=keras.optimizers.RMSprop(),

metrics=["mean_squared_error"]

)

history = model.fit(

x={

"numeric_input":bs2.drop(columns=["median", "TOWNNO", "NOX_ID"]).values.reshape(-1, 489, 26),

"embedding_input1":bs2["TOWNNO"].values.reshape(-1,489,1),

},

y={"outputs":bs2[["median"]].values.reshape(-1,489,1)},

batch_size=30,

epochs=10,

validation_split=0.2

)

Calling `model.fit` raises:

ValueError: Training data contains 1 samples, which is not sufficient to split it into a validation and training set as specified by 'validation_split=0.2'. Either provide more data, or a different value for the 'validation_split' argument.
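The "1 samples" in the message appears to follow from the reshape arguments. Here is a minimal sketch of the shape arithmetic, assuming the frame has 489 rows left after `dropna()` (inferred from the `reshape(-1, 489, ...)` calls, not verified) and using a zero array as a stand-in for the real columns. Keras treats the first axis as the sample axis, so an array of shape `(1, 489, 26)` is a single sample. The second reshape is only a hypothetical alternative for comparison, not necessarily the right sequence layout for this data.

```python
import numpy as np

# Assumption: 489 rows remain after dropna(); 26 numeric columns as in numeric_input.
n_rows, n_features = 489, 26
numeric = np.zeros((n_rows, n_features), dtype=np.float32)  # stand-in for the real data

# reshape(-1, 489, 26) packs all 489 rows into one sequence of 489 timesteps,
# so the leading (sample) axis has length 1.
as_one_sequence = numeric.reshape(-1, 489, n_features)
print(as_one_sequence.shape)

# Hypothetical alternative: treat each row as its own sample with a single timestep,
# which gives 489 samples that validation_split can divide.
one_step_each = numeric.reshape(-1, 1, n_features)
print(one_step_each.shape)
```

With only one sample on the first axis, `validation_split=0.2` has nothing to split, which matches the error text.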