I downloaded the Boston house price data as a CSV file to practice TensorFlow. The CSV has 13 columns, and column 13 is the price.

I preprocessed the data and fed it into the model for training.

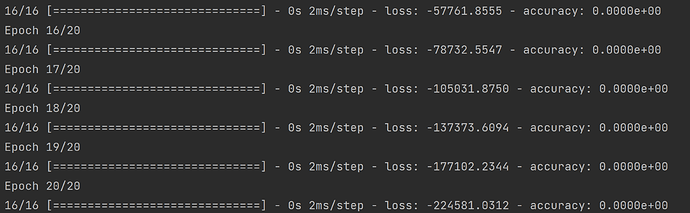

However, the loss is negative, and its absolute value grows with every epoch. Meanwhile, the accuracy stays at 0.

Is there something wrong with how I process the CSV data or how I build the model?

Please help!

    import tensorflow as tf
    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt

    f = open('bostonHouse.csv')
    df = pd.read_csv(f)
    data = np.array(df)

    plt.figure()
    plt.plot(data)
    plt.show()

    normalize_data = (data - np.mean(data)) / np.std(data)
    normalize_data = normalize_data[:, np.newaxis]

    train_x, train_y = [], []
    for i in range(len(normalize_data)):
        x = normalize_data[i][0][:12]
        y = normalize_data[i][0][12]
        train_x.append(x.tolist())
        train_y.append(y.tolist())

    print("train_x data:{}".format(train_x[:1]))
    print("train_y data:{}".format(train_y[:1]))
    print('train x len', len(train_x[0]))

    model = tf.keras.Sequential([
        tf.keras.layers.Dense(128, activation='relu', input_shape=(12,)),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(1, activation='sigmoid')
    ])

    model.compile(
        loss="binary_crossentropy",
        optimizer='adam',
        metrics=['accuracy'],
    )

    model.summary()

    model.fit(x=train_x, y=train_y, epochs=20)
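
A note on the likely cause, offered as a sketch rather than a confirmed diagnosis: the price is a continuous value, so this looks like a regression problem, while `binary_crossentropy` with a sigmoid output expects labels in [0, 1]. With targets outside that range, the cross-entropy has no lower bound, which would match a loss that keeps getting more negative. The `accuracy` metric probably stays at 0 for a related reason, since continuous targets essentially never equal a rounded 0 or 1 prediction. The NumPy snippet below illustrates the effect. The target value `-0.33` and the helpers `binary_crossentropy`, `mean_squared_error`, and `standardize_columns` are hypothetical and written only for this illustration; they are not the Keras implementations.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy, written out in NumPy for illustration."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    per_sample = -(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))
    return float(np.mean(per_sample))


def mean_squared_error(y_true, y_pred):
    """Mean squared error, a common regression loss that cannot go below 0."""
    return float(np.mean((y_true - y_pred) ** 2))


def standardize_columns(data):
    """Standardize each column with its own mean and std (hypothetical helper)."""
    return (data - data.mean(axis=0)) / data.std(axis=0)


# Hypothetical normalized price; any target outside [0, 1] behaves similarly.
y_true = np.array([-0.33])

# As the sigmoid output moves towards 0, the cross-entropy keeps falling below 0,
# while the squared error stays non-negative.
for p in (0.1, 0.01, 0.001):
    y_pred = np.array([p])
    print(p, binary_crossentropy(y_true, y_pred), mean_squared_error(y_true, y_pred))
```

If this is indeed the cause, the analogous change in the Keras model would be a final `tf.keras.layers.Dense(1)` with no activation, `loss='mse'`, and a regression metric such as `'mae'` in place of `'accuracy'`. Separately, `np.mean(data)` and `np.std(data)` normalize every column with one global mean and standard deviation; per-column statistics, as in `standardize_columns`, are the more usual choice when features have very different scales.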