Hello, I am trying to integrate a transfer-learned model with an HTML/React front end, using the tensorflow-serving Docker container, for a university project. I believe the container is set up properly (it worked with the test files), but I am having trouble posting JSON to the predict endpoint. Whenever I POST to the URL I get a 404 error, even though curling the URL works fine.
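For context, here is a minimal sketch of how a predict request to the TF Serving REST API is usually shaped. The host, the port 8501 (the one conventionally published by the Docker image), the model name `my_model`, and the helper name `build_predict_request` are all assumptions for illustration, not values taken from my actual setup. As far as I understand, a 404 from this endpoint tends to point at the path (model name, version, or a missing `:predict` suffix) rather than at the JSON payload.

```python
import json


def build_predict_request(host, model_name, instances, version=None, port=8501):
    """Return (url, body) for a TF Serving REST predict call.

    Hypothetical helper: it only builds the request and sends nothing.
    """
    base = f"http://{host}:{port}/v1/models/{model_name}"
    if version is not None:
        # Optional: pin a specific exported version directory (e.g. 4)
        base += f"/versions/{version}"
    url = base + ":predict"
    # Row format: one entry in "instances" per input example
    body = json.dumps({"instances": instances})
    return url, body


# Assumed input: a single 224x224 RGB image of zeros as nested lists
dummy_image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
url, body = build_predict_request("localhost", "my_model", [dummy_image], version=4)
print(url)
```

The returned `url` and `body` can then be sent with any HTTP client as a POST with a JSON content type.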

I believe this is an issue with my SavedModel, since both test files work in my tf-serving Docker container and model.predict() works in Colab.

I have been searching for a solution for the past few days but could not find any topic covering my exact issue. I feel like I have gotten close, but I just cannot find what is wrong.

My model is transfer-learned from MobileNetV2, with a few added layers.

This is what the model summary shows:

    Model: "sequential_1"
    _________________________________________________________________
     Layer (type)                        Output Shape     Param #
    =================================================================
     mobilenetv2_1.00_224 (Functional)   (None, 1280)     2257984
     dense_3 (Dense)                     (None, 512)      655872
     dropout_2 (Dropout)                 (None, 512)      0
     dense_4 (Dense)                     (None, 256)      131328
     dropout_3 (Dropout)                 (None, 256)      0
     dense_5 (Dense)                     (None, 5)        1285
    =================================================================
    Total params: 3,046,469
    Trainable params: 788,485
    Non-trainable params: 2,257,984

I saved it (after restoring from a checkpoint) with this code:

    import os
    import tensorflow as tf

    # `model` is the trained Keras model restored from the checkpoint
    MODEL_DIR = '/my_model'
    version = 4
    export_path = os.path.join(MODEL_DIR, str(version))

    tf.keras.models.save_model(
        model,
        export_path,
        overwrite=True,
        include_optimizer=True,
        save_format=None,
        signatures=None,
        options=None
    )

    # Reload the export and inspect its serving signature
    loaded = tf.saved_model.load(export_path)
    print(list(loaded.signatures.keys()))  # ["serving_default"]
    infer = loaded.signatures["serving_default"]
    print(infer.structured_outputs)

I have also tried tf.saved_model.save(), but that produced the same results.

The print statements above output:

    ['serving_default']
    {'dense_5': TensorSpec(shape=(None, 5), dtype=tf.float32, name='dense_5')}

I have noticed that in other implementations the output is usually named ‘prediction’, whereas mine is named ‘dense_5’.
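As far as I understand the REST API's row format, when a signature has a single output the tensor name (here `dense_5`) is omitted and the results come back under a `predictions` key, so the output name may not matter to the client. A minimal sketch of reading such a response follows; the response body is made up for illustration and the helper name `top_class` is hypothetical.

```python
import json


def top_class(response_text):
    """Return (class_index, score) for the first example in a predict response."""
    payload = json.loads(response_text)
    # Single-output signatures return a plain list of rows under "predictions"
    scores = payload["predictions"][0]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Made-up response for one image and five classes (not real model output)
sample_response = '{"predictions": [[0.1, 0.2, 0.5, 0.1, 0.1]]}'
print(top_class(sample_response))
```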

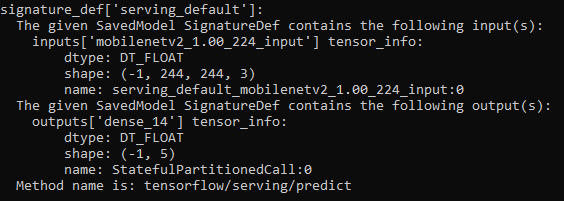

The image attached below is the saved_model_cli information

Please forgive me if this is a simple issue, as I am quite new to TensorFlow and tf-serving. Thank you in advance.