Sorry, I should have elaborated a bit more.

I’m trying to predict bounding boxes, which (I think?) makes this a regression problem, so the activation function shouldn’t be the problem.

Ah, I didn’t know VGG was used for classification.

I updated my model, like so:

```python
from tensorflow import keras

model = keras.models.Sequential([
    keras.layers.Input((IMAGE_HEIGHT, IMAGE_WIDTH, 3)),
    keras.layers.Conv2D(64, 3, activation="relu"),
    keras.layers.MaxPooling2D(),
    keras.layers.Conv2D(32, 3, activation="relu"),
    keras.layers.MaxPooling2D(),
    keras.layers.Conv2D(16, 3, activation="relu"),
    keras.layers.MaxPooling2D(),
    keras.layers.Flatten(),
    keras.layers.Dense(256, activation="relu"),
    keras.layers.Dropout(0.2),
    keras.layers.Dense(128, activation="relu"),
    keras.layers.Dense(64, activation="relu"),
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(4, activation="sigmoid"),  # four box coordinates, each between 0 and 1
])
```
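Since the output layer is `Dense(4, activation="sigmoid")`, every predicted coordinate lies between 0 and 1, so a training target outside [0, 1] can never be matched. A minimal pure-Python sketch for checking that, assuming the targets are available as a list of four-float rows (`find_out_of_range` and the example values are hypothetical, not part of my code):

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, the activation used by the final Dense(4) layer."""
    return 1.0 / (1.0 + math.exp(-x))


def find_out_of_range(targets):
    """Return (row, column) positions of target values outside [0, 1].

    Hypothetical helper: `targets` is assumed to be a list of
    4-element sequences of normalized box coordinates.
    """
    bad = []
    for i, row in enumerate(targets):
        for j, value in enumerate(row):
            if not 0.0 <= value <= 1.0:
                bad.append((i, j))
    return bad


# Even fairly large logits map to values between 0 and 1
print(sigmoid(-10.0), sigmoid(10.0))

# A target such as 1.6 (e.g. a pixel coordinate divided by too small a size) gets flagged
print(find_out_of_range([[0.1, 0.2, 0.3, 0.4], [0.5, 1.6, 0.2, 0.2]]))  # [(1, 1)]
```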

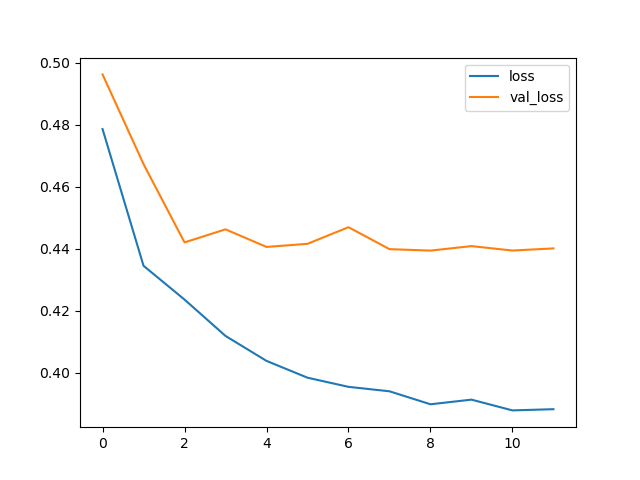

The updated model yielded a somewhat healthier learning curve.

However, I still have the same problem: the predictions are off.

This makes me think I’m doing something wrong when calculating the bounding boxes.
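To put a number on how far "off" a prediction is, one common measure is intersection over union (IoU) between the predicted and the ground-truth box (1.0 means a perfect match, 0.0 means no overlap). A pure-Python sketch for boxes in the `top, left, height, width` format used here; the function name and example boxes are made up for illustration:

```python
def iou(box_a, box_b):
    """Intersection over union of two (top, left, height, width) boxes."""
    a_top, a_left, a_h, a_w = box_a
    b_top, b_left, b_h, b_w = box_b
    # Size of the overlapping rectangle (clamped to 0 when the boxes don't overlap)
    inter_h = max(0.0, min(a_top + a_h, b_top + b_h) - max(a_top, b_top))
    inter_w = max(0.0, min(a_left + a_w, b_left + b_w) - max(a_left, b_left))
    inter = inter_h * inter_w
    union = a_h * a_w + b_h * b_w - inter
    return inter / union if union > 0 else 0.0


truth = (100, 200, 300, 400)
pred = (110, 210, 300, 400)  # same size, shifted 10 px down and 10 px right
print(round(iou(truth, pred), 3))
```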

I calculate the bboxes like this:

During training, I downscale the image using Keras:

```python
img = tf.keras.preprocessing.image.load_img(img_path, target_size=(IMAGE_HEIGHT, IMAGE_WIDTH))
```
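For reference, my understanding is that `load_img` with `target_size` stretches the image to exactly that size by default, without preserving the aspect ratio, so vertical and horizontal pixel coordinates scale by different factors. A small sketch of that mapping, assuming the CSV boxes are in original-image pixels (`scale_box` and the sizes are made up for illustration):

```python
def scale_box(box, orig_size, new_size):
    """Map a (top, left, height, width) box from original to resized pixel space.

    Sizes are (height, width) tuples. Assumes a plain stretch resize, where
    each axis is scaled independently and the aspect ratio is not preserved.
    """
    top, left, height, width = box
    sy = new_size[0] / orig_size[0]  # vertical scale factor
    sx = new_size[1] / orig_size[1]  # horizontal scale factor
    return (top * sy, left * sx, height * sy, width * sx)


# Hypothetical example: a 1000x800 (h x w) image stretched to 500x200
print(scale_box((100, 200, 300, 400), orig_size=(1000, 800), new_size=(500, 200)))
# (50.0, 50.0, 150.0, 100.0)
```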

Then, since I downscaled the image, I need to scale the bboxes as well:

```python
(h, w) = IMAGE_HEIGHT, IMAGE_WIDTH
# Divide each coordinate by the downscaled (model input) size
top = float(row["top"]) / h
left = float(row["left"]) / w
height = float(row["height"]) / h
width = float(row["width"]) / w
```

(The coordinates are in the format top, left, height, width.)
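A quick way to check whether the scaling round-trips: with a plain stretch resize, a box covers the same fraction of the image before and after resizing. Dividing original-pixel coordinates by the original size therefore gives the same targets as dividing resized-pixel coordinates by the resized size, and multiplying by the original size undoes either one. Dividing original-pixel coordinates by the resized size does not. A sketch, assuming the CSV values are in original-image pixels and using made-up sizes (`normalize` and `denormalize` are hypothetical helpers):

```python
def normalize(box, size):
    """Divide a (top, left, height, width) box by an image size given as (height, width)."""
    top, left, height, width = box
    h, w = size
    return (top / h, left / w, height / h, width / w)


def denormalize(box, size):
    """Inverse of normalize: multiply back by the same (height, width)."""
    top, left, height, width = box
    h, w = size
    return (top * h, left * w, height * h, width * w)


ORIG = (1000, 800)    # hypothetical original image (height, width)
RESIZED = (500, 200)  # hypothetical model input (height, width)

box_orig_px = (100, 200, 300, 400)   # box in original-image pixels
box_resized_px = (50, 50, 150, 100)  # the same box after stretching to RESIZED

# Consistent: both give the same fractions of the image
print(normalize(box_orig_px, ORIG))
print(normalize(box_resized_px, RESIZED))
# ...and multiplying by the original size recovers the original pixels
print(denormalize(normalize(box_orig_px, ORIG), ORIG))

# Mixed: original pixels divided by the resized size
print(normalize(box_orig_px, RESIZED))
```

With these numbers, the mixed version produces a width of 2.0, which the sigmoid output layer can never reach.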

Then, when I predict:

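```python
import numpy as np
import tensorflow as tf


def make_prediction(img):
    # Load the saved model (this happens on every call)
    model = tf.keras.models.load_model("my_model")

    img = preprocess_image(img)
    # Scale pixel values to [0, 1] and add a batch dimension
    data = np.array(img, dtype="float32") / 255.0
    data = np.expand_dims(data, axis=0)

    pred = model.predict(data)[0]

    # Map the normalized outputs back to original-image pixels;
    # pred is read in the order left, width, top, height
    left = int(pred[0] * ORIGINAL_IMAGE_WIDTH)
    width = int(pred[1] * ORIGINAL_IMAGE_WIDTH)
    top = int(pred[2] * ORIGINAL_IMAGE_HEIGHT)
    height = int(pred[3] * ORIGINAL_IMAGE_HEIGHT)

    coords = {
        "left": left,
        "top": top,
        "width": width,
        "height": height,
    }
    return coords
```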

In my mind, this would explain why the predictions are slightly off.
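One thing that may be worth ruling out is an ordering mismatch: the CSV columns are listed as `top, left, height, width`, while `make_prediction` reads `pred[0]` as `left`, `pred[1]` as `width`, and so on. If the training target vector follows the CSV order, the two disagree. A minimal sketch that derives both the encoding and the decoding from a single key tuple, assuming targets are normalized by the original image size (`BOX_KEYS`, `encode_box`, `decode_box`, and the example row are hypothetical):

```python
BOX_KEYS = ("top", "left", "height", "width")  # one place that defines the order


def _axis_sizes(orig_h, orig_w):
    # Vertical quantities scale with the height, horizontal ones with the width
    return {"top": orig_h, "height": orig_h, "left": orig_w, "width": orig_w}


def encode_box(row, orig_h, orig_w):
    """Build a normalized target vector (in BOX_KEYS order) from pixel coordinates."""
    sizes = _axis_sizes(orig_h, orig_w)
    return [float(row[k]) / sizes[k] for k in BOX_KEYS]


def decode_box(pred, orig_h, orig_w):
    """Turn a normalized vector (in BOX_KEYS order) back into pixel coordinates."""
    sizes = _axis_sizes(orig_h, orig_w)
    # Rounds to the nearest pixel instead of truncating with int()
    return {k: int(round(float(pred[i]) * sizes[k])) for i, k in enumerate(BOX_KEYS)}


row = {"top": "100", "left": "200", "height": "300", "width": "400"}  # made-up CSV row
target = encode_box(row, orig_h=1000, orig_w=800)
print(target)
print(decode_box(target, orig_h=1000, orig_w=800))
```

In `make_prediction`, the vector from `model.predict(data)[0]` could then be passed straight to `decode_box`, as long as the same `encode_box` built the training targets.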