[ not a contribution ]

Hi,

TensorFlow version: TF 2.6

LSTM layer: `tf.keras.layers.LSTM` (TensorFlow v2.14.0 API docs)

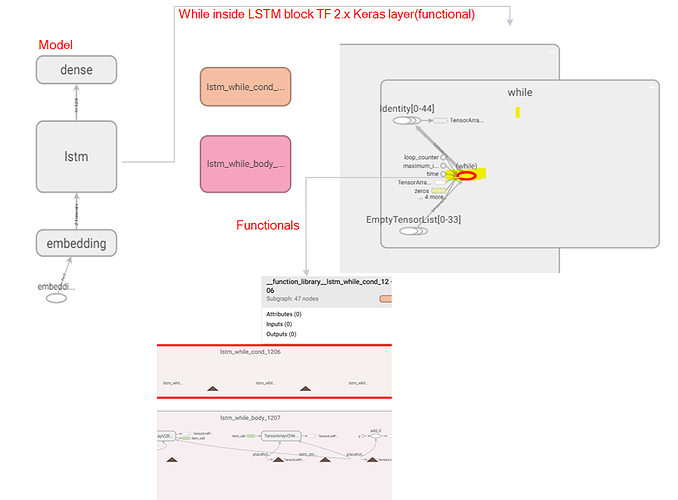

In TF 2.x, control-flow blocks, namely the “while” block inside an RNN, are implemented as functional ops: https://github.com/tensorflow/tensorflow/blob/fc4504edb1ab419ae59b0ebb9ff8d943beb61117/tensorflow/core/ops/functional_ops.cc#L117 (see the attached TensorBoard snapshot). We need to modify the sub-graph executed within the while context so that it includes some additional TF operations. What would be the recommended way to achieve this?
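
To illustrate where the loop body ends up in TF 2.x, here is a minimal sketch (assuming TF 2.x with eager execution enabled; `count_up` is a hypothetical toy function, not part of the model above). It traces a small loop with `tf.function` and inspects the resulting GraphDef: the loop appears as a single `While`/`StatelessWhile` node whose `cond` and `body` attributes name functions stored in the graph's function library, instead of the TF 1.x Enter/Exit/Switch/Merge/NextIteration ops sitting in the main graph.

```python
import tensorflow as tf


@tf.function
def count_up(n):
    # AutoGraph converts this tensor-dependent loop into tf.while_loop,
    # which TF 2.x emits as a functional While/StatelessWhile op.
    i = tf.constant(0)
    while i < n:
        i += 1
    return i


concrete = count_up.get_concrete_function(tf.TensorSpec([], tf.int32))
graph_def = concrete.graph.as_graph_def()

# The loop is a single node; its cond/body sub-graphs are referenced by name
# through function-valued attributes rather than being inlined.
while_nodes = [n for n in graph_def.node if n.op in ("While", "StatelessWhile")]
for node in while_nodes:
    print(node.op, "cond:", node.attr["cond"].func.name,
          "body:", node.attr["body"].func.name)

# The body itself is stored as a FunctionDef in the graph's function library.
body_names = {n.attr["body"].func.name for n in while_nodes}
body_defs = [f for f in graph_def.library.function
             if f.signature.name in body_names]
for fdef in body_defs:
    print(fdef.signature.name, [n.op for n in fdef.node_def])
```

Note that editing a `FunctionDef` in this serialized GraphDef would not change what the Python `tf.function` executes; the sketch only shows where the body sub-graph is stored.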

In TF 1.x, we were able to access the sub-graph within the while context and modify it to include additional operations in the while block’s execution context. In TF 2.x, we no longer seem to have access to this internal sub-graph. Could you please advise?
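
One possible approach, offered as an assumption rather than a confirmed recommendation: instead of rewriting the traced graph, add the extra operations in Python inside a custom RNN cell. `tf.keras.layers.RNN` traces the cell's `call` into the while-loop body, so anything computed there runs once per time step. `InstrumentedLSTMCell` is a hypothetical name, and `tf.clip_by_value` is only a placeholder for the additional ops.

```python
import tensorflow as tf


class InstrumentedLSTMCell(tf.keras.layers.Layer):
    """Hypothetical wrapper: runs a stock LSTMCell, then applies an extra op."""

    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        self.cell = tf.keras.layers.LSTMCell(units)

    # tf.keras.layers.RNN reads these from the cell to size its loop state.
    @property
    def state_size(self):
        return self.cell.state_size

    @property
    def output_size(self):
        return self.cell.output_size

    def build(self, input_shape):
        # Create the inner weights here, before the loop, not inside its body.
        self.cell.build(input_shape)
        self.built = True

    def call(self, inputs, states, training=None):
        output, new_states = self.cell(inputs, states, training=training)
        # Placeholder extra operation; executed once per time step.
        output = tf.clip_by_value(output, -0.5, 0.5)
        return output, new_states


model = tf.keras.Sequential([
    tf.keras.layers.Embedding(input_dim=1000, output_dim=64),
    tf.keras.layers.RNN(InstrumentedLSTMCell(128)),
    tf.keras.layers.Dense(10),
])
out = model(tf.zeros([2, 5], dtype=tf.int32))
print(out.shape)  # (2, 10)
```

A trade-off of this sketch: wrapping the cell in the generic `tf.keras.layers.RNN` means the layer no longer takes the fused cuDNN path that `tf.keras.layers.LSTM` can use on GPU.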

Example code with a Keras LSTM layer:

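```python
import tensorflow as tf

# The TF 1.x-style Session/FileWriter calls below require graph mode, so eager
# execution is disabled here (assumed to match the original setup).
tf.compat.v1.disable_eager_execution()


def test_lstm():
    tf.compat.v1.reset_default_graph()

    with tf.device('/cpu:0'):
        model = tf.keras.Sequential()

        # Add an Embedding layer expecting input vocab of size 1000, and
        # output embedding dimension of size 64. input_shape=(None,) accepts
        # variable-length sequences and lets model.summary() run.
        model.add(tf.keras.layers.Embedding(input_dim=1000, output_dim=64,
                                            input_shape=(None,)))

        # Add an LSTM layer with 128 internal units.
        model.add(tf.keras.layers.LSTM(128))

        # Add a Dense layer with 10 unit…
        model.add(tf.keras.layers.Dense(10))

        model.summary()

        session = tf.compat.v1.Session()
        # Write the default graph (which now contains the model) for TensorBoard.
        writer = tf.compat.v1.summary.FileWriter('./lstm', session.graph)
        writer.close()


test_lstm()
```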
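
For comparison, a TF 2.x-native sketch that exports the same model's graph to TensorBoard without `Session`/`FileWriter`: it wraps the model call in a `tf.function` and records its trace with `tf.summary.trace_on`/`tf.summary.trace_export`. `export_lstm_graph`, `forward`, and the `[1, 5]` dummy input are illustrative assumptions.

```python
import os

import tensorflow as tf


def export_lstm_graph(logdir="./lstm"):
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(None,), dtype="int32"),
        tf.keras.layers.Embedding(input_dim=1000, output_dim=64),
        tf.keras.layers.LSTM(128),
        tf.keras.layers.Dense(10),
    ])

    @tf.function
    def forward(tokens):
        return model(tokens)

    writer = tf.summary.create_file_writer(logdir)
    # Start recording before the first call, which is when tracing happens.
    tf.summary.trace_on(graph=True)
    forward(tf.zeros([1, 5], dtype=tf.int32))
    with writer.as_default():
        tf.summary.trace_export(name="lstm_graph", step=0)
    writer.flush()
    return forward


forward = export_lstm_graph("./lstm")
print(os.listdir("./lstm"))  # events.out.tfevents.* file(s) for TensorBoard
```

The exported graph should show the LSTM's loop as a functional while op, in line with the TensorBoard snapshot described above.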

LSTM layer with conditionals:

Thanks,

Sangeetha