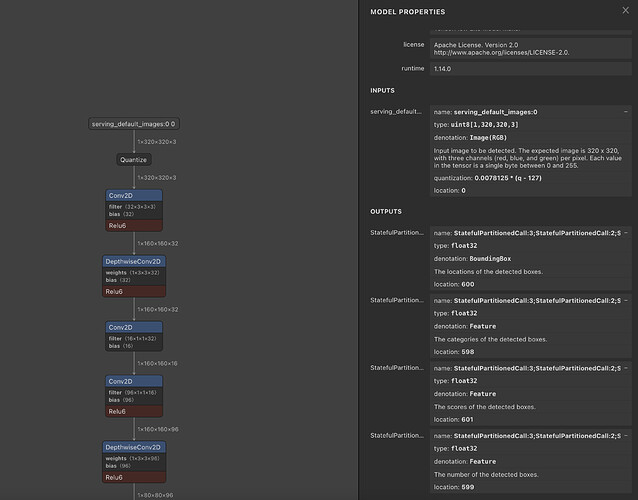

I viewed the two files in Netron. They look exactly the same.

BUT when you print the details of the output tensors you get:

Your file:

[{‘name’: ‘StatefulPartitionedCall:3;StatefulPartitionedCall:2;StatefulPartitionedCall:1;StatefulPartitionedCall:02’, ‘index’: 600, ‘shape’: array([ 1, 25], dtype=int32), ‘shape_signature’: array([ 1, 25], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}}, {‘name’: ‘StatefulPartitionedCall:3;StatefulPartitionedCall:2;StatefulPartitionedCall:1;StatefulPartitionedCall:0’, ‘index’: 598, ‘shape’: array([ 1, 25, 4], dtype=int32), ‘shape_signature’: array([ 1, 25, 4], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}}, {‘name’: ‘StatefulPartitionedCall:3;StatefulPartitionedCall:2;StatefulPartitionedCall:1;StatefulPartitionedCall:03’, ‘index’: 601, ‘shape’: array([1], dtype=int32), ‘shape_signature’: array([1], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}},

{‘name’: ‘StatefulPartitionedCall:3;StatefulPartitionedCall:2;StatefulPartitionedCall:1;StatefulPartitionedCall:01’, ‘index’: 599, ‘shape’: array([ 1, 25], dtype=int32), ‘shape_signature’: array([ 1, 25], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}}]

and the old working file:

[{‘name’: ‘StatefulPartitionedCall:31’, ‘index’: 598, ‘shape’: array([ 1, 25, 4], dtype=int32), ‘shape_signature’: array([ 1, 25, 4], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}},

{‘name’: ‘StatefulPartitionedCall:32’, ‘index’: 599, ‘shape’: array([ 1, 25], dtype=int32), ‘shape_signature’: array([ 1, 25], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}},

{‘name’: ‘StatefulPartitionedCall:33’, ‘index’: 600, ‘shape’: array([ 1, 25], dtype=int32), ‘shape_signature’: array([ 1, 25], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}},

{‘name’: ‘StatefulPartitionedCall:34’, ‘index’: 601, ‘shape’: array([1], dtype=int32), ‘shape_signature’: array([1], dtype=int32), ‘dtype’: <class ‘numpy.float32’>, ‘quantization’: (0.0, 0), ‘quantization_parameters’: {‘scales’: array([], dtype=float32), ‘zero_points’: array([], dtype=int32), ‘quantized_dimension’: 0}, ‘sparsity_parameters’: {}}]

I think the order of the output arrays has changed. You can go here

and change the order to:

outputMap.put(0, outputScores);

outputMap.put(1, outputLocations);

outputMap.put(2, numDetections);

outputMap.put(3, outputClasses);

and I think your project will work again!
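One way to double-check that order is to match the tensor `index` fields between the two dumps above. This is only a sketch: the dictionaries below are trimmed copies of the printed details, and the role names for the old file (locations, classes, scores, number of detections, in that order) are my assumption based on the variable names in the Java code. It also assumes the tensor indices stay the same between the two conversions, which is true for these two files but may not hold in general.

```python
# Trimmed copies of the two dumps above: only 'index' and 'shape' are kept.
new_details = [
    {"index": 600, "shape": (1, 25)},
    {"index": 598, "shape": (1, 25, 4)},
    {"index": 601, "shape": (1,)},
    {"index": 599, "shape": (1, 25)},
]
old_details = [
    {"index": 598, "shape": (1, 25, 4)},
    {"index": 599, "shape": (1, 25)},
    {"index": 600, "shape": (1, 25)},
    {"index": 601, "shape": (1,)},
]

# ASSUMPTION: output roles of the old working file, in output order.
OLD_ROLES = ["outputLocations", "outputClasses", "outputScores", "numDetections"]


def roles_by_position(old, new, old_roles):
    """Return the role of each output of `new`, matched to `old` by tensor index."""
    role_of_tensor = {d["index"]: role for d, role in zip(old, old_roles)}
    return [role_of_tensor[d["index"]] for d in new]


new_roles = roles_by_position(old_details, new_details, OLD_ROLES)
for position, role in enumerate(new_roles):
    print(f"outputMap.put({position}, {role});")
```

With real models you could pass the lists returned by `interpreter.get_output_details()` for each file instead of the hand-copied dictionaries, since only the `index` key is read.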

I do not know why this change happened, but I think we should tag @khanhlvg and @Yuqi_Li so they can shed some light on it, or at least be informed.

If you need more help, tag me.

It finally worked with TensorFlow 2.5.0 and PyYAML version 5.1.