I am designing a program to detect objects in bird’s-eye drone images. I have trained a YOLO model on my own dataset with the classes I need (4 classes in total), and detection works fine, but one piece is missing. I want a mechanism that checks whether the landing zones, which are 2 of those classes, are available, and I don’t know how to build it. I’m using OpenCV. Here are a few examples to make this clearer.

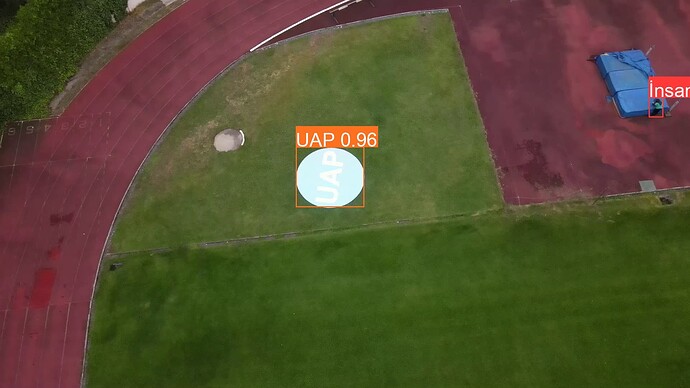

In the following image there are two objects, “UAP” and “İnsan” (human). “UAP” is our landing area, and here it is free, so I should get the feedback “Landing area is available”:

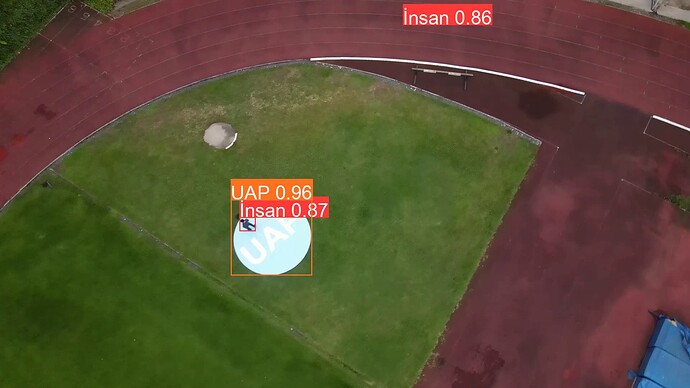

In the other image, the “İnsan” object is standing on the “UAP” region, so I should get the feedback “Landing area is not suitable”.

I want the same check and feedback to apply to the “UAİ” class as well.

It would help most if you could point me to a guide or documentation, because I want to understand the principle. Alternatively, you can post the code directly and I will study it.

My experimental, half-finished OpenCV code is here:

    import numpy as np
    import cv2

    # step 1 - load the model
    net = cv2.dnn.readNet('best.onnx')

    # step 2 - feed a 640x640 image to get predictions
    def format_yolov5(frame):
        # pad the frame with black pixels to a square, anchored at the top-left
        row, col, _ = frame.shape
        _max = max(col, row)
        result = np.zeros((_max, _max, 3), np.uint8)
        result[0:row, 0:col] = frame
        return result

    image = cv2.imread('datasets/Test/frame_014348.jpg')
    input_image = format_yolov5(image)  # making the image square
    blob = cv2.dnn.blobFromImage(input_image, 1/255.0, (640, 640), swapRB=True)
    net.setInput(blob)
    predictions = net.forward()

    # step 3 - unwrap the predictions to get the object detections
    class_ids = []
    confidences = []
    boxes = []

    output_data = predictions[0]

    # shape is (height, width, channels); the padded image is square
    image_height, image_width, _ = input_image.shape
    x_factor = image_width / 640
    y_factor = image_height / 640

    for r in range(output_data.shape[0]):  # 25200 rows for a 640x640 input
        row = output_data[r]
        confidence = row[4]
        if confidence >= 0.4:
            classes_scores = row[5:]
            _, _, _, max_indx = cv2.minMaxLoc(classes_scores)
            class_id = max_indx[1]
            if (classes_scores[class_id] > .25):
                confidences.append(confidence)
                class_ids.append(class_id)
                # row holds the box as (center x, center y, width, height)
                x, y, w, h = row[0].item(), row[1].item(), row[2].item(), row[3].item()
                left = int((x - 0.5 * w) * x_factor)
                top = int((y - 0.5 * h) * y_factor)
                width = int(w * x_factor)
                height = int(h * y_factor)
                box = np.array([left, top, width, height])
                boxes.append(box)

    class_list = []
    with open("config.txt", "r") as f:
        class_list = [cname.strip() for cname in f.readlines()]

    # non-maximum suppression: score threshold 0.25, NMS threshold 0.45
    indexes = cv2.dnn.NMSBoxes(boxes, confidences, 0.25, 0.45)

    result_class_ids = []
    result_confidences = []
    result_boxes = []

    # flatten because some OpenCV versions return nested indices
    for i in np.array(indexes).flatten():
        result_confidences.append(confidences[i])
        result_class_ids.append(class_ids[i])
        result_boxes.append(boxes[i])

    for i in range(len(result_class_ids)):
        box = result_boxes[i]
        class_id = result_class_ids[i]

        # draw the box and a filled label bar with the class name
        cv2.rectangle(image, box, (0, 255, 255), 2)
        cv2.rectangle(image, (box[0], box[1] - 20), (box[0] + box[2], box[1]), (0, 255, 255), -1)
        cv2.putText(image, class_list[class_id], (box[0], box[1] - 10), cv2.FONT_HERSHEY_SIMPLEX, .5, (0, 0, 0))

        print(box, class_id)
        # this only looks at the class id, not at what overlaps the zone
        if class_id >= 2:
            print("Landing zone detected")
        elif class_id <= 1:
            print("Not a landing zone")

    cv2.imwrite("Saving/image.png", image)
    cv2.imshow("output", image)
    cv2.waitKey()
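
To make the behaviour I described above more concrete, here is a rough sketch of the kind of check I have in mind. It is only my guess at an approach, not a tested solution: it treats a landing zone as blocked when any “İnsan” box intersects it. It assumes boxes are `[left, top, width, height]` as in `result_boxes`, and that the class names in `config.txt` are exactly `UAP`, `UAİ` and `İnsan`. The names `boxes_overlap`, `landing_zone_status`, `LANDING_CLASSES` and `OBSTACLE_CLASSES` are hypothetical helpers I made up for this example.

```python
LANDING_CLASSES = {"UAP", "UAİ"}  # assumed landing-zone class names
OBSTACLE_CLASSES = {"İnsan"}      # assumed obstacle class name


def boxes_overlap(a, b):
    """Return True if two [left, top, width, height] boxes intersect."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def landing_zone_status(boxes, names):
    """Map the index of each landing-zone detection to a feedback message."""
    status = {}
    for i, (zone_box, zone_name) in enumerate(zip(boxes, names)):
        if zone_name not in LANDING_CLASSES:
            continue
        # the zone is blocked if any obstacle box intersects it
        blocked = any(
            other_name in OBSTACLE_CLASSES and boxes_overlap(zone_box, other_box)
            for other_box, other_name in zip(boxes, names)
        )
        status[i] = ("Landing area is not suitable" if blocked
                     else "Landing area is available")
    return status


# made-up detections, only to illustrate the idea
boxes = [[100, 100, 200, 200], [150, 150, 40, 80], [500, 100, 200, 200]]
names = ["UAP", "İnsan", "UAİ"]
print(landing_zone_status(boxes, names))
```

In my script I would presumably call it with `result_boxes` and `[class_list[c] for c in result_class_ids]`. I am not sure whether a plain intersection test is strict enough, or whether it should require a minimum overlap area instead.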