### System information

- **Have I written custom code (as opposed to using a stock example script provided in TensorFlow)**: No.

- **OS Platform and Distribution (e.g., Linux Ubuntu 16.04)**: Ubuntu 16.04

- **TensorFlow installed from (source or binary)**: Source

- **TensorFlow version (use command below)**: Master

- **Python version**: 2.7.13

- **Bazel version (if compiling from source)**: 0.4.5

- **CUDA/cuDNN version**: 8.0/5.1

- **GPU model and memory**: GTX 860M

- **Exact command to reproduce**:

`bazel build -c opt --cxxopt='-std=c++11' --linkopt='-lm' --cpu=armeabi-v7a --host_crosstool_top=@bazel_tools//tools/cpp:toolchain --crosstool_top=//external:android/crosstool //tensorflow/compiler/aot:inception_v3 --verbose_failures`

### Describe the problem

I am trying to use `tfcompile` to compile a quantized inception_v3 model for Android, following the instructions in the documentation [here](https://www.tensorflow.org/performance/xla/tfcompile), but the build fails with the error below:

```
INFO: Found 1 target...
ERROR: /home/kwotsin/Android/tensorflow/tensorflow/compiler/aot/BUILD:11:1: Executing genrule //tensorflow/compiler/aot:gen_inception_v3 failed: bash failed: error executing command
(cd /home/kwotsin/.cache/bazel/_bazel_kwotsin/655cf5567faa2deb9e3725ec794eb35d/execroot/tensorflow && \
exec env - \
LD_LIBRARY_PATH=:/usr/local/cuda/lib64:/usr/local/cuda/lib64 \
PATH=/usr/local/cuda/bin:/usr/local/cuda/bin:/home/kwotsin/bin:/home/kwotsin/.local/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:/home/kwotsin/Android/Sdk/tools:/home/kwotsin/Android/Sdk/platform-tools \
PYTHON_BIN_PATH=/usr/bin/python \
PYTHON_LIB_PATH=/usr/local/lib/python2.7/dist-packages \
TF_NEED_CUDA=0 \
TF_NEED_OPENCL=0 \
/bin/bash -c 'source external/bazel_tools/tools/genrule/genrule-setup.sh; bazel-out/host/bin/tensorflow/compiler/aot/tfcompile --graph=tensorflow/compiler/aot/inception_v3.pb --config=tensorflow/compiler/aot/inception_v3.config.pbtxt --entry_point=__tensorflow_compiler_aot__inception_v3 --cpp_class=inception_v3_cpp --target_triple=armv7-none-android --out_header=bazel-out/arm-linux-androideabi-4.9-v7a-gnu-libstdcpp-opt/genfiles/tensorflow/compiler/aot/inception_v3.h --out_object=bazel-out/arm-linux-androideabi-4.9-v7a-gnu-libstdcpp-opt/genfiles/tensorflow/compiler/aot/inception_v3.o '): com.google.devtools.build.lib.shell.AbnormalTerminationException: Process terminated by signal 6.
2017-07-19 19:53:26.407268: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.
2017-07-19 19:53:26.407310: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.
2017-07-19 19:53:26.407326: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.
2017-07-19 19:53:26.407330: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.
2017-07-19 19:53:26.407333: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.
2017-07-19 19:53:26.424064: I tensorflow/compiler/xla/service/platform_util.cc:58] platform Host present with 8 visible devices
2017-07-19 19:53:26.426662: E tensorflow/core/common_runtime/executor.cc:644] Executor failed to create kernel. Not found: No registered 'Const' OpKernel for XLA_CPU_JIT devices compatible with node InceptionV3/Conv2d_1a_3x3/weights/read/_224__cf__224_quantized_const = Const[dtype=DT_QUINT8, value=Tensor<type: quint8 shape: [3,3,3,32] values: [[[97 115 118]]]...>]()
(OpKernel was found, but attributes didn't match)
. Registered: device='XLA_CPU_JIT'; dtype in [DT_FLOAT, DT_DOUBLE, DT_INT32, DT_INT64, DT_BOOL]
device='XLA_GPU_JIT'; dtype in [DT_FLOAT, DT_DOUBLE, DT_INT32, DT_INT64, DT_BOOL]
device='CPU'
[[Node: InceptionV3/Conv2d_1a_3x3/weights/read/_224__cf__224_quantized_const = Const[dtype=DT_QUINT8, value=Tensor<type: quint8 shape: [3,3,3,32] values: [[[97 115 118]]]...>]()]]
2017-07-19 19:53:26.429093: F tensorflow/compiler/aot/tfcompile_main.cc:154] Non-OK-status: status status: Not found: No registered 'Const' OpKernel for XLA_CPU_JIT devices compatible with node InceptionV3/Conv2d_1a_3x3/weights/read/_224__cf__224_quantized_const = Const[dtype=DT_QUINT8, value=Tensor<type: quint8 shape: [3,3,3,32] values: [[[97 115 118]]]...>]()
(OpKernel was found, but attributes didn't match)
. Registered: device='XLA_CPU_JIT'; dtype in [DT_FLOAT, DT_DOUBLE, DT_INT32, DT_INT64, DT_BOOL]
device='XLA_GPU_JIT'; dtype in [DT_FLOAT, DT_DOUBLE, DT_INT32, DT_INT64, DT_BOOL]
device='CPU'
[[Node: InceptionV3/Conv2d_1a_3x3/weights/read/_224__cf__224_quantized_const = Const[dtype=DT_QUINT8, value=Tensor<type: quint8 shape: [3,3,3,32] values: [[[97 115 118]]]...>]()]]
/bin/bash: line 1: 6904 Aborted (core dumped) bazel-out/host/bin/tensorflow/compiler/aot/tfcompile --graph=tensorflow/compiler/aot/inception_v3.pb --config=tensorflow/compiler/aot/inception_v3.config.pbtxt --entry_point=__tensorflow_compiler_aot__inception_v3 --cpp_class=inception_v3_cpp --target_triple=armv7-none-android --out_header=bazel-out/arm-linux-androideabi-4.9-v7a-gnu-libstdcpp-opt/genfiles/tensorflow/compiler/aot/inception_v3.h --out_object=bazel-out/arm-linux-androideabi-4.9-v7a-gnu-libstdcpp-opt/genfiles/tensorflow/compiler/aot/inception_v3.o
Target //tensorflow/compiler/aot:inception_v3 failed to build
INFO: Elapsed time: 0.339s, Critical Path: 0.18s
```
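The part of the log that seems to matter is the mismatch between the failing node's `dtype` and the dtypes listed as registered for `XLA_CPU_JIT`. As a rough illustration, the sketch below pulls both out of an excerpt of the error text using only the Python standard library. The helper names `requested_dtype` and `registered_dtypes` are hypothetical and not part of TensorFlow, and the regular expressions assume the message layout shown in this log.

```python
import re

# Excerpt of the tfcompile error, copied from the log in this report.
ERROR_TEXT = """Not found: No registered 'Const' OpKernel for XLA_CPU_JIT devices compatible with node InceptionV3/Conv2d_1a_3x3/weights/read/_224__cf__224_quantized_const = Const[dtype=DT_QUINT8, value=Tensor<type: quint8 shape: [3,3,3,32] values: [[[97 115 118]]]...>]()
(OpKernel was found, but attributes didn't match)
. Registered: device='XLA_CPU_JIT'; dtype in [DT_FLOAT, DT_DOUBLE, DT_INT32, DT_INT64, DT_BOOL]
device='XLA_GPU_JIT'; dtype in [DT_FLOAT, DT_DOUBLE, DT_INT32, DT_INT64, DT_BOOL]
device='CPU'
"""


def requested_dtype(text):
    """Return the dtype attribute of the node named in the error, or None."""
    match = re.search(r"\[dtype=(DT_\w+)", text)
    return match.group(1) if match else None


def registered_dtypes(text, device):
    """Return the set of dtypes the message lists as registered for `device`."""
    pattern = r"device='%s'; dtype in \[([^\]]*)\]" % re.escape(device)
    match = re.search(pattern, text)
    if not match:
        return set()
    return {name.strip() for name in match.group(1).split(",")}


wanted = requested_dtype(ERROR_TEXT)
supported = registered_dtypes(ERROR_TEXT, "XLA_CPU_JIT")
is_supported = wanted in supported

# prints: DT_QUINT8 supported on XLA_CPU_JIT: False
print("%s supported on XLA_CPU_JIT: %s" % (wanted, is_supported))
```

For this log the node asks for `DT_QUINT8`, which is not among the five dtypes the message reports for the `Const` kernel on `XLA_CPU_JIT`.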

I did not expect the SSE4.1, SSE4.2, AVX, AVX2, and FMA warnings to appear, since I made sure to configure the TensorFlow installation with XLA enabled.

I created the quantized graph with the Graph Transform Tool using the following command, and the resulting graph worked exactly as expected:

```
/home/kwotsin/tensorflow/bazel-bin/tensorflow/tools/graph_transforms/transform_graph \
--in_graph=./frozen_model.pb \
--out_graph=./quantized_model.pb \
--inputs='Placeholder_only' \
--outputs='InceptionV3/Predictions/Softmax' \
--transforms='
add_default_attributes
strip_unused_nodes(type=float, shape="1,299,299,3")
fold_constants(ignore_errors=true)
fold_batch_norms
fold_old_batch_norms
quantize_weights
strip_unused_nodes
sort_by_execution_order'
```
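To narrow down whether `quantize_weights` is the step that introduces the unsupported nodes, one option is to generate the same command with and without that transform and run `tfcompile` on each output. The sketch below only assembles the argument lists and does not run anything. The function name `transform_graph_args` and the output path `./float_model.pb` are made up for illustration; the binary path, node names, and transform list are copied from the command above.

```python
# Path to the Graph Transform Tool binary, as used in this report.
BINARY = ("/home/kwotsin/tensorflow/bazel-bin/tensorflow/tools/"
          "graph_transforms/transform_graph")

# Transform list from the command in this report, in the same order.
TRANSFORMS = [
    "add_default_attributes",
    'strip_unused_nodes(type=float, shape="1,299,299,3")',
    "fold_constants(ignore_errors=true)",
    "fold_batch_norms",
    "fold_old_batch_norms",
    "quantize_weights",
    "strip_unused_nodes",
    "sort_by_execution_order",
]


def transform_graph_args(in_graph, out_graph, transforms):
    """Build an argv list for transform_graph (hypothetical helper).

    Shell quotes are dropped because each list element is passed to the
    program as a single argument.
    """
    return [
        BINARY,
        "--in_graph=" + in_graph,
        "--out_graph=" + out_graph,
        "--inputs=Placeholder_only",
        "--outputs=InceptionV3/Predictions/Softmax",
        "--transforms=" + " ".join(transforms),
    ]


# Variant used in this report, including quantize_weights.
quantized_args = transform_graph_args(
    "./frozen_model.pb", "./quantized_model.pb", TRANSFORMS)

# Assumed comparison variant: identical except quantize_weights is skipped.
float_only = [t for t in TRANSFORMS if t != "quantize_weights"]
float_args = transform_graph_args(
    "./frozen_model.pb", "./float_model.pb", float_only)

print(" ".join(float_args))
```

Either list could then be handed to `subprocess.call`. If the float-only output compiles while the quantized one fails, that would point at the quantized constants rather than at the other transforms.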

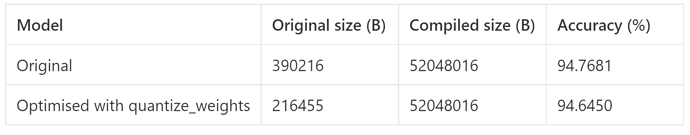

Is XLA AOT compilation of quantized models currently supported? When I built with a frozen, non-quantized graph instead, I got the expected output: a C++ object file and a header file. It would be nice if XLA AOT compilation could be combined with a quantized model to get the maximum level of mobile optimization.