arXiv: The Efficiency Misnomer (Google Research)

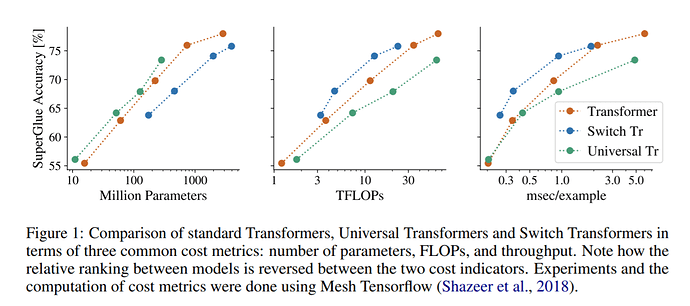

Model efficiency is a critical aspect of developing and deploying machine learning models. Inference time and latency directly affect the user experience, and some applications have hard requirements. In addition to inference costs, model training also has direct financial and environmental impacts. Although there are numerous well-established metrics (cost indicators) for measuring model efficiency, researchers and practitioners often assume that these metrics are correlated with each other and report only a few of them. In this paper, we thoroughly discuss common cost indicators, their advantages and disadvantages, and how they can contradict each other. We demonstrate how incomplete reporting of cost indicators can lead to partial conclusions and a blurred or incomplete picture of the practical considerations of different models. We further present suggestions to improve reporting of efficiency metrics.

A primer on cost indicators

One of the main considerations in designing neural network architectures is the quality-cost tradeoff… In almost all cases, the more computational budget is given to a method, the better the quality of its outcome will be. To account for such a trade-off, several cost indicators are used in the machine learning literature and its applications to showcase the efficiency of different models. These indicators take different points of view on computational cost.

FLOPs: A widely used proxy for the computational cost of a model is the number of floating-point multiplication-and-addition operations… As an alternative to FLOPs, the number of multiply-accumulate (MAC) operations, each counted as a single unit of operation, is also used in the literature (Johnson, 2018). Reported FLOPs are usually calculated using theoretical values. Note that theoretical FLOPs ignore practical factors, like which parts of the model can be parallelized.
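To make the distinction between FLOPs and multiply-accumulates concrete, here is a minimal sketch that counts both for a single dense (fully connected) layer. The function names are hypothetical, the bias term is ignored, and the code assumes the common convention that one multiply-accumulate equals two floating-point operations (one multiply plus one add); some papers report multiply-accumulates under the name "FLOPs", so it is worth checking which convention a reported number follows.

```python
def dense_layer_macs(batch_size: int, in_features: int, out_features: int) -> int:
    """Theoretical multiply-accumulate count for y = x @ W (bias ignored).

    Each of the batch_size * out_features outputs is a dot product over
    in_features terms, i.e. in_features multiply-accumulates.
    """
    return batch_size * in_features * out_features


def dense_layer_flops(batch_size: int, in_features: int, out_features: int) -> int:
    """Theoretical FLOPs, assuming 1 multiply-accumulate = 2 FLOPs."""
    return 2 * dense_layer_macs(batch_size, in_features, out_features)


if __name__ == "__main__":
    # Hypothetical layer sizes, chosen only for illustration.
    macs = dense_layer_macs(batch_size=1, in_features=512, out_features=2048)
    flops = dense_layer_flops(batch_size=1, in_features=512, out_features=2048)
    print(f"multiply-accumulates: {macs:,}  FLOPs: {flops:,}")
```

These counts are theoretical: they say nothing about how much of the work the hardware can run in parallel, which is why the same FLOP count can map to very different runtimes.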

Number of Parameters: The number of trainable parameters is also used as an indirect indicator of computational complexity as well as memory usage (during inference)… Many research works that study the scaling law…, especially in the NLP domain, use the number of parameters as the primary cost indicator…

Speed: Speed is one of the most informative indicators for comparing the efficiency of different models… In some setups, when measuring speed, the cost of the “pipeline” is also taken into account, which better reflects the efficiency in a real-world scenario. Note that speed strongly depends on hardware and implementation, so keeping the hardware fixed or normalizing by the amount of resources used is key for a fair comparison. Speed is often reported in various forms:

• Throughput refers to the number of examples (or tokens) that are processed within a specific period of time, e.g., “examples (or tokens) per second”.

• Latency usually refers to the inference time (forward pass) of the model given an example or batch of examples, and is usually presented as “seconds per forward pass”. The main point about latency is that, compared to throughput, it ignores parallelism introduced by batching examples. As an example, when processing a batch of 100 examples in 1 second, throughput is 100 examples per second, while latency is 1 second. Thus, latency is an important factor for real-time systems that require user input.

• Wall-clock time/runtime measures the time spent to process a fixed set of examples by the model. Usually, this is used to measure the training cost, for instance the total training time up to convergence.

• Pipeline bubble is the time that computing devices are idle at the start and end of every batch… This indirectly measures the speed of the non-pipeline parts of the process.

• Memory Access Cost (MAC) corresponds to the number of memory accesses. It typically makes up a large portion of runtime and is the actual bottleneck when running on modern platforms with strong computational power such as GPUs and TPUs…
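The difference between throughput and latency in the list above can be sketched in a few lines. The helper names (`SpeedReport`, `speed_report`, `time_forward_pass`) are hypothetical, and the timing loop is an assumption about how one might measure a forward pass on a CPU; on accelerators with asynchronous dispatch, the callable would also need to wait for the device to finish before returning.

```python
import time
from typing import Callable, NamedTuple


class SpeedReport(NamedTuple):
    latency_s: float         # seconds per forward pass (one batch)
    throughput_per_s: float  # examples processed per second


def speed_report(batch_size: int, seconds_per_batch: float) -> SpeedReport:
    """Derive latency and throughput from one batch size and its measured time."""
    if batch_size <= 0 or seconds_per_batch <= 0:
        raise ValueError("batch_size and seconds_per_batch must be positive")
    return SpeedReport(
        latency_s=seconds_per_batch,
        throughput_per_s=batch_size / seconds_per_batch,
    )


def time_forward_pass(
    forward: Callable[[], object],
    batch_size: int,
    repeats: int = 10,
    warmup: int = 2,
) -> SpeedReport:
    """Time `forward` (assumed to process one batch) and average over repeats."""
    for _ in range(warmup):  # warm-up runs are excluded from the measurement
        forward()
    start = time.perf_counter()
    for _ in range(repeats):
        forward()
    elapsed = time.perf_counter() - start
    return speed_report(batch_size, elapsed / repeats)


if __name__ == "__main__":
    # The example from the text: a batch of 100 examples processed in 1 second.
    report = speed_report(batch_size=100, seconds_per_batch=1.0)
    print(report.throughput_per_s, "examples/s;", report.latency_s, "s latency")
```

Increasing the batch size typically raises throughput while also raising latency, which is why the two numbers are best reported together, on fixed hardware.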

…