Pathways Autoregressive Text-to-Image model (Parti)

Site: https://parti.research.google/

Research paper: https://arxiv.org/abs/2206.10789

A blog post about Parti and Imagen: "How AI creates photorealistic images from text"

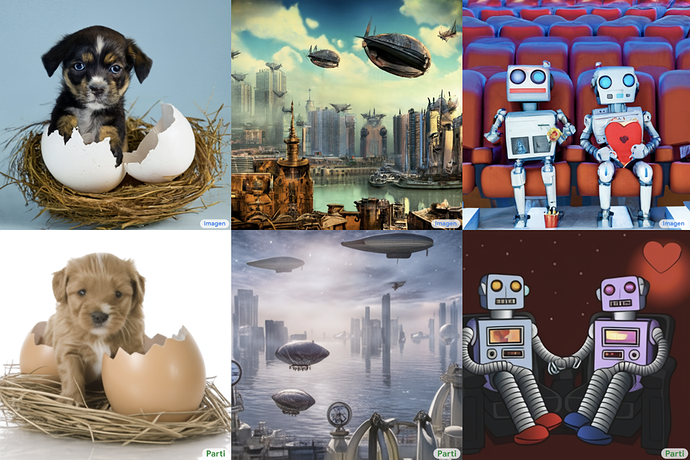

… an autoregressive text-to-image generation model that achieves high-fidelity photorealistic image generation and supports content-rich synthesis involving complex compositions and world knowledge. Recent advances with diffusion models for text-to-image generation, such as Google’s Imagen, have also shown impressive capabilities and state-of-the-art performance on research benchmarks. Parti and Imagen are complementary in exploring two different families of generative models – autoregressive and diffusion, respectively – opening exciting opportunities for combinations of these two powerful models.

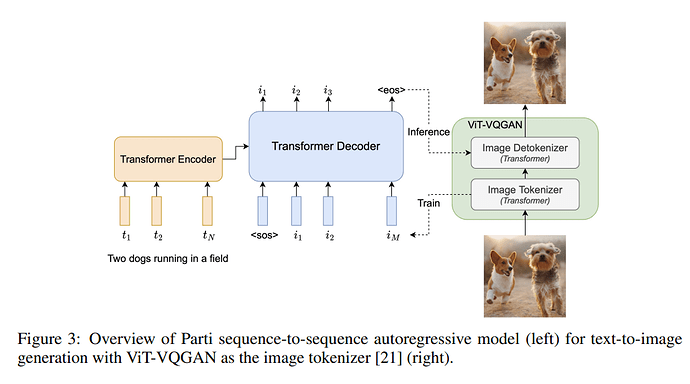

Parti treats text-to-image generation as a sequence-to-sequence modeling problem, analogous to machine translation – this allows it to benefit from advances in large language models, especially capabilities that are unlocked by scaling data and model sizes. In this case, the target outputs are sequences of image tokens instead of text tokens in another language. Parti uses the powerful image tokenizer, ViT-VQGAN, to encode images as sequences of discrete tokens, and takes advantage of its ability to reconstruct such image token sequences as high quality, visually diverse images.
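To make "sequences of discrete tokens" concrete, here is a minimal sketch of vector quantization by nearest-codebook lookup. It is not ViT-VQGAN: the tiny codebook, the 2-d patch vectors, and the names `quantize` and `dequantize` are hypothetical, chosen only to illustrate the general idea of mapping continuous patch features to token ids and back.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(patch_vectors, codebook):
    """Map each patch vector to the index of its nearest codebook entry."""
    return [
        min(range(len(codebook)), key=lambda i: squared_distance(v, codebook[i]))
        for v in patch_vectors
    ]


def dequantize(tokens, codebook):
    """Look each token id back up in the codebook (a lossy reconstruction)."""
    return [codebook[t] for t in tokens]


# Hypothetical 4-entry codebook and three "patch" feature vectors.
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
patches = [(0.1, 0.2), (0.9, 0.8), (0.2, 0.9)]

tokens = quantize(patches, codebook)
reconstruction = dequantize(tokens, codebook)
print(tokens)          # [0, 3, 2]
print(reconstruction)  # [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
```

In a learned tokenizer the patch vectors would come from an encoder network and the reconstruction would pass through a decoder network; both are omitted here.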

Parti is implemented in Lingvo and scaled with GSPMD on TPU v4 hardware for both training and inference, which allowed us to train a 20B parameter model that achieves record performance on multiple benchmarks.

From the paper:

… Our approach is simple: First, Parti uses a Transformer-based image tokenizer, ViT-VQGAN, to encode images as sequences of discrete tokens. Second, we achieve consistent quality improvements by scaling the encoder-decoder Transformer model up to 20B parameters, with a new state-of-the-art zero-shot FID score of 7.23 and finetuned FID score of 3.22 on MS-COCO. Our detailed analysis on Localized Narratives as well as PartiPrompts (P2), a new holistic benchmark of over 1600 English prompts, demonstrate the effectiveness of Parti across a wide variety of categories and difficulty aspects. We also explore and highlight limitations of our models in order to define and exemplify key areas of focus for further improvements…

Similar to DALL-E [2], CogView [3], and Make-A-Scene [10], Parti is a two-stage model, composed of an image tokenizer and an autoregressive model, as highlighted in Figure 3. The first stage involves training a tokenizer that turns an image into a sequence of discrete visual tokens for training and reconstructs an image at inference time. The second stage trains an autoregressive sequence-to-sequence model that generates image tokens from text tokens.
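The second stage can be pictured as a loop that emits one image token at a time, each conditioned on the text tokens and on the image tokens generated so far. The sketch below is a toy illustration under stated assumptions: `generate_image_tokens` and `toy_logits` are hypothetical names, the "model" is a hand-written stand-in rather than a Transformer, and greedy argmax is used only to keep the example deterministic, not as a claim about how Parti decodes.

```python
def generate_image_tokens(text_tokens, next_token_logits, num_image_tokens):
    """Autoregressively build a sequence of image tokens from text tokens."""
    image_tokens = []
    for _ in range(num_image_tokens):
        # The model sees the full text plus every image token emitted so far.
        logits = next_token_logits(text_tokens, image_tokens)
        # Greedy choice: index of the largest logit.
        image_tokens.append(max(range(len(logits)), key=logits.__getitem__))
    return image_tokens


def toy_logits(text_tokens, image_tokens, vocab_size=8):
    """Hypothetical stand-in for a trained model over a vocabulary of 8 tokens."""
    target = (sum(text_tokens) + len(image_tokens)) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


generated = generate_image_tokens([3, 1, 4], toy_logits, num_image_tokens=4)
print(generated)  # [0, 1, 2, 3]
```

At inference time the resulting token sequence would be handed to the first-stage tokenizer's decoder to reconstruct an image.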

The encoder-decoder architecture also decouples text encoding from image-token generation, so it is straightforward to explore warm-starting the model with a pretrained text encoder. Intuitively, a text encoder with representations based on generic language training should be more capable at handling visually-grounded prompts. We pretrain the text encoder on two datasets: the Colossal Clean Crawled Corpus (C4) [35] with BERT [36] pretraining objective, and our image-text data (see Section 4.1) with a contrastive learning objective (image encoder from the contrastive pretraining is not used). After pretraining, we continue training both encoder and decoder for text-to-image generation with softmax cross-entropy loss on a vocabulary of 8192 discrete image tokens.
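The training objective named above, softmax cross-entropy over a vocabulary of 8192 image tokens, can be sketched in a few lines. This is a plain-Python illustration, not the paper's Lingvo implementation; the function names and the averaging over positions are assumptions made for the example.

```python
import math

VOCAB_SIZE = 8192  # number of discrete image tokens, as stated in the text


def softmax_cross_entropy(logits, target):
    """Negative log-probability of `target` under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def sequence_loss(logits_per_position, targets):
    """Mean cross-entropy over all image-token positions (an assumed reduction)."""
    losses = [
        softmax_cross_entropy(logits, t)
        for logits, t in zip(logits_per_position, targets)
    ]
    return sum(losses) / len(losses)


# A model with no preference among tokens outputs identical logits everywhere.
uniform = [0.0] * VOCAB_SIZE
loss = sequence_loss([uniform, uniform], [5, 4000])
print(round(loss, 4))  # 9.0109
```

The uniform case gives ln(8192) ≈ 9.01, which is the loss of a decoder that has learned nothing about which image token comes next.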

From "How AI creates photorealistic images from text", some Imagen and Parti examples: