Closely related to:

https://tensorflow-prod.ospodiscourse.com/t/model-checkpointing-best-practices-when-using-train-step

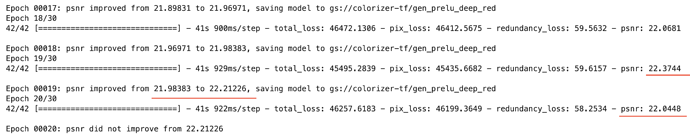

When I use this, the callback is unable to track the metric values correctly:

I notice something similar in the SimSiam example I linked in my previous post as well. Am I missing something?

Bhack

#2

Are both of these metric outputs computed before the weight update step, or both after it?

Because if not, the printed values will not be the same.
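
To illustrate the point about the weight update, here is a minimal sketch in plain Python. It is a toy one-parameter model, not the code from the original post, and the names `loss`, `grad`, `w`, and `lr` are all made up for the example. The same batch gives a different loss depending on whether it is evaluated before or after the gradient step.

```python
# Toy model y_pred = w * x with squared error (hypothetical, for illustration only).
def loss(w, x, y):
    return (w * x - y) ** 2


def grad(w, x, y):
    # Derivative of the squared error with respect to w.
    return 2 * (w * x - y) * x


w, x, y, lr = 0.0, 1.0, 2.0, 0.1

loss_before = loss(w, x, y)      # evaluated with the pre-update weight
w = w - lr * grad(w, x, y)       # one plain SGD step
loss_after = loss(w, x, y)       # same batch, post-update weight

print("before update:", loss_before)
print("after update: ", loss_after)
```

If one printed value comes from inside the training step (before the update) and the other from a separate forward pass (after it), they should not be expected to match.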

Bhack

#3

Also, have you implemented def metrics(self) or called reset_states() if you are using a custom model with a custom train loop?
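
A minimal sketch of what that could look like, assuming `tf.keras` with a subclassed model. The class name `TrackedModel`, the `loss_tracker` attribute, the mean squared error loss, and the random toy data are all assumptions made for illustration; none of them come from the original post.

```python
import numpy as np
import tensorflow as tf


class TrackedModel(tf.keras.Model):
    """Hypothetical custom model whose train_step reports a tracked loss."""

    def __init__(self):
        super().__init__()
        self.dense = tf.keras.layers.Dense(1)
        # Running mean of the loss over the batches seen in the current epoch.
        self.loss_tracker = tf.keras.metrics.Mean(name="loss")

    def call(self, inputs):
        return self.dense(inputs)

    @property
    def metrics(self):
        # Metrics listed here are reset by Keras at the start of each epoch,
        # so no manual reset call is needed when using fit().
        return [self.loss_tracker]

    def train_step(self, data):
        x, y = data
        with tf.GradientTape() as tape:
            y_pred = self(x, training=True)
            loss = tf.reduce_mean(tf.square(y - y_pred))  # assumed MSE loss
        grads = tape.gradient(loss, self.trainable_variables)
        self.optimizer.apply_gradients(zip(grads, self.trainable_variables))
        # Update the tracker and return its running mean, not the raw batch loss.
        self.loss_tracker.update_state(loss)
        return {"loss": self.loss_tracker.result()}


# Toy data, purely illustrative.
x = np.random.rand(64, 4).astype("float32")
y = x.sum(axis=1, keepdims=True)

model = TrackedModel()
model.compile(optimizer="adam")
history = model.fit(x, y, epochs=2, batch_size=16, verbose=0)
print(history.history["loss"])
```

The dictionary returned by `train_step` is what callbacks receive as logs, so a callback monitoring `"loss"` would see the epoch-level running mean here rather than the loss of the last batch.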

Refer to this example: Self-supervised contrastive learning with SimSiam. It’s all laid out there.

@Bhack you are actually right. I probably need to implement a tracker for this to work. I will do that and update here. Sorry.
