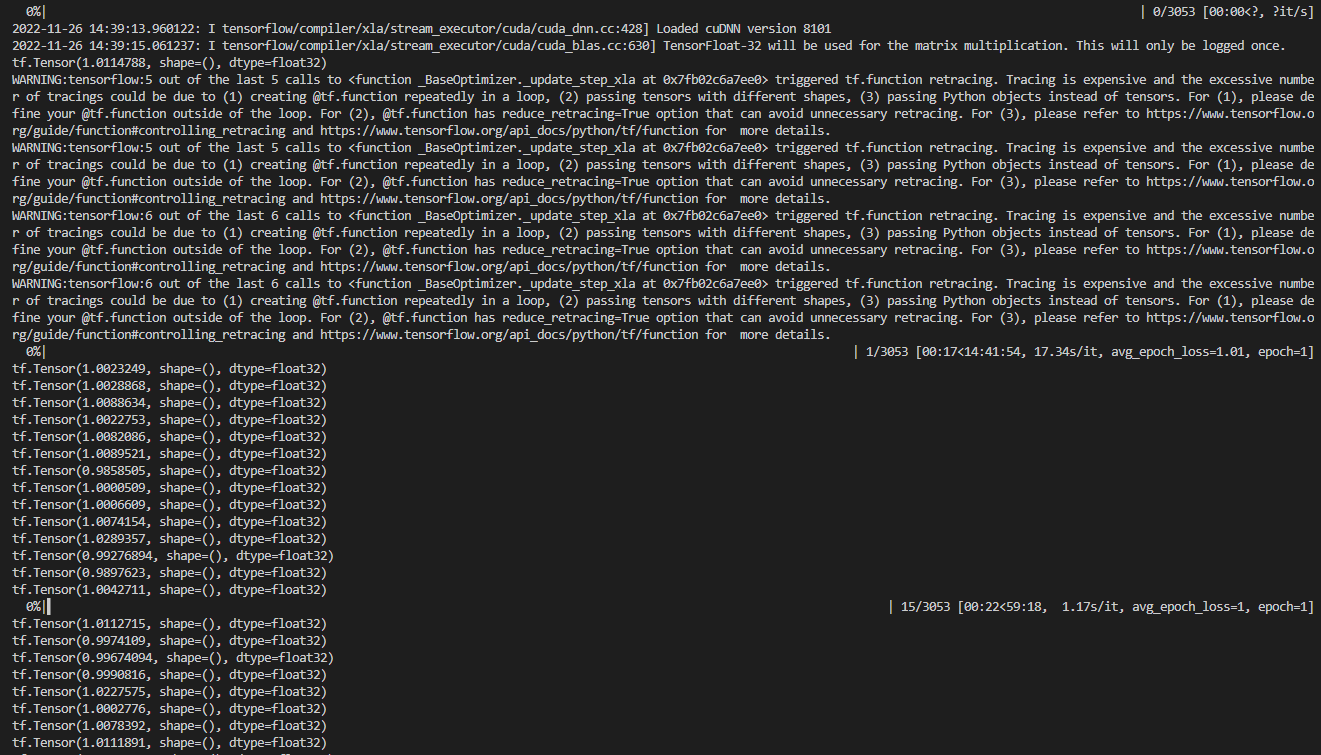

WARNING:tensorflow:5 out of the last 5 calls to <function _BaseOptimizer._update_step_xla at 0x7fb02c6a7ee0> triggered tf.function retracing. Tracing is expensive and the excessive number of tracings could be due to (1) creating @tf.function repeatedly in a loop, (2) passing tensors with different shapes, (3) passing Python objects instead of tensors. For (1), please define your @tf.function outside of the loop. For (2), @tf.function has reduce_retracing=True option that can avoid unnecessary retracing. For (3), please refer to Better performance with tf.function | TensorFlow Core and tf.function | TensorFlow v2.11.0 for more details.

Welcome to the TensorFlow Forum!

This warning occurs when a `tf.function` is retraced because the input signature (i.e., the shape or dtype) of its tensor arguments has changed between calls. Thank you!
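To make the retracing behavior concrete, here is a minimal sketch. The function names `double` and `double_fixed` and the two log lists are made up for illustration and are not taken from the original poster's code. It relies on the fact that Python side effects inside a `tf.function` run only while the function is being traced, so the length of each list counts the traces. The second function pins the signature with `input_signature`, which is one possible way to avoid retracing on shape changes; whether it applies to the optimizer case above is not something this sketch can confirm.

```python
import tensorflow as tf

trace_log = []  # appended to only during tracing, not on every call


@tf.function
def double(x):
    # This Python statement runs once per trace, so it records each (re)trace.
    trace_log.append((x.shape, x.dtype))
    return x * 2


double(tf.zeros([2]))                  # first call: traced for shape (2,), float32
double(tf.ones([2]))                   # same shape and dtype: existing trace is reused
double(tf.zeros([3]))                  # new shape: retraced
double(tf.zeros([3], dtype=tf.int32))  # new dtype: retraced
print("traces without a fixed signature:", len(trace_log))  # 3

fixed_log = []


# Declaring the leading dimension as None lets one trace cover every 1-D float32 input.
@tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
def double_fixed(x):
    fixed_log.append(x.shape)
    return x * 2


double_fixed(tf.zeros([2]))
double_fixed(tf.zeros([3]))
print("traces with input_signature:", len(fixed_log))  # 1
```

Adding a similar temporary side effect (for example a plain `print`) to your own `tf.function`s can help show which one is being retraced and with which shapes.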

Thanks for your answer.

How can I find the location of the code that causes this?

The warning doesn't give a line number, but it seems to be related to the optimizer function.

Could you please share standalone code to reproduce the above issue?

Hello, can you replicate the warning?