Hi @khanhlvg

Thanks for your tutorials and colab notebook.

Since I was experimenting with using your android_figurine tflite model in a browser, I exported it as a SAVED_MODEL, as shown below.

Because

ExportFormat.TFJS is not allowed for object detection model yet…

I’ve tried to use this flow: Model maker → SAVED_MODEL → TFJS

Step 1: Model maker → SAVED_MODEL (Success)

# model.export(export_dir='.', tflite_filename='android.tflite')  # original code

model.export(export_dir="/js_export/", export_format=[ExportFormat.SAVED_MODEL])

!zip -r /js_export/ModelFiles.zip /js_export/

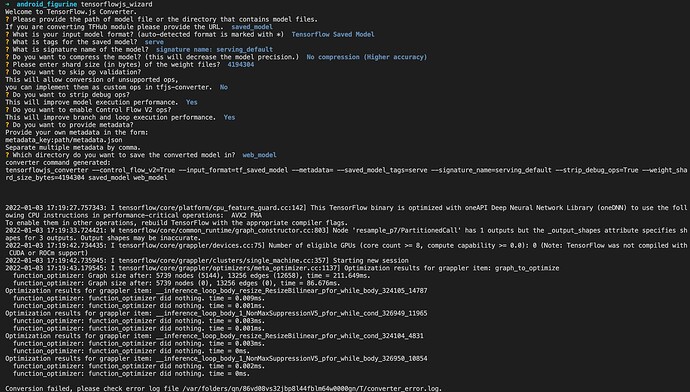

Step 2: SAVED_MODEL → TFJS (Failed)
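The traceback below comes from the interactive tensorflowjs wizard. For anyone who wants to reproduce this without the wizard, a non-interactive call might look roughly like the sketch below. The two paths are assumptions (Model Maker usually writes the SavedModel to a saved_model subfolder of the export directory, and the web_model output folder is just a name picked for illustration), and build_convert_command is a hypothetical helper, not part of any library.

```python
import subprocess


def build_convert_command(saved_model_dir, output_dir):
    """Build a tensorflowjs_converter call for SavedModel -> TFJS graph model."""
    return [
        "tensorflowjs_converter",
        "--input_format=tf_saved_model",
        "--output_format=tfjs_graph_model",
        "--signature_name=serving_default",
        "--saved_model_tags=serve",
        saved_model_dir,   # positional: input SavedModel directory
        output_dir,        # positional: output directory for model.json + shards
    ]


# Assumed paths; adjust to wherever the export actually landed.
cmd = build_convert_command("/js_export/saved_model", "/js_export/web_model")
print(" ".join(cmd))

# To actually run the conversion (requires `pip install tensorflowjs`):
# subprocess.run(cmd, check=True)
```

With this model I would expect the same ValueError as in the log below, since the unsupported-op check runs before the graph is optimized.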

Error log

    Traceback (most recent call last):
      File "/Users/judepark/Sites/TensorFlow/.direnv/python-3.6.8/lib/python3.6/site-packages/tensorflowjs/converters/wizard.py", line 619, in run
        converter.convert(arguments)
      File "/Users/judepark/Sites/TensorFlow/.direnv/python-3.6.8/lib/python3.6/site-packages/tensorflowjs/converters/converter.py", line 804, in convert
        weight_shard_size_bytes, metadata_map)
      File "/Users/judepark/Sites/TensorFlow/.direnv/python-3.6.8/lib/python3.6/site-packages/tensorflowjs/converters/converter.py", line 533, in _dispatch_converter
        metadata=metadata_map)
      File "/Users/judepark/Sites/TensorFlow/.direnv/python-3.6.8/lib/python3.6/site-packages/tensorflowjs/converters/tf_saved_model_conversion_v2.py", line 771, in convert_tf_saved_model
        metadata=metadata)
      File "/Users/judepark/Sites/TensorFlow/.direnv/python-3.6.8/lib/python3.6/site-packages/tensorflowjs/converters/tf_saved_model_conversion_v2.py", line 724, in _convert_tf_saved_model
        metadata=metadata)
      File "/Users/judepark/Sites/TensorFlow/.direnv/python-3.6.8/lib/python3.6/site-packages/tensorflowjs/converters/tf_saved_model_conversion_v2.py", line 157, in optimize_graph
        ', '.join(unsupported))
    ValueError: Unsupported Ops in the model before optimization
    TensorListConcatV2
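As far as I can tell from the traceback, the converter collects the op types used in the graph, compares them against the ops TFJS supports, and raises if anything is left over. A simplified illustration of that kind of check is below. This is not the actual tensorflowjs code; find_unsupported_ops is a hypothetical helper and both op lists are made up for the example.

```python
def find_unsupported_ops(graph_ops, supported_ops):
    """Return the sorted op types that appear in the graph but not in the supported set."""
    return sorted(set(graph_ops) - set(supported_ops))


# Illustrative data only: a real graph has many more ops, and the real
# supported list ships with the tensorflowjs package.
graph_ops = ["Conv2D", "Relu", "TensorListConcatV2", "Conv2D"]
supported_ops = {"Conv2D", "Relu"}

unsupported = find_unsupported_ops(graph_ops, supported_ops)
if unsupported:
    # Same message shape as the error in the log above.
    message = ("Unsupported Ops in the model before optimization\n"
               + ", ".join(unsupported))
    print(message)
```

So the error means the exported graph contains a TensorListConcatV2 node that the converter does not recognize as a supported TFJS op.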