Hello TF-fans!

I am new to TF and Machine Learning and trying to understand the basics. For this I am using the JavaScript API.

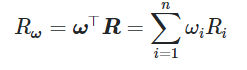

I have a vector of weights w and a vector of returns R, where each element R_i is a random variable and each weight w_i is the weight of the corresponding random variable R_i.

My question is: how do I multiply each weight in w with its corresponding random variable in R, i.e., compute w_i * R_i for every i?
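To make the intended computation concrete, here is a minimal plain-TypeScript sketch with no TensorFlow involved. It assumes that "multiply" means scaling the whole return series R_i by its weight w_i, and (as a further assumption) that the end goal is the portfolio return per period, i.e. the sum over assets of w_i * R_i[t]. The helper names `weightReturns` and `portfolioReturns` are hypothetical, and the toy numbers are made up for illustration.

```typescript
// Hypothetical helper: row i of the result is w_i * R_i, element by element.
function weightReturns(w: number[], R: number[][]): number[][] {
  if (w.length !== R.length) {
    throw new Error("need exactly one weight per asset");
  }
  return R.map((row, i) => row.map((r) => w[i] * r));
}

// Hypothetical helper: summing the weighted rows column by column gives the
// portfolio return for each period t, i.e. sum_i w_i * R_i[t].
function portfolioReturns(w: number[], R: number[][]): number[] {
  const weighted = weightReturns(w, R);
  if (weighted.length === 0) {
    return [];
  }
  return weighted[0].map((_, t) =>
    weighted.reduce((acc, row) => acc + row[t], 0)
  );
}

// Toy example: two assets (rows) and three periods (columns).
const wToy = [0.25, 0.75];
const RToy = [
  [0.4, -0.2, 0.1],
  [0.2, 0.1, -0.1],
];
console.log(weightReturns(wToy, RToy));
console.log(portfolioReturns(wToy, RToy));
```

Each weight only ever touches its own row, which is exactly the "one weight per random variable" pairing described above.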

This is my code:

```typescript
import * as tf from "@tensorflow/tfjs";

const assetsReturns = [
  {
    name: "AAPL",
    R: [
      0.37, 0.58, 0.66, -0.02, 0.11, -0.18, -0.07, 0.59, 0.22, 0.19, -0.33,
      -0.16, 0.25, 0.38, 0.06, 0.2, 0.14, 0.54, -0.04, -0.33, 0.03,
    ],
  },
  {
    name: "TSLA",
    R: [
      0.29, -0.1, 0.43, 0.22, 0.25, 0.16, -0.7, 0.4, -0.35, 0.58, 0.1, -0.25,
      -0.02, 0.1, 0.38, 0.2, 0.01, 0.04, -0.54, -0.62, -0.67,
    ],
  },
];

// Initialize random portfolio asset weights that sum to 1
function initializeWeightVector(assets: { name: string; R: number[] }[]) {
  let arr: number[] = [];
  for (let i = 0; i < assets.length; i++) {
    arr.push(Math.random());
  }

  // Normalize asset weights. `map` returns a new array, so its result must be
  // assigned back; reassigning the callback parameter `w` does not change `arr`.
  const sumOfWeights = arr.reduce((acc, w) => acc + w, 0);
  arr = arr.map((w) => w / sumOfWeights);

  console.log(arr);
  return tf.tensor(arr); // 1-D tensor of shape [assets.length]
}

let w = initializeWeightVector(assetsReturns);
// w: 1-D tensor of shape [2] whose entries sum to 1

let R = tf.tensor(assetsReturns.map((asset) => asset.R));
// R: 2-D tensor of shape [2, 21], one row of returns per asset

console.log(R.shape[0]); // 2
console.log(w.shape[0]); // 2
console.log(R.shape[1]); // 21
console.log(w.shape[1]); // undefined, because w has rank 1
```
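The shape logs show that w has rank 1 (shape [2]) while R has rank 2 (shape [2, 21]). One possible approach, sketched below under the assumption that `@tensorflow/tfjs` is installed, is to reshape w into a [2, 1] column with `expandDims(1)` so that broadcasting in `mul` scales each row of R by its own weight. Summing that result over the asset axis, or equivalently multiplying the [1, 2] row `w.expandDims(0)` by R with `tf.matMul`, gives one weighted sum per period. The small 2 x 3 tensors are made-up toy data; with the code above you would pass the real `w` and `R` instead.

```typescript
import * as tf from "@tensorflow/tfjs";

// Toy data: 2 assets (rows) x 3 periods (columns). Swap in the real R and w.
const R = tf.tensor2d([
  [0.4, -0.2, 0.1],
  [0.2, 0.1, -0.1],
]);
const w = tf.tensor1d([0.25, 0.75]); // one weight per asset, shape [2]

// Reshape w from [2] to [2, 1] so it broadcasts across R's columns:
// row i of `weighted` is w_i * R_i, shape [2, 3].
const weighted = R.mul(w.expandDims(1));

// Summing over the asset axis (axis 0) gives one value per period, shape [3].
const portfolio = weighted.sum(0);

// Same result as a matrix product: [1, 2] x [2, 3] -> [1, 3], squeezed to [3].
const portfolioViaMatMul = tf.matMul(w.expandDims(0), R).squeeze();

weighted.print();
portfolio.print();
portfolioViaMatMul.print();
```

Without the `expandDims(1)` call, `R.mul(w)` would try to broadcast the [2] vector against R's last axis (length 21 in the real data) and fail, which is why the rank-1 shape of w matters here.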

Thank you for your help!