I have trained a model based on a ResNet implementation for radio wave modulation classification, and it worked pretty well all the way to the end of training.

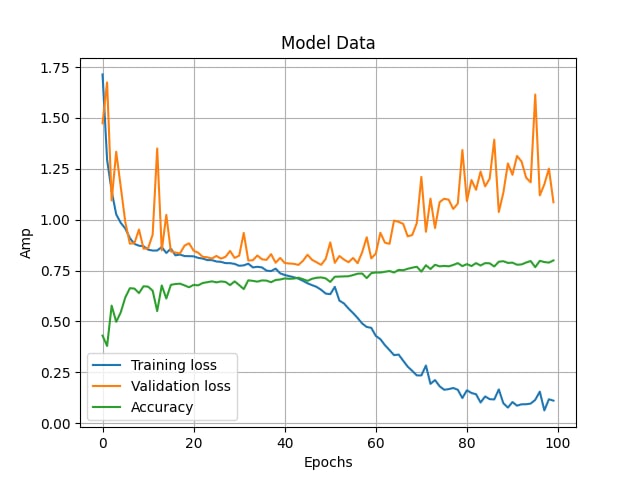

The accuracy on the validation dataset keeps improving; however, the validation loss increases.
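A toy illustration of how this pattern can arise, assuming the loss is mean cross-entropy (the post does not say which loss is used). The probabilities below are made up and not taken from the model in the figure. Accuracy only checks whether the argmax is correct, while cross-entropy also penalizes confidence, so one confidently wrong prediction can outweigh several newly correct ones.

```python
import math

def accuracy(probs, labels):
    # Fraction of samples whose highest-probability class equals the label.
    correct = sum(
        1 for p, y in zip(probs, labels)
        if max(range(len(p)), key=p.__getitem__) == y
    )
    return correct / len(labels)

def cross_entropy(probs, labels):
    # Mean negative log-probability assigned to the true class.
    return sum(-math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)

# Hypothetical 3-class softmax outputs; every sample's true class is 0.
labels = [0, 0, 0, 0]
epoch_a = [[0.5, 0.3, 0.2], [0.5, 0.3, 0.2], [0.4, 0.5, 0.1], [0.4, 0.5, 0.1]]
# Later epoch: three samples are now confidently right, one is confidently wrong.
epoch_b = [[0.9, 0.05, 0.05], [0.9, 0.05, 0.05], [0.9, 0.05, 0.05], [0.01, 0.98, 0.01]]

for name, probs in [("epoch A", epoch_a), ("epoch B", epoch_b)]:
    print(f"{name}: accuracy={accuracy(probs, labels):.2f}, "
          f"loss={cross_entropy(probs, labels):.3f}")
# accuracy goes 0.50 -> 0.75 while loss goes ~0.80 -> ~1.23
```

Growing overconfidence on the samples the model still gets wrong is one common reason validation loss climbs while validation accuracy keeps rising.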

So my question is: is the model overfitting, or is it normal for the loss to increase while the accuracy gets higher? I don't know whether I should train it longer or what to do next.