While using TensorFlow, I noticed that tensors can apparently be processed directly by NumPy. What are the implications of doing so? In memory, is the tensor automatically converted to a NumPy array before the operation, or does NumPy operate on the tensor directly?

This is an excerpt from the official TensorFlow website about NumPy compatibility with tensors:

Tensors are explicitly converted to NumPy ndarrays using their

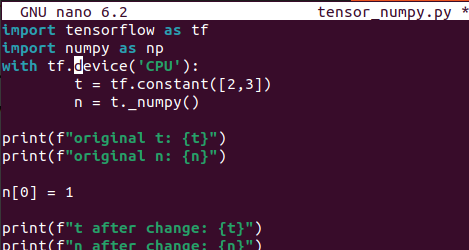

.numpy()method. These conversions are typically cheap since the array andtf.Tensorshare the underlying memory representation, if possible.

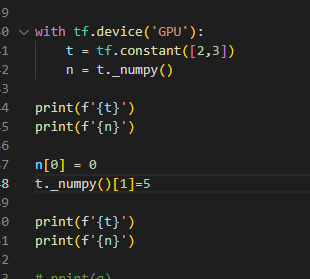

What this means is that, where the memory is shared, the two objects refer to the same buffer, so a change to one can affect the other and no data needs to be copied. This only applies when both live on the CPU, since NumPy has no accelerator backend and its arrays always sit in host memory. If the tensor is hosted in GPU/TPU memory, the conversion to NumPy includes a copy to the CPU.

Example attached:
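For readers without the attachment, here is a minimal sketch of the interoperability described above. It is not the original attachment; it assumes TensorFlow 2.x running in eager mode, and the variable names are only illustrative. Whether the buffer is actually shared or copied can depend on the TensorFlow version and the device, so the sketch only shows which type each call returns.

```python
import numpy as np
import tensorflow as tf

# A small float tensor (placed on whatever device TensorFlow picks by default).
t = tf.constant([[1.0, 2.0], [3.0, 4.0]])

# NumPy functions accept tensors: the tensor is converted to an ndarray first,
# and the result is a plain ndarray in host memory.
n_sum = np.add(t, 1)

# TensorFlow ops accept ndarrays: the array is converted to a tensor,
# and the result is a tf.Tensor.
t_back = tf.multiply(n_sum, 2)

# Explicit conversion from tensor to ndarray.
n = t.numpy()

print(type(n_sum).__name__)        # the NumPy result type
print(isinstance(t_back, tf.Tensor))
print(t.device)                    # device string of the original tensor
```

If the tensor sits on a GPU, the NumPy calls above imply a device-to-host transfer, so it may be worth keeping hot loops in TensorFlow ops only.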

If I need to run operations on the GPU at high frequency, is there a good way to optimize this? For example, does the flip operation in NumPy have a corresponding API in TensorFlow? Thank you for your reply.

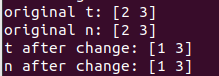

I just tried it. It seems that even on the GPU, their memory addresses are still linked, rather than a new NumPy array being copied?

Here is a link from the API docs that shows how to use the TensorFlow equivalent of a flip. Under the hood, TensorFlow tries to optimize your workflow when it can, but you as the engineer/programmer can also adopt some good practices for better optimization. For starters, you can adopt a good input pipeline structure, i.e. make sure the flow from data extraction to data transformation to model training is as seamless as possible. You could also fuse multiple smaller kernels into one larger kernel (this can be achieved with tf.function). You could also enable mixed precision and XLA. These can help.
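As a rough illustration of the flip equivalent and of tf.function, here is a minimal sketch. It assumes tf.reverse is the op in question (the linked page is not reproduced here, and tf.image.flip_left_right / tf.image.flip_up_down are alternatives for image tensors). The function name flip_and_scale is hypothetical. Note that tf.function only traces the Python code into a graph; whether kernels are actually fused depends on the graph optimizer or XLA.

```python
import numpy as np
import tensorflow as tf

# A 2x3 integer tensor: [[0, 1, 2], [3, 4, 5]]
x = tf.reshape(tf.range(6), (2, 3))

# tf.reverse flips along the listed axes, similar to np.flip(..., axis=1),
# but it stays a TensorFlow op, so no round trip through NumPy is needed.
flipped_tf = tf.reverse(x, axis=[1])
flipped_np = np.flip(x.numpy(), axis=1)

# Wrapping several small ops in tf.function traces them into one graph,
# which gives TensorFlow the chance to optimize them together.
# Optional (assumption: TF >= 2.5 with XLA available on the platform):
# use @tf.function(jit_compile=True) to request XLA compilation.
@tf.function
def flip_and_scale(t):
    t = tf.reverse(t, axis=[1])
    return t * 2

fused = flip_and_scale(x)

print(np.array_equal(flipped_tf.numpy(), flipped_np))  # True
print(fused.numpy())  # [[ 4  2  0] [10  8  6]]
```

Mixed precision is typically enabled through tf.keras.mixed_precision.set_global_policy("mixed_float16") when training Keras models; it is left out of the sketch because it changes global state.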

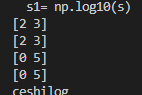

Try printing out t.device and n.device. This may help you see which device each variable is on.

PS: n.device will give you an AttributeError (at least on NumPy versions that predate the ndarray.device attribute added in NumPy 2.0). I guess this shows that it truly is a NumPy array and hence lives on the CPU.
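The check suggested above can be sketched as follows, assuming TensorFlow 2.x in eager mode and reusing the names t and n from the thread. The hasattr call is used instead of accessing n.device directly so the snippet runs on both older and newer NumPy versions.

```python
import numpy as np
import tensorflow as tf

t = tf.constant([1.0, 2.0, 3.0])
n = t.numpy()

# Every eager tensor reports the device it lives on, e.g. a string
# ending in "CPU:0" or "GPU:0" depending on the machine.
print(t.device)

# The converted object is a plain NumPy array in host memory.
print(type(n).__name__)

# Older NumPy arrays have no .device attribute (AttributeError on access);
# newer NumPy versions expose one, so check before relying on it.
print(hasattr(n, "device"))

# Lists the GPUs TensorFlow can see; an empty list means CPU only.
print(tf.config.list_physical_devices("GPU"))
```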