Hi everybody,

I’m getting started with TensorFlow Probability and I’m having some difficulty interpreting the outputs of my Bayesian neural network. I’m working on a regression problem and started from the example in the TensorFlow Probability notebook here: regression with tfp

Since I want to know the uncertainty of my network’s predictions, I went straight to example 4, Aleatoric & Epistemic Uncertainty. My code is below:

```python
import numpy as np
import tensorflow as tf
import tensorflow_probability as tfp
from tensorflow import keras
from tensorflow.keras import optimizers

tfd = tfp.distributions


def negative_loglikelihood(targets, estimated_distribution):
    # The model outputs a distribution, so the loss is its negative log-likelihood.
    return -estimated_distribution.log_prob(targets)


def posterior_mean_field(kernel_size, bias_size, dtype=None):
    n = kernel_size + bias_size  # total number of parameters (weights and biases)
    c = np.log(np.expm1(1.))  # chosen so that softplus(c) == 1

    return tf.keras.Sequential([
        # random_gaussian_initializer is defined elsewhere (not shown here).
        tfp.layers.VariableLayer(
            2 * n, dtype=dtype,
            initializer=lambda shape, dtype: random_gaussian_initializer(shape, dtype),
            trainable=True),
        tfp.layers.DistributionLambda(lambda t: tfd.Independent(
            # Normal distribution: the first n entries are the means,
            # the last n entries parametrize the scales.
            tfd.Normal(loc=t[..., :n],
                       scale=1e-5 + 0.01 * tf.nn.softplus(c + t[..., n:])),
            reinterpreted_batch_ndims=1)),
    ])


def prior(kernel_size, bias_size, dtype=None):
    n = kernel_size + bias_size
    # Prior with trainable means and a fixed unit scale.
    return tf.keras.Sequential([
        tfp.layers.VariableLayer(n, dtype=dtype),
        tfp.layers.DistributionLambda(lambda t: tfd.Independent(
            tfd.Normal(loc=t, scale=1),
            reinterpreted_batch_ndims=1)),
    ])


def build_model(param):
    # X_train is defined elsewhere (not shown); kl_weight = 1 / len(X_train)
    # scales the KL term per training example.
    model = keras.Sequential()

    for i in range(param["n_layers"]):
        num_hidden = param["n_units_l" + str(i)]
        model.add(tfp.layers.DenseVariational(
            units=num_hidden,
            make_prior_fn=prior,
            make_posterior_fn=posterior_mean_field,
            kl_weight=1 / len(X_train),
            activation="relu"))

    # Two outputs: one for the mean, one for the (pre-softplus) scale.
    model.add(tfp.layers.DenseVariational(
        units=2,
        make_prior_fn=prior,
        make_posterior_fn=posterior_mean_field,
        activation="relu",
        kl_weight=1 / len(X_train)))

    model.add(tfp.layers.DistributionLambda(
        lambda t: tfd.Normal(loc=t[..., :1],
                             scale=1e-3 + tf.math.softplus(0.01 * t[..., 1:]))))

    optimizer = optimizers.Adam(learning_rate=param["learning_rate"])

    model.compile(
        loss=negative_loglikelihood,
        optimizer=optimizer,
        metrics=[keras.metrics.RootMeanSquaredError()],
    )

    return model
```
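For reference, when the output is a Normal distribution, `negative_loglikelihood` has a closed form: 0.5·log(2πσ²) + (y − μ)²/(2σ²). The NumPy sketch below (not TFP code; `gaussian_nll` is a hypothetical helper for illustration) shows how this loss trades off the predicted scale against the residual.

```python
import numpy as np

def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of y under Normal(mu, sigma), in closed form.

    Should agree with -tfd.Normal(loc=mu, scale=sigma).log_prob(y).
    """
    return 0.5 * np.log(2 * np.pi * sigma**2) + (y - mu) ** 2 / (2 * sigma**2)

# With a perfect mean prediction, a smaller sigma lowers the loss...
print(gaussian_nll(1.0, 1.0, 0.1) < gaussian_nll(1.0, 1.0, 1.0))  # True
# ...but with a large residual, a larger sigma is preferred.
print(gaussian_nll(3.0, 1.0, 2.0) < gaussian_nll(3.0, 1.0, 0.1))  # True
```

For a fixed residual r, setting the derivative with respect to σ to zero gives σ = |r|, which is why this loss lets the network learn an input-dependent noise scale.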

As far as I can tell, my network is the same as the one in the TFP example; I just added a few hidden layers with different numbers of units. I also added a factor of 0.01 in front of the softplus in the posterior, as suggested here (Not able to get reasonable results from DenseVariational), which lets the network reach good performance.
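The constant `c = np.log(np.expm1(1.))` in `posterior_mean_field` is chosen so that softplus(c) = 1. The NumPy check below (not TFP code) shows what the 0.01 factor does to the posterior weight scale, assuming the trainable scale parameters start near 0; in the posted code they actually come from `random_gaussian_initializer`, so this is only an approximation.

```python
import numpy as np

def softplus(x):
    # Numerically stable softplus: log(1 + exp(x)).
    return np.logaddexp(0.0, x)

c = np.log(np.expm1(1.0))  # chosen so that softplus(c) == 1

t = 0.0  # assumed initial value of a posterior scale parameter
scale_with_factor = 1e-5 + 0.01 * softplus(c + t)  # about 0.01001
scale_without_factor = 1e-5 + softplus(c + t)      # about 1.00001
print(softplus(c), scale_with_factor, scale_without_factor)
```

With the factor, each weight's posterior standard deviation starts around 0.01 instead of around 1, so the network starts out close to a deterministic one; this is presumably why training converges more easily.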

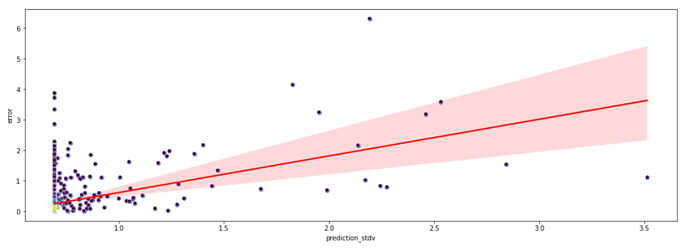

The model performs very well (less than 1% error), but I have some questions. I plotted the uncertainty (prediction_stdv) against the error to get some intuition about how meaningful the uncertainty is. I’m only looking at the aleatoric uncertainty here (.stddev() of the distribution returned by my final layer).
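Because each call to a model built from `DenseVariational` layers samples a fresh set of weights, aleatoric and epistemic uncertainty can be separated by running several forward passes. A NumPy sketch, assuming `means` and `stds` have shape `(n_passes, n_points)` and were collected per pass from something like `dist = model(X_test)` followed by `dist.mean()` and `dist.stddev()` (`X_test` and `decompose_uncertainty` are hypothetical names); combining the two parts via the law of total variance is one common choice, not necessarily what the TFP example does.

```python
import numpy as np

def decompose_uncertainty(means, stds):
    """Split predictive uncertainty into aleatoric and epistemic parts.

    means, stds: arrays of shape (n_passes, n_points) with the mean and
    stddev of the output Normal for each stochastic forward pass.
    """
    means = np.asarray(means, dtype=float)
    stds = np.asarray(stds, dtype=float)
    # Aleatoric: average predicted noise variance across weight samples.
    aleatoric_var = np.mean(stds**2, axis=0)
    # Epistemic: spread of the predicted means across weight samples.
    epistemic_var = np.var(means, axis=0)
    # Law of total variance: total = E[Var] + Var[E].
    total_std = np.sqrt(aleatoric_var + epistemic_var)
    return np.sqrt(aleatoric_var), np.sqrt(epistemic_var), total_std

# Toy example: 3 forward passes, 2 test points.
means = [[1.0, 2.0], [1.2, 2.0], [0.8, 2.0]]
stds = [[0.1, 0.5], [0.1, 0.5], [0.1, 0.5]]
alea, epi, total = decompose_uncertainty(means, stds)
print(alea, epi, total)  # epi is 0 for the second point: all passes agree
```

Calling `.stddev()` on a single forward pass gives one sample of the aleatoric term only; the epistemic part only shows up when comparing several passes.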

- My uncertainty seems to get stuck at a single value, which doesn’t seem right. This becomes more visible as the number of epochs increases. I trained my network for 2000 epochs; am I already overfitting?

- The value my uncertainty converges to is 0.6931571960449219, which is very close to ln(2) (0.69314718056). In the TensorFlow example this floor does not exist: the aleatoric uncertainty there can go below this value.
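A quick NumPy check of the ln(2) observation, based only on the posted output layers (not TFP code): the last `DenseVariational` layer uses `activation="relu"`, so `t[..., 1:]` is never negative, and the scale is `1e-3 + softplus(0.01 * t[..., 1:])`. Since softplus is increasing and softplus(0) = ln(2), that scale cannot go below 1e-3 + ln(2). `output_scale` is a hypothetical helper; the `offset=1e-5` comparison is an assumption, included because 1e-5 + ln(2) is within float32 rounding of the reported value.

```python
import numpy as np

def softplus(x):
    # Numerically stable softplus: log(1 + exp(x)).
    return np.logaddexp(0.0, x)

def output_scale(t_scale, offset=1e-3):
    # Scale of the final Normal as in the posted DistributionLambda,
    # with the relu of the last DenseVariational layer applied first.
    return offset + softplus(0.01 * np.maximum(t_scale, 0.0))

t = np.linspace(-100.0, 100.0, 5)  # hypothetical raw outputs of the scale unit
print(output_scale(t))             # never below 1e-3 + ln(2), about 0.6941
print(1e-5 + np.log(2.0))          # about 0.6931572
```

Every non-positive raw output maps to exactly the same scale, so whenever the relu clips the scale unit, the predicted standard deviation sits at the floor.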

Thanks in advance for any feedback!