Hi everybody!

Please help a beginner.

I trained a MobileNet V2 detector (in the training config, I specified that an image can contain up to 100 objects).
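The post does not say which config field was set to 100. A minimal sketch, assuming the standard TF Object Detection API `pipeline.config` field names (`max_number_of_boxes` in `train_config`, and `max_detections_per_class` / `max_total_detections` in `batch_non_max_suppression`), that scans the config text with regular expressions and reports every value it finds. The function name `detection_limits` and the sample config are hypothetical, and a regex scan ignores nesting; a proper parser would use protobuf `text_format`.

```python
import re

# Field names assumed from the TF Object Detection API pipeline.config schema.
_FIELDS = ("max_number_of_boxes", "max_detections_per_class", "max_total_detections")


def detection_limits(config_text: str) -> dict:
    """Return every integer value found for each detection-limit field.

    This is a plain regex scan, not a protobuf parser, so it ignores nesting.
    """
    limits = {}
    for field in _FIELDS:
        values = re.findall(rf"\b{field}\s*:\s*(\d+)", config_text)
        limits[field] = [int(v) for v in values]
    return limits


if __name__ == "__main__":
    # Hypothetical config excerpt, not the author's actual file.
    sample = """
    train_config {
      max_number_of_boxes: 100
    }
    post_processing {
      batch_non_max_suppression {
        max_detections_per_class: 100
        max_total_detections: 100
      }
    }
    """
    print(detection_limits(sample))
```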

I saved the model in SavedModel format and tested it. The model works great.

I converted the model to TF.js format and ran it in the browser. The model works great there too.

Next, I converted the model to TFLite format:

```
!tflite_convert --saved_model_dir=/content/gdrive/MyDrive/modelNumberSeries/tflite1/saved_model \
  --output_file=/content/gdrive/MyDrive/modelNumberSeries/tflite1/detect.tflite
```

Then I added metadata to the TFLite model and saved it:

```python
from tflite_support import metadata
from tflite_support.metadata_writers import object_detector
from tflite_support.metadata_writers import writer_utils

ObjectDetectorWriter = object_detector.MetadataWriter

_MODEL_PATH = "/content/gdrive/MyDrive/modelNumberSeries/tflite2/detect.tflite"
_LABEL_FILE = "/content/gdrive/MyDrive/modelNumberSeries/labelmap.txt"
_SAVE_TO_PATH = "/content/gdrive/MyDrive/modelNumberSeries/tflite_with_metadata/detect.tflite"

# Create the writer: input normalization mean=127.5, std=127.5, plus the label file.
writer = ObjectDetectorWriter.create_for_inference(
    writer_utils.load_file(_MODEL_PATH), [127.5], [127.5], [_LABEL_FILE])
writer_utils.save_file(writer.populate(), _SAVE_TO_PATH)

# Verify the populated metadata and associated files.
displayer = metadata.MetadataDisplayer.with_model_file(_SAVE_TO_PATH)
print("Metadata populated:")
print(displayer.get_metadata_json())
print("Associated file(s) populated:")
print(displayer.get_packed_associated_file_list())
```
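The two `[127.5]` arguments above are the input mean and standard deviation recorded in the metadata. A minimal sketch of the `(pixel - mean) / std` formula they describe, which maps pixel values in [0, 255] to [-1, 1]; the helper name `normalize` is hypothetical and this is not the Task Library's own preprocessing code.

```python
# Mean and std taken from the metadata in the post ([127.5], [127.5]).
MEAN = 127.5
STD = 127.5


def normalize(pixel: float, mean: float = MEAN, std: float = STD) -> float:
    """Apply (pixel - mean) / std, the formula NormalizationOptions describes."""
    return (pixel - mean) / std


if __name__ == "__main__":
    for p in (0, 127.5, 255):
        print(p, "->", normalize(p))  # -1.0, 0.0, 1.0 respectively
```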

```python
import os

import flatbuffers
from tflite_support import metadata as _metadata
from tflite_support import metadata_schema_py_generated as _metadata_fb

# Creates model info.
model_meta = _metadata_fb.ModelMetadataT()
model_meta.name = "SSD_Detector"
model_meta.description = (
    "Identify which of a known set of objects might be present and provide "
    "information about their positions within the given image or a video "
    "stream.")

# Creates input info.
input_meta = _metadata_fb.TensorMetadataT()
input_meta.name = "image"
input_meta.content = _metadata_fb.ContentT()
input_meta.content.contentProperties = _metadata_fb.ImagePropertiesT()
input_meta.content.contentProperties.colorSpace = (
    _metadata_fb.ColorSpaceType.RGB)
input_meta.content.contentPropertiesType = (
    _metadata_fb.ContentProperties.ImageProperties)
input_normalization = _metadata_fb.ProcessUnitT()
input_normalization.optionsType = (
    _metadata_fb.ProcessUnitOptions.NormalizationOptions)
input_normalization.options = _metadata_fb.NormalizationOptionsT()
input_normalization.options.mean = [127.5]
input_normalization.options.std = [127.5]
input_meta.processUnits = [input_normalization]
input_stats = _metadata_fb.StatsT()
input_stats.max = [255]
input_stats.min = [0]
input_meta.stats = input_stats

# Creates outputs info.
output_location_meta = _metadata_fb.TensorMetadataT()
output_location_meta.name = "location"
output_location_meta.description = "The locations of the detected boxes."
output_location_meta.content = _metadata_fb.ContentT()
output_location_meta.content.contentPropertiesType = (
    _metadata_fb.ContentProperties.BoundingBoxProperties)
output_location_meta.content.contentProperties = (
    _metadata_fb.BoundingBoxPropertiesT())
output_location_meta.content.contentProperties.index = [1, 0, 3, 2]
output_location_meta.content.contentProperties.type = (
    _metadata_fb.BoundingBoxType.BOUNDARIES)
output_location_meta.content.contentProperties.coordinateType = (
    _metadata_fb.CoordinateType.RATIO)
output_location_meta.content.range = _metadata_fb.ValueRangeT()
output_location_meta.content.range.min = 2
output_location_meta.content.range.max = 2

output_class_meta = _metadata_fb.TensorMetadataT()
output_class_meta.name = "category"
output_class_meta.description = "The categories of the detected boxes."
output_class_meta.content = _metadata_fb.ContentT()
output_class_meta.content.contentPropertiesType = (
    _metadata_fb.ContentProperties.FeatureProperties)
output_class_meta.content.contentProperties = (
    _metadata_fb.FeaturePropertiesT())
output_class_meta.content.range = _metadata_fb.ValueRangeT()
output_class_meta.content.range.min = 2
output_class_meta.content.range.max = 2
label_file = _metadata_fb.AssociatedFileT()
label_file.name = os.path.basename("/content/gdrive/MyDrive/modelNumberSeries/labelmap.txt")
label_file.description = "Label of objects that this model can recognize."
label_file.type = _metadata_fb.AssociatedFileType.TENSOR_VALUE_LABELS
output_class_meta.associatedFiles = [label_file]

output_score_meta = _metadata_fb.TensorMetadataT()
output_score_meta.name = "score"
output_score_meta.description = "The scores of the detected boxes."
output_score_meta.content = _metadata_fb.ContentT()
output_score_meta.content.contentPropertiesType = (
    _metadata_fb.ContentProperties.FeatureProperties)
output_score_meta.content.contentProperties = (
    _metadata_fb.FeaturePropertiesT())
output_score_meta.content.range = _metadata_fb.ValueRangeT()
output_score_meta.content.range.min = 2
output_score_meta.content.range.max = 2

output_number_meta = _metadata_fb.TensorMetadataT()
output_number_meta.name = "number of detections"
output_number_meta.description = "The number of the detected boxes."
output_number_meta.content = _metadata_fb.ContentT()
output_number_meta.content.contentPropertiesType = (
    _metadata_fb.ContentProperties.FeatureProperties)
output_number_meta.content.contentProperties = (
    _metadata_fb.FeaturePropertiesT())

# Creates subgraph info.
group = _metadata_fb.TensorGroupT()
group.name = "detection result"
group.tensorNames = [
    output_location_meta.name, output_class_meta.name,
    output_score_meta.name
]
subgraph = _metadata_fb.SubGraphMetadataT()
subgraph.inputTensorMetadata = [input_meta]
subgraph.outputTensorMetadata = [
    output_location_meta, output_class_meta, output_score_meta,
    output_number_meta
]
subgraph.outputTensorGroups = [group]
model_meta.subgraphMetadata = [subgraph]

# Serializes the metadata into a flatbuffer.
b = flatbuffers.Builder(0)
b.Finish(
    model_meta.Pack(b),
    _metadata.MetadataPopulator.METADATA_FILE_IDENTIFIER)
metadata_buf = b.Output()
```
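The `index = [1, 0, 3, 2]`, `BOUNDARIES`, and `RATIO` settings tell the consumer how to read each box in the location tensor. In the TFLite metadata schema, the default `BOUNDARIES` order is (left, top, right, bottom), and `index[i]` gives the tensor position of the i-th element, so `[1, 0, 3, 2]` means the tensor holds `[top, left, bottom, right]`. A minimal sketch of that interpretation, assuming normalized (`RATIO`) coordinates scaled by the image size; `to_pixel_rect` is a hypothetical helper, not the Task Library's actual implementation.

```python
from typing import Sequence, Tuple

# Value set in the post's metadata; index[i] is the tensor position of the
# i-th element in the default BOUNDARIES order (left, top, right, bottom).
INDEX = (1, 0, 3, 2)


def to_pixel_rect(box: Sequence[float], width: int, height: int,
                  index: Sequence[int] = INDEX) -> Tuple[float, float, float, float]:
    """Turn one RATIO box into (left, top, right, bottom) pixel coordinates."""
    left, top, right, bottom = (box[i] for i in index)
    return left * width, top * height, right * width, bottom * height


if __name__ == "__main__":
    # Hypothetical output [ymin, xmin, ymax, xmax] for a 400x200 image.
    print(to_pixel_rect([0.25, 0.5, 0.75, 1.0], width=400, height=200))
    # (200.0, 50.0, 400.0, 150.0)
```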

When I run the model on Android, it finds only ten objects in the photo, although there are more:

```
[
  Detection{boundingBox=RectF(265.0, 129.0, 319.0, 206.0), categories=[<Category "common" (displayName= score=0.99364007 index=22)>]},
  Detection{boundingBox=RectF(159.0, 134.0, 196.0, 192.0), categories=[<Category "1" (displayName= score=0.99094725 index=28)>]},
  Detection{boundingBox=RectF(112.0, 135.0, 149.0, 191.0), categories=[<Category "0" (displayName= score=0.9906333 index=2)>]},
  Detection{boundingBox=RectF(338.0, 134.0, 379.0, 195.0), categories=[<Category "7" (displayName= score=0.98771167 index=23)>]},
  Detection{boundingBox=RectF(69.0, 136.0, 106.0, 192.0), categories=[<Category "0" (displayName= score=0.98451096 index=2)>]},
  Detection{boundingBox=RectF(202.0, 131.0, 239.0, 191.0), categories=[<Category "0" (displayName= score=0.98021543 index=2)>]},
  Detection{boundingBox=RectF(294.0, 131.0, 335.0, 194.0), categories=[<Category "7" (displayName= score=0.9774704 index=23)>]},
  Detection{boundingBox=RectF(248.0, 131.0, 287.0, 193.0), categories=[<Category "5" (displayName= score=0.97538936 index=20)>]},
  Detection{boundingBox=RectF(224.0, 358.0, 243.0, 386.0), categories=[<Category "s2" (displayName= score=0.8897439 index=9)>]},
  Detection{boundingBox=RectF(192.0, 357.0, 211.0, 384.0), categories=[<Category "s4" (displayName= score=0.8859583 index=24)>]}
]
```
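To count and inspect detections in a printed list like the one above, a small regex-based parser can help. This is a minimal sketch that assumes the `Detection{boundingBox=RectF(...), categories=[<Category "label" (... score=... index=...)>]}` text format shown in the log; `parse_detections` is a hypothetical helper, not part of any Android or TFLite API.

```python
import re

# Matches one entry of a printed detection list in the format shown above.
_DETECTION = re.compile(
    r"RectF\(([\d.]+),\s*([\d.]+),\s*([\d.]+),\s*([\d.]+)\).*?"
    r'<Category\s*"([^"]*)".*?score=([\d.]+)\s+index=(\d+)',
    re.S,
)


def parse_detections(log: str):
    """Return (label, score, index, rect) tuples found in a printed detection list."""
    results = []
    for m in _DETECTION.finditer(log):
        rect = tuple(float(m.group(i)) for i in range(1, 5))
        results.append((m.group(5), float(m.group(6)), int(m.group(7)), rect))
    return results


if __name__ == "__main__":
    sample = (
        'Detection{boundingBox=RectF(265.0, 129.0, 319.0, 206.0), '
        'categories=[<Category "common" (displayName= score=0.99364007 index=22)>]}'
    )
    print(len(parse_detections(sample)), parse_detections(sample))
```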

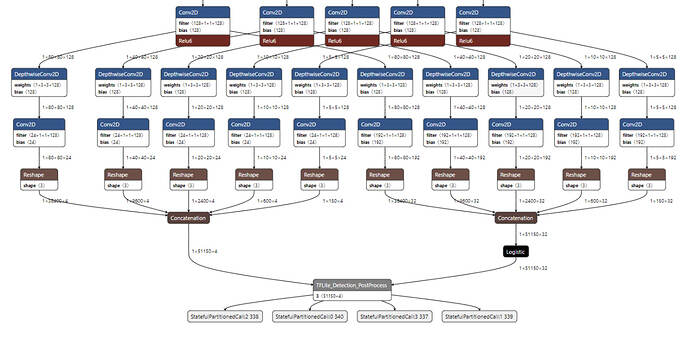

When I inspect the converted model, I also see only 10 outputs.
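One way to confirm how many detection slots are baked into the converted model is to read the output tensor shapes. A minimal sketch, assuming the usual SSD post-processing output layout (boxes `[1, N, 4]`, classes `[1, N]`, scores `[1, N]`, count `[1]`); `detection_slots` is a hypothetical helper.

```python
from typing import Iterable, Sequence


def detection_slots(output_shapes: Iterable[Sequence[int]]) -> int:
    """Return N from the boxes tensor, assumed to have shape [1, N, 4]."""
    for shape in output_shapes:
        shape = list(shape)
        if len(shape) == 3 and shape[-1] == 4:
            return int(shape[1])
    raise ValueError("no [1, N, 4] boxes tensor found")


if __name__ == "__main__":
    # Shapes as they might be reported for a model limited to 10 detections.
    print(detection_slots([[1, 10, 4], [1, 10], [1, 10], [1]]))  # 10
```

With TensorFlow installed, the real shapes can be collected with `tf.lite.Interpreter(model_path=...)`, then `allocate_tensors()`, then `[d["shape"] for d in interpreter.get_output_details()]`.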

What did I do wrong when converting?