Thank you for the reply.

First of all, the dataset. I created it myself, and it is shown in the image below.

It consists of a label column and 7 parameter columns. In more detail, columns 1, 2, and 3 are swept like nested for loops:

```python
for _ in columns1:
    for _ in columns2:
        for _ in columns3:
            ...  # measure the values in columns 4 to 7
```

The values in columns 4 to 7 are the measurements taken for each combination of columns 1 to 3.
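To make the layout concrete, here is a minimal sketch of how such a sweep could be generated, assuming three hypothetical value lists. `itertools.product` visits the combinations in the same order as the nested loops, with column 3 changing fastest and column 1 slowest. The column names `col1` to `col3` and the helper `build_sweep_frame` are illustrative only; the measured columns 4 to 7 and the label are left out because they come from the actual measurement.

```python
import itertools

import pandas as pd


def build_sweep_frame(col1_values, col2_values, col3_values):
    """Hypothetical helper: one row per (col1, col2, col3) combination.

    itertools.product yields tuples in the same order as three nested
    for loops, so col3 changes fastest and col1 slowest.
    """
    rows = itertools.product(col1_values, col2_values, col3_values)
    return pd.DataFrame(list(rows), columns=["col1", "col2", "col3"])


grid = build_sweep_frame([0, 1], [10, 20, 30], [100, 200])
print(len(grid))  # 2 * 3 * 2 = 12 rows
print(grid.head(3))
```

If every combination is measured exactly once, the number of rows is the product of the three list lengths.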

The dataset contains approximately 550,000 samples, so I believe its size is not the problem.
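Not all of those samples reach training, though. The two `train_test_split` calls in the code further down hold out 30% for testing and then 40% of the remainder for validation, so training gets roughly 0.7 × 0.6 = 42% of the rows, validation 28%, and test 30%. A minimal sketch that reproduces the same two calls on an index array (`split_sizes` is a hypothetical helper name):

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_sizes(n_samples):
    """Repeat the post's two train_test_split calls on row indices and
    return the (train, validation, test) row counts."""
    idx = np.arange(n_samples)
    # First split: 30% of all rows are held out for testing.
    train_idx, test_idx = train_test_split(idx, test_size=0.3, random_state=42)
    # Second split: 40% of the remaining rows go to validation.
    train_idx, valid_idx = train_test_split(train_idx, test_size=0.4, random_state=42)
    return len(train_idx), len(valid_idx), len(test_idx)


print(split_sizes(550_000))  # (231000, 154000, 165000)
```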

That said, I am aware that the data itself may have problems (for example, an incorrect dataset structure).

Below is the code I used.

```python
import tensorflow as tf
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from tensorflow.keras.models import Sequential
from tensorflow.keras.callbacks import ModelCheckpoint
from tensorflow.keras.layers import Dense, Flatten, Conv1D, MaxPool1D
from tensorflow.keras.losses import binary_crossentropy

# Load the dataset: column 0 is the label, columns 1-7 are the parameters.
df = pd.read_csv('./…/…/…/…/col_rx_dataset_rev1.csv')
df.head(5)

df_columns = df.columns.unique()
train_data = df[df_columns[1:]]   # the 7 parameter columns
label_data = df[df_columns[0]]    # the label column
print(label_data[:10])

# Hold out 30% for testing, then 40% of the rest for validation.
x_train, x_test, y_train, y_test = train_test_split(train_data, label_data, test_size=0.3, random_state=42)
x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.4, random_state=42)

# Simple MLP for binary classification.
model = Sequential()
model.add(Flatten(input_shape=(7, 1)))
model.add(Dense(256, activation='relu'))
model.add(Dense(128, activation='relu'))
model.add(Dense(64, activation='relu'))
model.add(Dense(64, activation='relu'))
model.add(Dense(1, activation='sigmoid'))

model.compile(optimizer='adam',
              loss='binary_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, validation_data=(x_valid, y_valid), epochs=100, batch_size=64, verbose=1)
```
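For reference, the number of trainable parameters in this MLP can be worked out by hand: a `Dense` layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases, and `Flatten` adds none. A small plain-Python sketch of that arithmetic (`dense_param_counts` is a hypothetical helper); the total should match what `model.summary()` reports for the model above.

```python
def dense_param_counts(n_inputs, units):
    """Return per-layer parameter counts for a stack of Dense layers.

    Each Dense layer has n_in * n_out weights plus n_out biases.
    """
    counts = []
    n_in = n_inputs
    for n_out in units:
        counts.append(n_in * n_out + n_out)
        n_in = n_out
    return counts


# Flatten turns each (7, 1) sample into 7 features and has no parameters.
counts = dense_param_counts(7, [256, 128, 64, 64, 1])
print(counts)       # [2048, 32896, 8256, 4160, 65]
print(sum(counts))  # 47425
```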

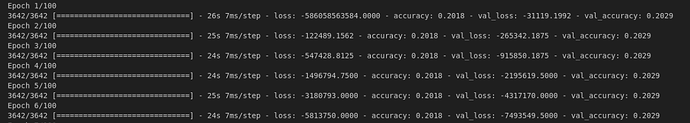

I plan to add a feature extraction layer once I have checked how much the MLP alone can learn. However, with my simple MLP, the accuracy did not change at all.
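One possible diagnostic, offered as an assumption rather than a known cause: when accuracy stays flat, it is worth checking whether it equals the majority-class rate, which would suggest the network predicts the same class for every sample. Since `binary_crossentropy` with a sigmoid output expects labels of 0 and 1, the same check can confirm the label values. A minimal NumPy sketch with a hypothetical helper name and toy labels:

```python
import numpy as np


def majority_baseline(labels):
    """Hypothetical helper: check that labels are binary 0/1 and return the
    accuracy obtained by always predicting the most frequent class."""
    y = np.asarray(labels)
    unique = set(np.unique(y).tolist())
    if not unique <= {0, 1}:
        raise ValueError(f"expected labels in {{0, 1}}, got {sorted(unique)}")
    positive_rate = float(y.mean())
    return max(positive_rate, 1.0 - positive_rate)


# Toy labels: 7 of 10 are class 0, so always predicting 0 scores 0.7.
print(majority_baseline([0, 0, 0, 1, 0, 1, 0, 0, 1, 0]))
```

With the real data, `majority_baseline(y_valid)` could be compared against the validation accuracy shown in the training log.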