Would you please help me? I want to compile my model with a recall metric, but in the HParams tab I can't see any metric other than accuracy. Here is my code:

    import tensorflow as tf
    from tensorboard.plugins.hparams import api as hp

    # recall_m (my custom recall metric) and x_train, y_train, x_test, y_test
    # are defined elsewhere and not shown here.

    HP_NUM_UNITS = hp.HParam('num_units', hp.Discrete([10, 30]))
    HP_NUM_UNITS2 = hp.HParam('num_units2', hp.Discrete([5, 15]))
    HP_OPTIMIZER = hp.HParam('optimizer', hp.Discrete(['adam', 'sgd']))

    METRIC_ACCURACY = 'recall_m'

    with tf.summary.create_file_writer('logs/hparam_tuning').as_default():
        hp.hparams_config(
            hparams=[HP_NUM_UNITS, HP_NUM_UNITS2, HP_OPTIMIZER],
            metrics=[hp.Metric(METRIC_ACCURACY, display_name='Recall')],
        )

    def train_test_model(hparams):
        model = tf.keras.models.Sequential([
            tf.keras.layers.Dense(hparams[HP_NUM_UNITS], input_dim=27, activation=tf.nn.relu),
            tf.keras.layers.Dense(hparams[HP_NUM_UNITS2], activation=tf.nn.relu),
            tf.keras.layers.Dense(1, activation=tf.nn.sigmoid)
        ])
        model.compile(
            optimizer=hparams[HP_OPTIMIZER],
            loss='binary_crossentropy',
            metrics=[recall_m])
        model.fit(x_train, y_train, epochs=10)
        _, recall = model.evaluate(x_test, y_test)
        return recall

    def run(run_dir, hparams):
        with tf.summary.create_file_writer(run_dir).as_default():
            hp.hparams(hparams)  # record the values used in this trial
            recall = train_test_model(hparams)
            tf.summary.scalar(METRIC_ACCURACY, recall, step=1)

    session_num = 0

    for num_units in HP_NUM_UNITS.domain.values:
        for num_units2 in HP_NUM_UNITS2.domain.values:
            for optimizer in HP_OPTIMIZER.domain.values:
                hparams = {
                    HP_NUM_UNITS: num_units,
                    HP_NUM_UNITS2: num_units2,
                    HP_OPTIMIZER: optimizer,
                }
                run_name = "run-%d" % session_num
                print('--- Starting trial: %s' % run_name)
                print({h.name: hparams[h] for h in hparams})
                run('logs/hparam_tuning/' + run_name, hparams)
                session_num += 1

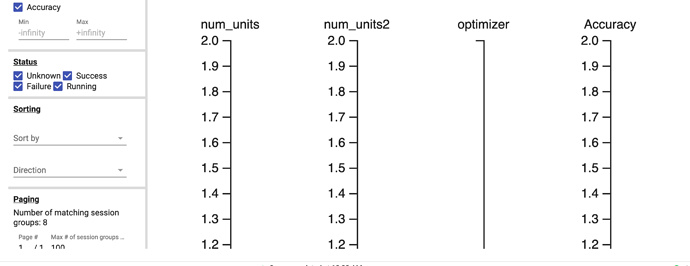

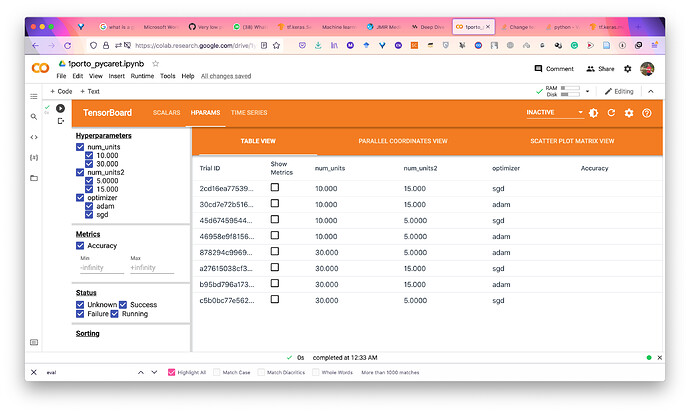

The screenshots above show what I see in TensorBoard.