Hi everyone,

For a personal project I’m trying to recreate the N-BEATS architecture in Keras. I don’t think I’m doing it correctly, but I’m not sure why.

The reference implementation I’m treating as ground truth is here: https://github.com/ElementAI/N-BEATS/blob/master/models/nbeats.py

Here’s the starter PyTorch code that I’m trying to convert:

    import torch as t
    from typing import Tuple


    class NBeatsBlock(t.nn.Module):
        def __init__(self,
                     input_size,
                     theta_size: int,
                     basis_function: t.nn.Module,
                     layers: int,
                     layer_size: int):
            super().__init__()
            # First layer maps input_size -> layer_size; the remaining layers keep layer_size.
            self.layers = t.nn.ModuleList([t.nn.Linear(in_features=input_size, out_features=layer_size)] +
                                          [t.nn.Linear(in_features=layer_size, out_features=layer_size)
                                           for _ in range(layers - 1)])
            self.basis_parameters = t.nn.Linear(in_features=layer_size, out_features=theta_size)
            self.basis_function = basis_function

        def forward(self, x: t.Tensor) -> Tuple[t.Tensor, t.Tensor]:
            block_input = x
            for layer in self.layers:
                block_input = t.relu(layer(block_input))
            basis_parameters = self.basis_parameters(block_input)
            return self.basis_function(basis_parameters)


    class NBeats(t.nn.Module):
        def __init__(self, blocks: t.nn.ModuleList):
            super().__init__()
            self.blocks = blocks

        def forward(self, x: t.Tensor, input_mask: t.Tensor) -> t.Tensor:
            # Flip along dim 1 of the input and of the mask.
            residuals = x.flip(dims=(1,))
            input_mask = input_mask.flip(dims=(1,))
            forecast = x[:, -1:]
            for i, block in enumerate(self.blocks):
                backcast, block_forecast = block(residuals)
                residuals = (residuals - backcast) * input_mask
                forecast = forecast + block_forecast
            return forecast


    class GenericBasis(t.nn.Module):
        def __init__(self, backcast_size: int, forecast_size: int):
            super().__init__()
            self.backcast_size = backcast_size
            self.forecast_size = forecast_size

        def forward(self, theta: t.Tensor):
            # Leading entries of theta are the backcast, trailing entries the forecast.
            return theta[:, :self.backcast_size], theta[:, -self.forecast_size:]

Here’s my Keras translation so far:

    class NBeatsBlock(keras.layers.Layer):
        def __init__(self,
                     theta_size: int,
                     basis_function: keras.layers.Layer,
                     layer_size: int = 4):
            super(NBeatsBlock, self).__init__()
            self.layers_ = [keras.layers.Dense(layer_size, activation = 'relu')
                            for i in range(layer_size)]
            self.basis_parameters = keras.layers.Dense(theta_size)
            self.basis_function = basis_function

        def call(self, inputs):
            x = self.layers_[0](inputs)
            for layer in self.layers_[1:]:
                x = layer(x)
            x = self.basis_parameters(x)
            return self.basis_function(x)


    class NBeats(keras.layers.Layer):
        def __init__(self,
                     blocksize: int,
                     theta_size: int,
                     basis_function: keras.layers.Layer):
            super(NBeats, self).__init__()
            self.blocks = [NBeatsBlock(theta_size = theta_size, basis_function = basis_function) for i in range(blocksize)]

        def call(self, inputs):
            residuals = K.reverse(inputs, axes = 0)
            forecast = inputs[:, -1:]
            for block in self.blocks:
                backcast, block_forecast = block(residuals)
                residuals = residuals - backcast
                forecast = forecast + block_forecast
            return forecast


    class GenericBasis(keras.layers.Layer):
        def __init__(self, backcast_size: int, forecast_size: int):
            super().__init__()
            self.backcast_size = backcast_size
            self.forecast_size = forecast_size

        def call(self, inputs):
            return inputs[:, :self.backcast_size], inputs[:, -self.forecast_size:]

If I build a model from the Keras code, it runs without errors, but I don’t think it’s constructed correctly.

Here’s a simple model:

    inputs = Input(shape = (1, ))
    nbeats = NBeats(blocksize = 4, theta_size = 7, basis_function = GenericBasis(7, 7))(inputs)
    out = keras.layers.Dense(7)(nbeats)
    model = Model(inputs, out)

My concern is that the internal NBeatsBlock layers are not actually being used in the model I just created.

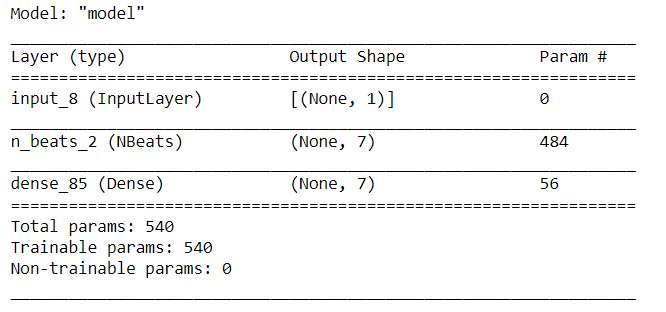

My model summary reads like this:

And as you can see there’s nothing that indicates the internal Dense layers are there.
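For comparison, here is a rough hand count of how many weights a single block should contribute if its Dense layers are being tracked. This is only a sketch under assumptions: `dense_params` and `block_params` are helper names I made up, each Dense layer is assumed to have a kernel plus a bias, and the block is assumed to see a 1-feature input as in my `Input(shape = (1, ))` model. Note that in my Keras block both the number of hidden layers and their width come from `layer_size = 4`.

```python
def dense_params(in_features: int, out_features: int) -> int:
    """Weights in one fully connected layer: kernel plus bias."""
    return in_features * out_features + out_features


def block_params(input_size: int, n_layers: int, layer_size: int, theta_size: int) -> int:
    """Hand count for one block: hidden stack plus the theta projection."""
    hidden = dense_params(input_size, layer_size)
    hidden += (n_layers - 1) * dense_params(layer_size, layer_size)
    return hidden + dense_params(layer_size, theta_size)


# Assumed values: 1 input feature, 4 hidden layers of width 4, theta_size = 7.
print(block_params(input_size=1, n_layers=4, layer_size=4, theta_size=7))  # 103
```

If the parameter count reported for the NBeats layer is zero or far below a figure like this, that would suggest the inner layers are not being picked up.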

And if I plot the model I get the following diagram:

So I don’t think I’m doing things correctly, but I’m not sure where the construction goes wrong. My guess is that there are small differences between how PyTorch and Keras work that I’m not picking up on.
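One candidate difference I can see when putting the two listings side by side: the PyTorch `forward` flips along `dims=(1,)`, while my Keras `call` passes `axes = 0` to `K.reverse`. Below is a minimal pure-Python sketch of what reversing along each axis does, with nested lists standing in for tensors. `flip_time` and `flip_batch` are made-up helper names, not part of either library.

```python
# Two series of three time steps each: rows are batch entries, columns are time.
batch = [[1, 2, 3],
         [4, 5, 6]]


def flip_time(x):
    """Reverse each series along axis 1, analogous to x.flip(dims=(1,))."""
    return [row[::-1] for row in x]


def flip_batch(x):
    """Reverse the order of the series along axis 0, leaving each series untouched."""
    return x[::-1]


print(flip_time(batch))   # [[3, 2, 1], [6, 5, 4]]
print(flip_batch(batch))  # [[4, 5, 6], [1, 2, 3]]
```

If that reading is right, my version reorders the samples in the batch instead of reversing each input window, which may be one of the places where the translation diverges.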