I apologize in advance: my English is not very good.

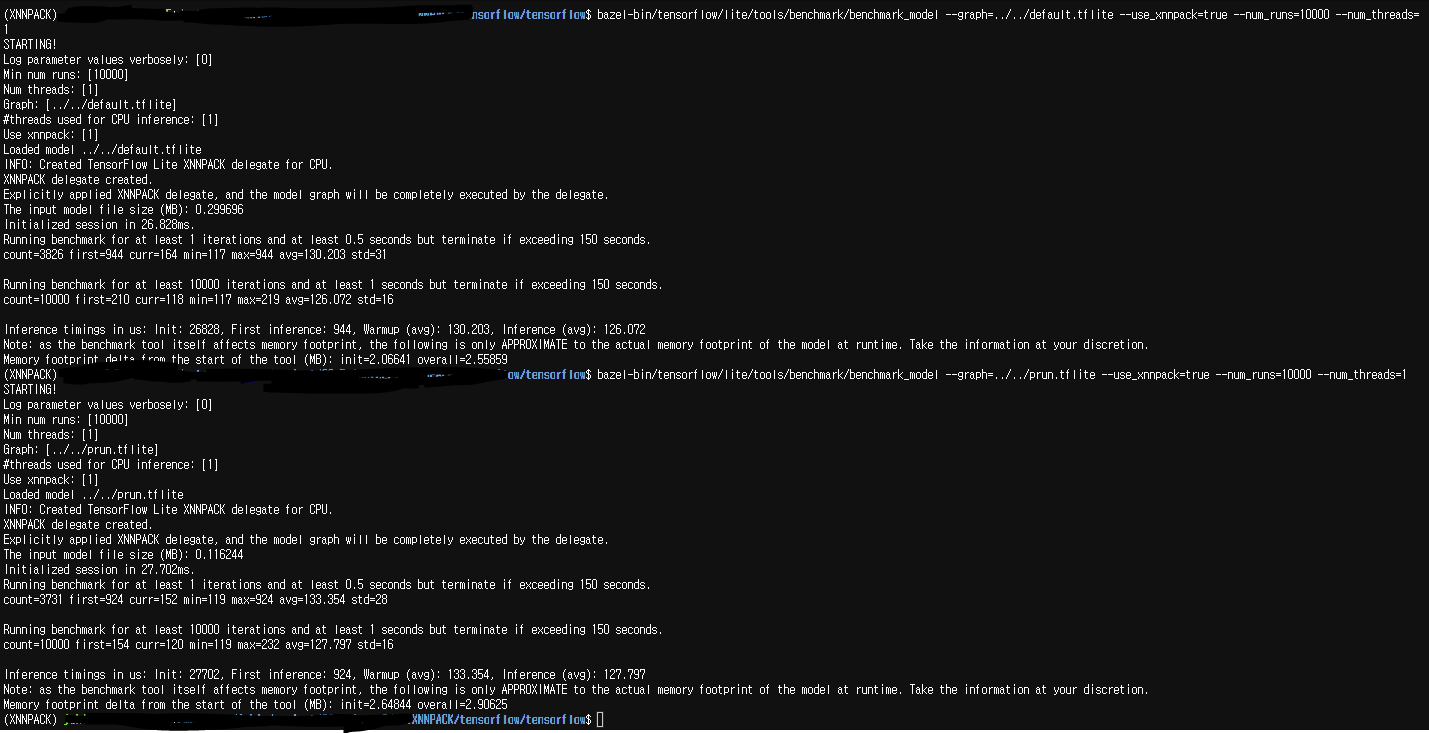

I am running performance tests on a PC.

I needed to convert a pruned model to TFLite.

I followed the steps on the referenced site exactly as written.
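One thing worth ruling out when sparse inference gives no speedup is that the weights handed to the converter are not actually sparse (for example, the pruning schedule never reached its target sparsity). The framework-free sketch below counts exact zeros per kernel. It assumes the kernels have already been exported as nested Python lists, e.g. with `layer.get_weights()[0].tolist()` in Keras. The helper names and the `min_sparsity` cutoff are hypothetical and are not part of TensorFlow or XNNPACK.

```python
from typing import Dict, Iterator, List, Union

# A kernel as a nested Python list, e.g. from Keras: layer.get_weights()[0].tolist()
Nested = Union[float, List["Nested"]]


def _flatten(values: Nested) -> Iterator[float]:
    """Yield every scalar in an arbitrarily nested list of numbers."""
    if isinstance(values, (list, tuple)):
        for v in values:
            yield from _flatten(v)
    else:
        yield values


def weight_sparsity(kernel: Nested) -> float:
    """Fraction of entries in `kernel` that are exactly zero."""
    flat = list(_flatten(kernel))
    if not flat:
        raise ValueError("kernel is empty")
    return sum(1 for v in flat if v == 0) / len(flat)


def low_sparsity_layers(kernels: Dict[str, Nested], min_sparsity: float) -> List[str]:
    """Names of layers whose sparsity falls below `min_sparsity`.

    `min_sparsity` is a hypothetical cutoff you choose yourself; it is not a
    documented XNNPACK threshold.
    """
    return sorted(name for name, k in kernels.items() if weight_sparsity(k) < min_sparsity)


# Made-up 1x1 conv kernel (H=1, W=1, Cin=2, Cout=4) with 6 of 8 weights pruned.
kernel = [[[[0.0, 0.0, 0.3, 0.0],
            [0.0, -0.1, 0.0, 0.0]]]]
print(f"sparsity = {weight_sparsity(kernel):.2f}")  # sparsity = 0.75
```

If the layers you expect to be pruned show low sparsity here, the problem is in the pruning step rather than in the TFLite conversion or the benchmark.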

Applying XNNPACK did improve performance.

However, I did not observe any further speedup from sparse inference.

What am I missing?

Test environment:

- Model creation (TensorFlow)
  - Run in a conda environment
  - Python version: 3.7.13
  - TensorFlow version: 2.8.2
  - Language: Python
- Inference (TensorFlow Lite)
  - TensorFlow version: 2.9.1
  - Language: C/C++
  - Benchmark build command:
    `bazel_nojdk-5.0.0-linux-x86_64 build -c opt tensorflow/lite/tools/benchmark:benchmark_model --define tflite_with_xnnpack=true`
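To turn "no improvement" into a number, the dense and sparse `.tflite` files can both be run through the benchmark tool built above under identical settings and their averages compared. Below is a small Python sketch. It assumes the tool prints a summary line containing `Inference (avg): <microseconds>` (check this against your own output), that the binary sits at bazel's default output path for this target, and it uses the hypothetical file names `dense.tflite` and `sparse.tflite`. The flags `--graph`, `--use_xnnpack` and `--num_threads` are existing `benchmark_model` options.

```python
import re
from typing import List

# Assumed shape of benchmark_model's summary line; verify against your own log, e.g.
# "Inference timings in us: Init: 100, First inference: 900, Warmup (avg): 850, Inference (avg): 800"
_AVG_RE = re.compile(r"Inference \(avg\):\s*([0-9.]+(?:[eE][+-]?[0-9]+)?)")

# Default bazel output path of the benchmark_model target built above.
BENCHMARK_BIN = "bazel-bin/tensorflow/lite/tools/benchmark/benchmark_model"


def benchmark_cmd(graph: str, threads: int = 1) -> List[str]:
    """Argument list for one XNNPACK run; use the same thread count for both models."""
    return [BENCHMARK_BIN, f"--graph={graph}", "--use_xnnpack=true", f"--num_threads={threads}"]


def average_inference_us(log_text: str) -> float:
    """Last 'Inference (avg)' value, in microseconds, found in a benchmark log."""
    matches = _AVG_RE.findall(log_text)
    if not matches:
        raise ValueError("no 'Inference (avg)' value found in log")
    return float(matches[-1])


def speedup(dense_log: str, sparse_log: str) -> float:
    """Dense average time / sparse average time; > 1.0 means the sparse model is faster."""
    return average_inference_us(dense_log) / average_inference_us(sparse_log)


# Made-up numbers for illustration only, not measured results.
dense_log = "Inference timings in us: Init: 100, First inference: 900, Warmup (avg): 850, Inference (avg): 800"
sparse_log = "Inference timings in us: Init: 100, First inference: 700, Warmup (avg): 650, Inference (avg): 640"
print(" ".join(benchmark_cmd("dense.tflite")))
print(f"speedup = {speedup(dense_log, sparse_log):.2f}x")  # speedup = 1.25x
```

In practice each command could be launched with `subprocess.run(cmd, capture_output=True, text=True)` and the captured output passed to `speedup`; the summary may be written to stderr, so it is safest to search both streams. A ratio close to 1.0 would correspond to the situation described above.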