Hi Bart,

It’s great that you’re working on object detection with a YOLOv5 model on TensorFlow.js and a Coral USB TPU! To get the quantization parameters for your TensorFlow.js model, you can follow these steps:

1. Convert the Model to TensorFlow.js Format: First, make sure you have converted your YOLOv5 model to TensorFlow.js format. You can use the tfjs-converter tool to do this. Here’s a command you can use as a starting point:

```bash
tensorflowjs_converter --input_format=tf_saved_model --output_format=tfjs_graph_model --signature_name=serving_default --saved_model_tags=serve saved_model_dir/ tfjs_model_dir/
```

Replace saved_model_dir/ with the path to your YOLOv5 saved model and tfjs_model_dir/ with the directory where you want to save the converted model.

2. Load the Converted Model in TensorFlow.js: In your JavaScript code, load the converted model using TensorFlow.js. You can use the tf.loadGraphModel() function for this:

```javascript
import * as tf from '@tensorflow/tfjs';
const model = await tf.loadGraphModel('tfjs_model_dir/model.json');
```

Make sure to replace 'tfjs_model_dir/model.json' with the correct path to your TensorFlow.js model.
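Once the model is loaded, it can help to check what it expects before worrying about scaling. The following is only a sketch: describeTensors is a hypothetical helper, and fakeModel is a stand-in with made-up names and shapes so the snippet runs without a real model. It assumes each entry has name, shape and dtype fields, as the entries of model.inputs and model.outputs do on a loaded graph model.

```javascript
// Summarise tensor metadata entries shaped like { name, shape, dtype }.
function describeTensors(infos) {
  return infos.map(
    (t) => `${t.name}: shape=${JSON.stringify(t.shape)} dtype=${t.dtype}`
  );
}

// Stand-in for a loaded model; the names and shapes are hypothetical.
const fakeModel = {
  inputs: [{ name: 'input_1', shape: [1, 640, 640, 3], dtype: 'float32' }],
  outputs: [{ name: 'Identity', shape: [1, 25200, 85], dtype: 'float32' }],
};

const inputSummary = describeTensors(fakeModel.inputs);
const outputSummary = describeTensors(fakeModel.outputs);

console.log(inputSummary.join('\n'));
console.log(outputSummary.join('\n'));
```

With a real model you would pass model.inputs and model.outputs instead of the stand-in object.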

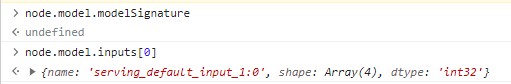

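3. Retrieve Quantization Parameters: You can try to read the quantization parameters from the model’s input and output details as follows. Note that a quantization entry is not a documented part of the TensorFlow.js model signature, so treat these lookups as an assumption and check the results for undefined:

```javascript
// Assumption: each signature entry carries a quantization object with zero and scale.
// Optional chaining yields undefined instead of throwing when a field is absent.
const signature = model.modelSignature?.['serving_default'];
const inputZero = signature?.inputs?.[0]?.quantization?.zero;
const inputScale = signature?.inputs?.[0]?.quantization?.scale;
const outputZero = signature?.outputs?.[0]?.quantization?.zero;
const outputScale = signature?.outputs?.[0]?.quantization?.scale;
```

These lines extract the four quantization parameters that you need for scaling your input and output tensors. If any of them comes back undefined, the converted model does not carry that metadata; the zero point and scale are then best read from the quantized TFLite model you compile for the Coral, where the TFLite interpreter reports them in the quantization entry of its input and output details.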
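Because a missing or malformed parameter would silently produce NaN tensors later, it may be worth validating the values before using them. Here is a minimal sketch; checkQuantParams is a hypothetical helper, the field names zero and scale simply follow the snippet above, and the example values are made up.

```javascript
// Validate a { scale, zero } pair before using it for scaling.
// Throws a descriptive error instead of letting undefined reach the math.
function checkQuantParams(params, label) {
  if (!params || typeof params.scale !== 'number' || typeof params.zero !== 'number') {
    throw new Error(`${label}: quantization parameters are missing`);
  }
  if (!Number.isFinite(params.scale) || params.scale <= 0) {
    throw new Error(`${label}: scale must be a positive finite number`);
  }
  if (!Number.isInteger(params.zero)) {
    throw new Error(`${label}: zero point must be an integer`);
  }
  return { scale: params.scale, zero: params.zero };
}

// Hypothetical values, for illustration only.
const checkedInput = checkQuantParams({ scale: 0.25, zero: 128 }, 'input');
console.log(checkedInput);

// A missing entry is reported instead of propagating undefined.
let missingMessage = null;
try {
  checkQuantParams(undefined, 'output');
} catch (err) {
  missingMessage = err.message;
}
console.log(missingMessage);
```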

4. Scale Input and Output: Now that you have the quantization parameters, you can scale your input and output tensors accordingly:

```javascript
// Quantize the input tensor: q = round(x / scale + zeroPoint)
const scaledInput = tf.round(tf.add(tf.div(inputTensor, inputScale), inputZero));

// Run inference with the scaled input tensor
const output = model.predict(scaledInput);

// Scale the output tensor back to the original range: x = (q - zeroPoint) * scale
const scaledOutput = tf.mul(tf.sub(output, outputZero), outputScale);
```

Replace inputTensor with your actual input tensor, then run inference with the scaled input tensor. Afterward, scale the output tensor back to its original range.
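To make the arithmetic concrete, here is a small worked example in plain JavaScript, without TensorFlow.js. It is a sketch that assumes the usual TFLite-style affine convention, real = scale * (q - zeroPoint), and an unsigned 8-bit range; quantize and dequantize are hypothetical helper names, and the scale and zero point are made-up values rather than ones from your model.

```javascript
// Affine quantization: real = scale * (q - zeroPoint).

// Convert a float value to an unsigned 8-bit quantized value.
function quantize(x, scale, zeroPoint) {
  const q = Math.round(x / scale + zeroPoint);
  return Math.min(255, Math.max(0, q)); // clamp to the uint8 range
}

// Convert a quantized value back to a float.
function dequantize(q, scale, zeroPoint) {
  return (q - zeroPoint) * scale;
}

// Hypothetical parameters, chosen so the arithmetic is exact.
const scale = 0.25;
const zeroPoint = 128;

const values = [-1, 0, 1.5];
const quantized = values.map((v) => quantize(v, scale, zeroPoint));
const restored = quantized.map((q) => dequantize(q, scale, zeroPoint));

console.log(quantized); // [ 124, 128, 134 ]
console.log(restored);  // [ -1, 0, 1.5 ]
```

Values outside the representable range are clamped, so quantize(100, scale, zeroPoint) gives 255 and cannot be recovered exactly on the way back.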

With these steps, you should be able to retrieve the quantization parameters and correctly scale your input and output tensors for object detection with your YOLOv5 model in TensorFlow.js. Good luck with your project, and feel free to ask if you have any more questions!
