Hey guys,

I am trying to convert a project from PyTorch to TensorFlow. While going through the nn.Embedding layer, I came across an argument called padding_idx: wherever the input has an item equal to padding_idx, the output of the embedding layer at that position is all zeros.

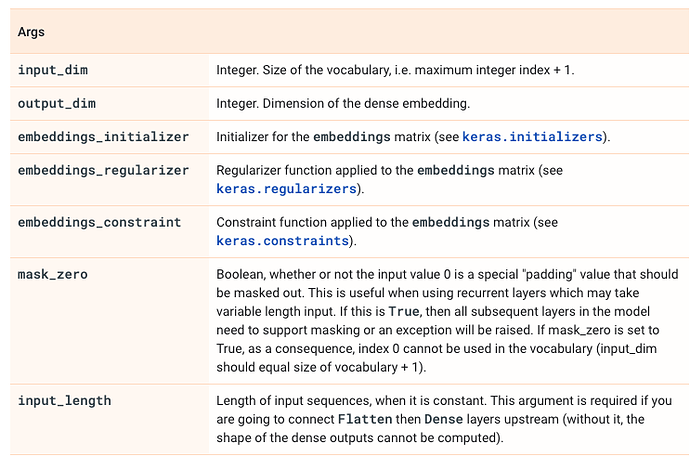

TensorFlow’s Embedding layer, however, doesn’t seem to have an argument equivalent to padding_idx. Is there any way to get this behavior in TensorFlow?

Best,

Ashik.

See the masking and padding guide.

Basically, Keras layers have a separate “mask” channel. With masking, the masked locations may contain garbage, but the mask tells you what is or isn’t garbage. In Keras, the standard is to use 0 as the padding token:

    import tensorflow as tf

    embed = tf.keras.layers.Embedding(input_dim=10, output_dim=3, mask_zero=True)
    inputs = tf.constant([[1, 2, 3, 0]])

    output = embed(inputs)
    print(output, '\n')

    mask = embed.compute_mask(inputs)
    print(mask)

    tf.Tensor(
    [[[ 0.04474724  0.03404218  0.00422993]
      [ 0.02613263  0.01954294 -0.03540694]
      [ 0.04202956 -0.00365224 -0.02961313]
      [-0.01028649  0.00919291  0.01706466]]], shape=(1, 4, 3), dtype=float32)

    tf.Tensor([[ True  True  True False]], shape=(1, 4), dtype=bool)
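
If you need the padded positions to be literal zeros, as with padding_idx in PyTorch, one option is to multiply the embedding output by the mask. Here is a minimal sketch, assuming 0 is the padding id. `zero_padded` is a hypothetical helper name, not part of Keras, and it builds the mask with `tf.not_equal`, which should give the same result as `compute_mask` when `mask_zero=True`:

```python
import tensorflow as tf


def zero_padded(embeddings, token_ids, pad_id=0):
    """Hypothetical helper: zero the embedding vectors at padded positions."""
    # True where the token is real, False where it equals pad_id.
    mask = tf.not_equal(token_ids, pad_id)
    # Cast to the embedding dtype and add a trailing axis so the mask
    # broadcasts over the embedding dimension: (batch, time) -> (batch, time, 1).
    mask = tf.cast(mask, embeddings.dtype)[..., tf.newaxis]
    return embeddings * mask


embed = tf.keras.layers.Embedding(input_dim=10, output_dim=3, mask_zero=True)
inputs = tf.constant([[1, 2, 3, 0]])

output = embed(inputs)                # shape (1, 4, 3)
zeroed = zero_padded(output, inputs)  # last position is now all zeros
print(zeroed)
```

Layers that consume the mask generally don't need this step. It mostly matters when you read the embedding output directly or feed it to code that ignores the mask.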