Hi all, I’ll get straight to it!

Intent: Trying to figure out what each tensor in the returned array of tensors represents

Here is the information I have, in case it is pertinent:

What is it: a TensorFlow.js implementation of object detection using a transfer-learning model that was converted from a TensorFlow SavedModel to the TensorFlow.js format with the TensorFlow.js converter. The model is loaded using `await tf.loadGraphModel('/Resources/A.I/model.json');`

Model: ssd_mobilenet_v2_320x320_coco17_tpu-8

Config File:

```text
model {
  ssd {
    num_classes: 312
    image_resizer {
      fixed_shape_resizer {
        height: 300
        width: 300
      }
    }
    feature_extractor {
      type: "ssd_mobilenet_v2_keras"
      depth_multiplier: 1.0
      min_depth: 16
      conv_hyperparams {
        regularizer {
          l2_regularizer {
            weight: 3.9999998989515007e-05
          }
        }
        initializer {
          truncated_normal_initializer {
            mean: 0.0
            stddev: 0.029999999329447746
          }
        }
        activation: RELU_6
        batch_norm {
          decay: 0.9700000286102295
          center: true
          scale: true
          epsilon: 0.0010000000474974513
          train: true
        }
      }
      override_base_feature_extractor_hyperparams: true
    }
    box_coder {
      faster_rcnn_box_coder {
        y_scale: 10.0
        x_scale: 10.0
        height_scale: 5.0
        width_scale: 5.0
      }
    }
    matcher {
      argmax_matcher {
        matched_threshold: 0.5
        unmatched_threshold: 0.5
        ignore_thresholds: false
        negatives_lower_than_unmatched: true
        force_match_for_each_row: true
        use_matmul_gather: true
      }
    }
    similarity_calculator {
      iou_similarity {
      }
    }
    box_predictor {
      convolutional_box_predictor {
        conv_hyperparams {
          regularizer {
            l2_regularizer {
              weight: 3.9999998989515007e-05
            }
          }
          initializer {
            random_normal_initializer {
              mean: 0.0
              stddev: 0.009999999776482582
            }
          }
          activation: RELU_6
          batch_norm {
            decay: 0.9700000286102295
            center: true
            scale: true
            epsilon: 0.0010000000474974513
            train: true
          }
        }
        min_depth: 0
        max_depth: 0
        num_layers_before_predictor: 0
        use_dropout: false
        dropout_keep_probability: 0.800000011920929
        kernel_size: 1
        box_code_size: 4
        apply_sigmoid_to_scores: false
        class_prediction_bias_init: -4.599999904632568
      }
    }
    anchor_generator {
      ssd_anchor_generator {
        num_layers: 6
        min_scale: 0.20000000298023224
        max_scale: 0.949999988079071
        aspect_ratios: 1.0
        aspect_ratios: 2.0
        aspect_ratios: 0.5
        aspect_ratios: 3.0
        aspect_ratios: 0.33329999446868896
      }
    }
    post_processing {
      batch_non_max_suppression {
        score_threshold: 9.99999993922529e-09
        iou_threshold: 0.6000000238418579
        max_detections_per_class: 100
        max_total_detections: 100
        use_static_shapes: false
      }
      score_converter: SIGMOID
    }
    normalize_loss_by_num_matches: true
    loss {
      localization_loss {
        weighted_smooth_l1 {
          delta: 1.0
        }
      }
      classification_loss {
        weighted_sigmoid_focal {
          gamma: 2.0
          alpha: 0.75
        }
      }
      classification_weight: 1.0
      localization_weight: 1.0
    }
    encode_background_as_zeros: true
    normalize_loc_loss_by_codesize: true
    inplace_batchnorm_update: true
    freeze_batchnorm: false
  }
}
train_config {
  batch_size: 2
  data_augmentation_options {
    random_horizontal_flip {
    }
  }
  data_augmentation_options {
    ssd_random_crop {
    }
  }
  sync_replicas: true
  optimizer {
    momentum_optimizer {
      learning_rate {
        cosine_decay_learning_rate {
          learning_rate_base: 0.01
          total_steps: 10000
          warmup_learning_rate: 0.0004
          warmup_steps: 2000
        }
      }
      momentum_optimizer_value: 0.8999999761581421
    }
    use_moving_average: false
  }
  fine_tune_checkpoint: "Overprime_test_annotations/ssd_mobilenet_v2_320x320_coco17_tpu-8/checkpoint/ckpt-0"
  num_steps: 10000
  startup_delay_steps: 0.0
  replicas_to_aggregate: 8
  max_number_of_boxes: 100
  unpad_groundtruth_tensors: false
  fine_tune_checkpoint_type: "detection"
  fine_tune_checkpoint_version: V2
}
train_input_reader {
  label_map_path: "Overprime_test_annotations/label_map.pbtxt"
  tf_record_input_reader {
    input_path: "Overprime_test_annotations/default.tfrecord"
  }
}
eval_config {
  metrics_set: "coco_detection_metrics"
  use_moving_averages: false
}
eval_input_reader {
  label_map_path: "Overprime_test_annotations/label_map.pbtxt"
  shuffle: false
  num_epochs: 1
  tf_record_input_reader {
    input_path: "Overprime_test_annotations/default.tfrecord"
  }
}
```
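The `anchor_generator` block above also determines M, the number of raw (pre-NMS) detections in the TF Hub output description further down, because SSD emits one raw box per anchor. A rough count can be sketched as follows, assuming the Object Detection API's default `reduce_boxes_in_lowest_layer` (3 boxes per cell on the first layer, one box per aspect ratio plus one extra square box on the others) and feature maps of 19, 10, 5, 3, 2 and 1 cells per side for SSD MobileNet V2 at 300x300. Those grid sizes are an assumption, not something stated in the config, so compare the result with the middle dimension of the large output tensors you actually get back.

```javascript
// Sketch: count SSD anchors from the ssd_anchor_generator settings.
// ASSUMPTION: featureMapSizes are per-layer grid sizes (cells per side) for
// SSD MobileNet V2 at a 300x300 input; they are not part of the config.
function countAnchors(featureMapSizes, numAspectRatios, reduceBoxesInLowestLayer = true) {
  return featureMapSizes.reduce((total, size, layer) => {
    // Lowest layer: 3 fixed boxes when reduce_boxes_in_lowest_layer is true.
    // Other layers: one box per aspect ratio plus one interpolated-scale box.
    const boxesPerCell =
      layer === 0 && reduceBoxesInLowestLayer ? 3 : numAspectRatios + 1;
    return total + size * size * boxesPerCell;
  }, 0);
}

// num_layers: 6 and five aspect_ratios entries, as in the config above.
const anchors = countAnchors([19, 10, 5, 3, 2, 1], 5);
console.log(anchors); // 1917
```

If the raw tensors come back as `[1, 1917, 4]` and `[1, 1917, ...]`, that supports this reading; a different number just means the assumed grid sizes are off.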

JavaScript code snippet:

```javascript
async function predictWebcam() {
  //const webcam = await tf.data.webcam(video/*, {resizeWidth: 320, resizeHeight: 320,}*/);
  //const img = await webcam.capture();

  // tf.tidy() frees the intermediate tensors once the input batch is built,
  // so calling this function over and over does not leak memory.
  const batched = tf.tidy(() => {
    // Raw RGB pixels from the <video> element, int32 values in [0, 255].
    const input = tf.browser.fromPixels(video);
    // Resize to 300x300, the fixed_shape_resizer size in the config.
    const resized = tf.image.resizeBilinear(input, [300, 300]);
    // ASSUMPTION: the model was exported with the Object Detection API's
    // image-tensor input, which takes integer 0-255 pixels and normalizes
    // them inside the graph. Dividing by 255 and subtracting 1 before
    // toInt() would collapse nearly every pixel to 0 or -1.
    return resized.toInt().expandDims(0);
  });

  const result = await model.executeAsync(batched);
  batched.dispose();

  console.log("Ok, here is the entire tensor array output");
  console.log(result);
  console.log("Size of output");
  console.log(result.length);

  // Print each output's shape and values (1-based, to match "Tensor 1" etc.),
  // then free it.
  result.forEach((tensor, i) => {
    console.log(`Ok, here is element ${i + 1}, shape [${tensor.shape}]`);
    console.log(tensor.arraySync());
    tensor.dispose();
  });
}
```
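To match each printed tensor against the TF Hub descriptions without scrolling through full `arraySync()` dumps, a one-line summary per output can help: value range, whether every value is a whole number, and whether the values are sorted from high to low. A minimal sketch in plain JavaScript; `describeValues` is a hypothetical helper that expects a flat array such as `Array.from(tensor.dataSync())`.

```javascript
// Hypothetical helper: summarize a flat array of numbers from one output tensor.
function describeValues(values) {
  // A plain loop instead of Math.max(...values): the raw score tensors can
  // hold hundreds of thousands of values, too many to spread as arguments.
  let min = Infinity;
  let max = -Infinity;
  for (const v of values) {
    if (v < min) min = v;
    if (v > max) max = v;
  }
  return {
    count: values.length,
    min,
    max,
    // Class IDs and anchor indices are whole numbers; scores and boxes usually are not.
    allIntegers: values.every(Number.isInteger),
    // Post-NMS detection scores are normally sorted from most to least confident.
    nonIncreasing: values.every((v, i) => i === 0 || v <= values[i - 1]),
  };
}

console.log(describeValues([0.93, 0.41, 0.07]));
// e.g. min 0.07, max 0.93, allIntegers false, nonIncreasing true
```

In the browser, something like `result.map((t) => describeValues(Array.from(t.dataSync())))`, run before the tensors are disposed, gives one summary per output.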

What’s Returned (shortened):

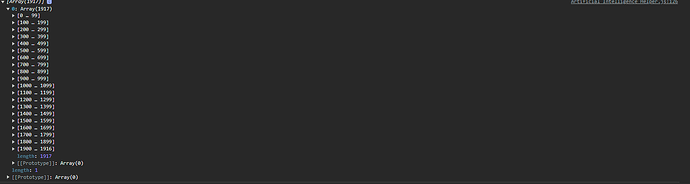

**Original array of Tensors**:

**Tensor 1**:

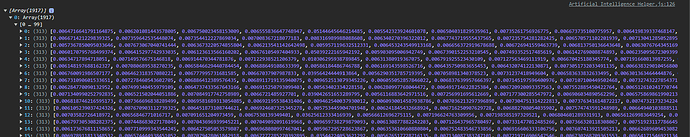

**Tensor 2**:

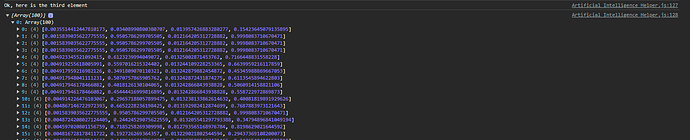

**Tensor 3**:

**Tensor 4**:

**Tensor 5**:

**Tensor 6**:

**Tensor 7**:

**Tensor 8**:

Important Notes: I am not working on or focusing on optimization, accuracy, etc. As someone with no background in machine learning or artificial intelligence, the only thing I am focused on is getting from point A to point B and understanding what I am receiving from the outputs. I have debugged numerous stages along the way, starting with annotating videos in CVAT, through training with Python TensorFlow on a GPU and converting the trained model into a format usable by TensorFlow.js (surprisingly easy, at face value), up to actually loading the model on the website, so I've seen some stuff lol. Once I fully understand what appears to be this very last stage, I can go back to the beginning and do things efficiently/optimally as I learn TensorFlow from the ground up.

Now, regarding the implementation: the model receives data from the webcam. I do what appears to be necessary to get the data into the “appearance” the TensorFlow model expects. Then I run inference and simply call the function again, over and over. From what I can tell by digging into the returned tensors, it “appears” to me that in the output:

Tensor 1: may be the confidence score of each detected object, conveniently already sorted from most to least confident.

Tensor 4: is the ID number of the label for each detected object.
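If Tensor 4 does hold class IDs, they index into the `label_map.pbtxt` used for training, where IDs normally start at 1 (0 is reserved for the background class). A minimal sketch that turns the text of that file (for example, loaded with `fetch`) into an ID-to-name lookup; `parseLabelMap` is a hypothetical helper, and it assumes the usual `item { id: N name: '...' }` layout with single or double quotes. A CVAT export may differ slightly, so check the file first.

```javascript
// Hypothetical helper: build a Map from label ID to name out of label_map.pbtxt text.
// ASSUMPTION: entries look like  item { id: 1 name: 'cat' }  with any whitespace,
// any field order, and single or double quotes around the name.
function parseLabelMap(pbtxt) {
  const labels = new Map();
  for (const [, body] of pbtxt.matchAll(/item\s*\{([^}]*)\}/g)) {
    const id = body.match(/\bid:\s*(\d+)/);
    // \b keeps "display_name:" from matching as "name:".
    const name = body.match(/\bname:\s*["']([^"']*)["']/);
    if (id && name) labels.set(Number(id[1]), name[1]);
  }
  return labels;
}
```

`labels.get(classId)` then gives the name for each value in the class-ID tensor; an `undefined` result is a hint that the tensor holds something else.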

I am only confident that Tensor 4 is what I think it is because no number goes above 312; since I have 312 object labels, this, along with the size of the tensor, leads me to believe it holds the identifiers. What are the others? I've tried looking at the original model's info on TensorFlow Hub (tfhub.dev). Its output dictionary is as follows:

num_detections: a tf.int tensor with only one value, the number of detections [N].

detection_boxes: a tf.float32 tensor of shape [N, 4] containing bounding box coordinates in the following order: [ymin, xmin, ymax, xmax].

detection_classes: a tf.int tensor of shape [N] containing detection class index from the label file.

detection_scores: a tf.float32 tensor of shape [N] containing detection scores.

raw_detection_boxes: a tf.float32 tensor of shape [1, M, 4] containing decoded detection boxes without Non-Max suppression. M is the number of raw detections.

raw_detection_scores: a tf.float32 tensor of shape [1, M, 90] containing class score logits for the raw detection boxes. M is the number of raw detections.

detection_anchor_indices: a tf.float32 tensor of shape [N] containing the anchor indices of the detections after NMS.

detection_multiclass_scores: a tf.float32 tensor of shape [1, N, 91] containing the class score distribution (including the background class) for detection boxes in the image.
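Once the post-NMS outputs are identified, they can be combined into a plain list of detections. A minimal sketch, assuming batch size 1, that `scores`, `classes` and `boxes` are the `[0]` entries of `arraySync()` on `detection_scores`, `detection_classes` and `detection_boxes`, and that boxes are `[ymin, xmin, ymax, xmax]` normalized to [0, 1] (the Object Detection API's usual convention). `decodeDetections`, the `labels` map and the 0.5 threshold are hypothetical choices for illustration.

```javascript
// Hypothetical helper: turn post-NMS outputs into pixel-space detections.
//   scores:  number[N]         detection_scores[0]
//   classes: number[N]         detection_classes[0] (1-based label IDs)
//   boxes:   number[N][4]      detection_boxes[0], normalized [ymin, xmin, ymax, xmax]
//   labels:  Map<number, string> from label ID to name
function decodeDetections(scores, classes, boxes, labels, width, height, minScore = 0.5) {
  const detections = [];
  for (let i = 0; i < scores.length; i++) {
    if (scores[i] < minScore) continue; // skip low-confidence (and zero-padded) rows
    const [ymin, xmin, ymax, xmax] = boxes[i];
    detections.push({
      label: labels.get(classes[i]) ?? `class ${classes[i]}`,
      score: scores[i],
      // Scale normalized coordinates to pixels, e.g. for canvas strokeRect().
      x: xmin * width,
      y: ymin * height,
      width: (xmax - xmin) * width,
      height: (ymax - ymin) * height,
    });
  }
  return detections;
}
```

For drawing over the webcam feed, `video.videoWidth` and `video.videoHeight` are natural values for `width` and `height`.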

The methods I’ve seen for pulling this information out of the tensors don’t work, and I’m sure that has something to do with the conversion from the original model to the TensorFlow.js format (allegedly easy, right?). I think I remember reading that the converter tries to do a 1:1 conversion, meaning the outputs end up in exactly the same arrangement as in the original model. I tried researching what the original model’s output order was but, admittedly, at this point I’m burned out from being so close to the finish line. With that said, any help or guidance would be awesome. If any additional information is needed, or you have questions about how I got this far, please ask, because sweet baby Jesus this stuff is not at all easy no matter how many examples and docs you read.
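Since the output order after conversion is the open question, one way to sidestep it is to infer each tensor's role from its shape and values rather than its position. A heuristic sketch, assuming batch size 1, the eight outputs in the TF Hub description, and entries of the form `{ shape, data }` with `data` flat (e.g. `{ shape: t.shape, data: Array.from(t.dataSync()) }`). `guessOutputRoles` is hypothetical and can be fooled, for example when every score is exactly 0 because nothing was detected, or when all anchor indices happen to be at most `numClasses`, so treat its answer as a hint to double-check. Logging `model.outputNodes` should also list the converted node names in the order `executeAsync` returns them, though those names may be generic.

```javascript
// Hypothetical helper: guess which SSD output each converted tensor is.
// ASSUMPTIONS: batch size 1, the eight outputs from the TF Hub description,
// and each entry shaped like { shape: number[], data: number[] } (flat data).
function guessOutputRoles(outputs, numClasses) {
  // Post-NMS box tensors have N rows and raw ones have M (one per anchor);
  // M is normally much larger, so the smaller row count is taken as N.
  const boxLike = outputs.filter((o) => o.shape.length === 3 && o.shape[2] === 4);
  const n = Math.min(...boxLike.map((o) => o.shape[1]));

  return outputs.map(({ shape, data }) => {
    const size = shape.reduce((a, b) => a * b, 1);
    if (size === 1) return "num_detections";
    if (shape.length === 3 && shape[2] === 4) {
      return shape[1] === n ? "detection_boxes" : "raw_detection_boxes";
    }
    if (shape.length === 3) {
      // Per-class score matrices: N rows after NMS, M rows before it.
      return shape[1] === n ? "detection_multiclass_scores" : "raw_detection_scores";
    }
    // What is left are the [1, N] tensors: scores, class IDs, anchor indices.
    if (!data.every(Number.isInteger)) return "detection_scores";
    const max = data.reduce((m, v) => Math.max(m, v), -Infinity);
    // Class IDs stay within 1..numClasses; anchor indices usually exceed it.
    return max <= numClasses ? "detection_classes" : "detection_anchor_indices";
  });
}
```

Calling `guessOutputRoles(result.map((t) => ({ shape: t.shape, data: Array.from(t.dataSync()) })), 312)` right after `executeAsync` returns one role name per tensor, in the same order as `result`.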