I am looking to better understand how the output of body-segmentation works (https://github.com/tensorflow/tfjs-models/tree/master/body-segmentation). I have the code working and am getting back an ImageData. How would I use this to know where specific body parts are being detected in the image? I thought these would be labeled in a simpler array. I was able to use some of the utility functions, but I was hoping to better understand how they “read” the mask to highlight certain body parts. Thank you.

Great question! The docs at the link above state:

> MediaPipe SelfieSegmentation returns exactly one segmentation corresponding to all people in the input image.
>
> BodyPix returns exactly one segmentation corresponding to all people in the input image if `multiSegmentation` option is false, and otherwise will return multiple segmentations, one per person.

So my first question: which are you using, MediaPipe or BodyPix?

If I remember correctly, the MediaPipe implementation does not do body-part segmentation, only the body as a whole (i.e. is this pixel part of a human body or not). BodyPix is older and less accurate, but it also supports body-part segmentation (i.e. is this pixel part of a human body, and if so, which part: arm, leg, etc.).

If you are using the MediaPipe model, you would access it as the example code shows:

```js
// Run inside an async function or an ES module (top-level await).
const img = document.getElementById('image');

const segmenter = await bodySegmentation.createSegmenter(
    bodySegmentation.SupportedModels.MediaPipeSelfieSegmentation);
const segmentation = await segmenter.segmentPeople(img);

// toBinaryMask returns an ImageData that acts as a binary mask: one colour
// where there is a person and another where there is not.
const maskImage = await bodySegmentation.toBinaryMask(segmentation);

const opacity = 0.7;
const flipHorizontal = false;
const maskBlurAmount = 0;
const canvas = document.getElementById('canvas');

// Draw the mask image on top of the original image onto a canvas.
// The mask will be drawn semi-transparent, with an opacity of
// 0.7, allowing the original image to remain visible underneath.
await bodySegmentation.drawMask(
    canvas, img, maskImage, opacity, maskBlurAmount, flipHorizontal);
```
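If you want the raw per-pixel answer rather than a drawn overlay, you can read the mask yourself. As I understand the body-segmentation README, `segmentation[0].mask.toImageData()` gives an RGBA image whose alpha channel holds the probability (0 to 255) that a pixel belongs to a person. Here is a minimal sketch assuming that encoding. The helper name `toForegroundArray` is hypothetical, and the 0.5 cutoff is an assumed threshold you may want to tune:

```javascript
// Hypothetical helper: convert an RGBA mask (ImageData-like object with
// width, height and a flat RGBA `data` array) into one 0/1 value per pixel.
// Assumes the alpha channel encodes person probability scaled to 0..255.
function toForegroundArray(imageData, threshold = 0.5) {
  const { width, height, data } = imageData;
  const result = new Uint8Array(width * height);
  for (let n = 0; n < width * height; n++) {
    const alpha = data[n * 4 + 3]; // 4th byte of pixel n is alpha
    result[n] = alpha / 255 > threshold ? 1 : 0;
  }
  return result;
}

// Usage in the browser (MediaPipe returns one segmentation for all people):
//   const mask = await segmentation[0].mask.toImageData();
//   const isPerson = toForegroundArray(mask);
//   const x = 10, y = 20;
//   console.log(isPerson[y * mask.width + x] === 1);
```

Pixel `(x, y)` lives at index `y * width + x` because the data is stored row by row.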

If you are using BodyPix instead, you would use it as follows:

```js
// Run inside an async function or an ES module (top-level await).
const img = document.getElementById('image');

const segmenter = await bodySegmentation.createSegmenter(
    bodySegmentation.SupportedModels.BodyPix);
const segmentation = await segmenter.segmentPeople(
    img, {multiSegmentation: false, segmentBodyParts: true});

// The colored part image is an RGB image with a corresponding color from the
// rainbow colors for each part at each pixel, and the background color passed
// as the third argument (here opaque white) where there is no part.
const coloredPartImage = await bodySegmentation.toColoredMask(
    segmentation, bodySegmentation.bodyPixMaskValueToRainbowColor,
    {r: 255, g: 255, b: 255, a: 255});

const opacity = 0.7;
const flipHorizontal = false;
const maskBlurAmount = 0;
const canvas = document.getElementById('canvas');

// Draw the colored part image on top of the original image onto a canvas.
// The colored part image will be drawn semi-transparent, with an opacity of
// 0.7, allowing the original image to remain visible underneath.
await bodySegmentation.drawMask(
    canvas, img, coloredPartImage, opacity, maskBlurAmount, flipHorizontal);
```

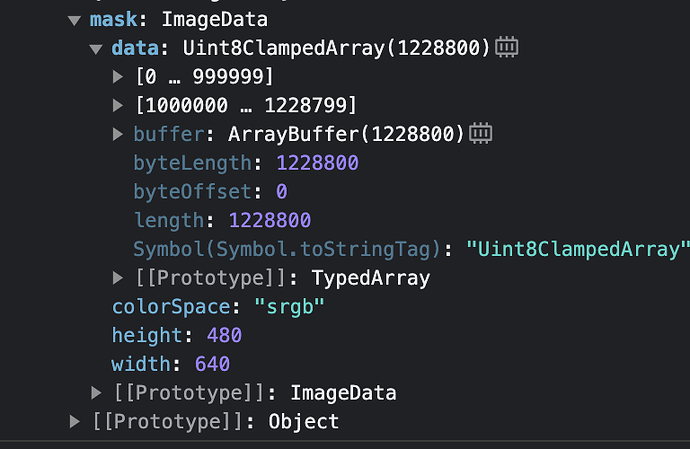
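To get the “simpler array” of labels directly from the BodyPix mask: as I understand the README, in the ImageData from `mask.toImageData()` the red channel holds the body part id, green and blue are 0, and alpha holds the probability that the pixel belongs to a person. Under that assumption, here is a minimal sketch that produces one integer per pixel, using -1 for background. The helper name `toPartIds` and the 0.5 cutoff are hypothetical:

```javascript
// Hypothetical helper: turn a BodyPix part mask (ImageData-like: width, height,
// flat RGBA `data`) into one integer per pixel: the part id, or -1 for background.
// Assumes red = part id and alpha = person probability (0..255).
function toPartIds(imageData, threshold = 0.5) {
  const { width, height, data } = imageData;
  const partIds = new Int32Array(width * height);
  for (let n = 0; n < partIds.length; n++) {
    const red = data[n * 4];       // body part id
    const alpha = data[n * 4 + 3]; // person probability
    partIds[n] = alpha / 255 > threshold ? red : -1;
  }
  return partIds;
}

// Usage in the browser, with `segmenter` and `img` from the BodyPix snippet:
//   const [seg] = await segmenter.segmentPeople(img, {segmentBodyParts: true});
//   const mask = await seg.mask.toImageData();
//   const partIds = toPartIds(mask);
//   const id = partIds[y * mask.width + x];
//   if (id !== -1) console.log(seg.maskValueToLabel(id)); // the part's name
```

The alpha check matters because part id 0 is a real part, so a red value of 0 on its own cannot distinguish it from background.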

Thanks Jason. I’m using BodyPix so I can get the body parts segmented, and I have the colored mask working properly. I was hoping there was a way to interpret the segmentation to know which areas (pixels) belong to each body part, similar to how keypoints work with poses.

Oh right, understood, thank you. Check out my glitch.com example, which shows exactly how to use the data returned from BodyPix and render each body part using different colours I have defined:

The exact part that reads the value from the result and then picks a colour from my colour array is as follows:

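```js
// A function to render returned segmentation data to a given canvas context.
// `colourMap` is the array of {r, g, b, a} colours defined elsewhere in the
// example, indexed by body part id. The canvas is assumed to be the same
// size as the segmentation, so pixel n lines up with segmentation.data[n].
function processSegmentation(canvas, segmentation) {
  const ctx = canvas.getContext('2d');
  const imageData = ctx.getImageData(0, 0, canvas.width, canvas.height);
  const data = imageData.data; // flat RGBA bytes, 4 per pixel

  let n = 0; // pixel index into segmentation.data
  for (let i = 0; i < data.length; i += 4) {
    const part = segmentation.data[n]; // body part id, or -1 for no part
    if (part !== -1) {
      data[i] = colourMap[part].r;     // red
      data[i + 1] = colourMap[part].g; // green
      data[i + 2] = colourMap[part].b; // blue
      data[i + 3] = colourMap[part].a; // alpha
    } else {
      // Fully transparent where no body part was found.
      data[i] = 0;
      data[i + 1] = 0;
      data[i + 2] = 0;
      data[i + 3] = 0;
    }
    n++;
  }

  ctx.putImageData(imageData, 0, 0);
}
```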

TLDR: `segmentation.data[n]` contains an integer representing which body part was found at pixel `n` (or -1 if no body part was found there). I use this integer as an index to pick a colour value from my array above, which I then use to render that body part in a different colour!
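Because that array is laid out row by row, pixel `n` sits at `x = n % width` and `y = Math.floor(n / width)`, so you can derive keypoint-like information per part yourself. Here is a minimal sketch that collects a pixel count, bounding box and centroid for every part id present. It assumes a flat array of part ids with -1 for background, like the `segmentation.data` used in the function above, plus the image width. The helper name `summarizeParts` is hypothetical:

```javascript
// Hypothetical helper: given a flat array of part ids (one per pixel, row by row,
// -1 where no part was found), collect a bounding box, pixel count and centroid
// for every part id present. Pixel n sits at x = n % width, y = floor(n / width).
function summarizeParts(partIds, width) {
  const parts = new Map(); // part id -> running stats
  for (let n = 0; n < partIds.length; n++) {
    const id = partIds[n];
    if (id === -1) continue; // background pixel
    const x = n % width;
    const y = Math.floor(n / width);
    let s = parts.get(id);
    if (!s) {
      s = { count: 0, sumX: 0, sumY: 0, minX: x, maxX: x, minY: y, maxY: y };
      parts.set(id, s);
    }
    s.count++;
    s.sumX += x;
    s.sumY += y;
    s.minX = Math.min(s.minX, x);
    s.maxX = Math.max(s.maxX, x);
    s.minY = Math.min(s.minY, y);
    s.maxY = Math.max(s.maxY, y);
  }
  const summary = {};
  for (const [id, s] of parts) {
    summary[id] = {
      pixels: s.count,
      box: { minX: s.minX, minY: s.minY, maxX: s.maxX, maxY: s.maxY },
      centroid: { x: s.sumX / s.count, y: s.sumY / s.count },
    };
  }
  return summary;
}

// Usage with a segmentation like the one processSegmentation receives:
//   const summary = summarizeParts(segmentation.data, segmentation.width);
//   console.log(summary[12]); // stats for part id 12, if it was detected
```

The centroid is a rough stand-in for a keypoint: it marks the middle of a part's detected pixels, not an anatomical landmark.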

Jason

I see. I didn’t realize that the `data[n]` integer existed to identify the parts. I’ll experiment with using that. Thank you.