Hi, I am using a TensorFlow Keras deep learning model to train on my data.

My GPU instance has 16 GB of memory, and after the train/validation split my data is also about 16 GB, so I am not able to train because of memory limitations.

Here are my estimator code and my Python training script.

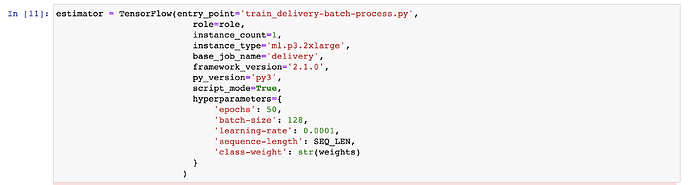

Estimator,

Python training script,

import argparse
import ast
import os

import numpy as np
import json
import tensorflow as tf
import tensorflow.keras
from tensorflow.keras import backend as K
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout, LSTM, BatchNormalization
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.utils import multi_gpu_model
from sklearn.metrics import classification_report, accuracy_score

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--epochs', type=int, default=10)
    parser.add_argument('--learning-rate', type=float, default=0.01)
    parser.add_argument('--batch-size', type=int, default=128)
    parser.add_argument('--sequence-length', type=int, default=60)
    parser.add_argument('--class-weight', type=str, default='{0:1,1:1}')
    parser.add_argument('--gpu-count', type=int, default=os.environ['SM_NUM_GPUS'])
    parser.add_argument("--model_dir", type=str)
    parser.add_argument("--sm-model-dir", type=str, default=os.environ.get("SM_MODEL_DIR"))
    parser.add_argument('--train', type=str, default=os.environ['SM_CHANNEL_TRAIN'])
    parser.add_argument('--val', type=str, default=os.environ['SM_CHANNEL_VAL'])
    parser.add_argument("--current-host", type=str, default=os.environ.get("SM_CURRENT_HOST"))
    args, _ = parser.parse_known_args()

    epochs = args.epochs
    lr = args.learning_rate
    batch_size = args.batch_size
    # literal_eval parses the dict string without executing arbitrary code
    class_weight = ast.literal_eval(args.class_weight)
    gpu_count = args.gpu_count
    model_dir = args.sm_model_dir
    training_dir = args.train
    validation_dir = args.val
    sequence_length = args.sequence_length

    # load data (each .npz archive is opened once; both arrays are read fully into host memory)
    train_data = np.load(os.path.join(training_dir, 'train.npz'))
    X_train = train_data['X']
    y_train = train_data['y']
    val_data = np.load(os.path.join(validation_dir, 'val.npz'))
    X_val = val_data['X']
    y_val = val_data['y']

    # create model
    model = Sequential()
    model.add(LSTM(32, input_shape=(X_train.shape[1:]), return_sequences=True))
    model.add(Dropout(0.2))
    model.add(BatchNormalization())
    model.add(LSTM(32))
    model.add(Dropout(0.2))
    model.add(BatchNormalization())
    model.add(Dense(32, activation='relu'))
    model.add(Dropout(0.2))
    model.add(Dense(1, activation='sigmoid'))

    #if gpu_count > 1:
    #    model = multi_gpu_model(model, gpus=gpu_count)

    METRICS = [
        tf.keras.metrics.TruePositives(name='tp'),
        tf.keras.metrics.FalsePositives(name='fp'),
        tf.keras.metrics.TrueNegatives(name='tn'),
        tf.keras.metrics.FalseNegatives(name='fn'),
        tf.keras.metrics.BinaryAccuracy(name='accuracy'),
        tf.keras.metrics.Precision(name='precision'),
        tf.keras.metrics.Recall(name='recall'),
        tf.keras.metrics.AUC(name='auc'),
        tf.keras.metrics.AUC(name='prc', curve='PR'),  # precision-recall curve
    ]

    # compile model
    model.compile(loss=tf.keras.losses.binary_crossentropy,
                  optimizer=Adam(learning_rate=lr, decay=1e-6),
                  metrics=METRICS)

    # Slicing using tensorflow apis: features and labels go into ONE dataset of
    # (x, y) pairs, batched here because fit() does not accept y or batch_size
    # when x is a tf.data.Dataset. The full arrays must still fit in memory.
    tf_train_dataset = tf.data.Dataset.from_tensor_slices((X_train, y_train)).batch(batch_size)

    # Train model
    model.fit(tf_train_dataset,
              epochs=epochs,
              class_weight=class_weight,
              validation_data=(X_val, y_val),
              verbose=2)

I am new to TensorFlow. Could you please help me understand how I can train on my data in chunks, so that the GPU instance's memory is used efficiently?

Thanks in advance.
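One possible way to train in chunks, sketched under some assumptions: the arrays are first re-saved as plain `.npy` files (as far as I know, `np.load(..., mmap_mode=...)` only memory-maps `.npy` files, not `.npz` archives), and batches are then read from disk on demand instead of loading everything up front. The file names `train_X.npy` and `train_y.npy` and the helpers `batch_generator` and `steps_per_epoch` are hypothetical names chosen for illustration, and the demo uses small synthetic arrays in place of the real data.

```python
import os
import tempfile

import numpy as np


def batch_generator(x_path, y_path, batch_size, shuffle=True, seed=0):
    """Yield (X, y) batches forever from memory-mapped .npy files."""
    # mmap_mode="r" leaves the arrays on disk; only the slices indexed
    # below are actually read into host memory.
    X = np.load(x_path, mmap_mode="r")
    y = np.load(y_path, mmap_mode="r")
    n = X.shape[0]
    rng = np.random.default_rng(seed)
    while True:  # loop indefinitely; the caller decides how many steps make an epoch
        starts = np.arange(0, n, batch_size)
        if shuffle:
            # Shuffle the order of batches but keep each read contiguous on disk.
            rng.shuffle(starts)
        for start in starts:
            stop = min(start + batch_size, n)
            # np.asarray copies just this slice into ordinary in-memory arrays.
            yield np.asarray(X[start:stop]), np.asarray(y[start:stop])


def steps_per_epoch(n_samples, batch_size):
    """Number of batches needed to see every sample once (ceiling division)."""
    return -(-n_samples // batch_size)


# Tiny demo: synthetic data standing in for the real train_X.npy / train_y.npy.
tmp_dir = tempfile.mkdtemp()
x_path = os.path.join(tmp_dir, "train_X.npy")
y_path = os.path.join(tmp_dir, "train_y.npy")
np.save(x_path, np.random.rand(10, 60, 4).astype("float32"))
np.save(y_path, np.random.randint(0, 2, size=10).astype("float32"))

gen = batch_generator(x_path, y_path, batch_size=4, shuffle=False)
first_X, first_y = next(gen)
print(first_X.shape, first_y.shape)  # (4, 60, 4) (4,)
```

In the training script, such a generator could replace the in-memory arrays, for example `model.fit(gen, steps_per_epoch=steps_per_epoch(n_train, batch_size), epochs=epochs, verbose=2)`, where `n_train` is the number of training samples; `batch_size` is not passed to `fit` in that case because the generator already produces batches. The same generator could also be wrapped with `tf.data.Dataset.from_generator` if prefetching is wanted. Note that this addresses host memory used while loading; the model itself only ever sees one batch at a time on the GPU.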