Hello, I have been using TFJS for a while for pose recognition (with PoseNet) and it is magnificent! Now I wonder if I can use TF for a different use case: I want to scan printed assessments with multiple questions, where the options are circles that the user fills in with a black pen.

I imagine I need a way to recognize the frame containing all the answers, similar to the way the corner markers of QR codes are used… I would appreciate any suggestions about a good approach to this problem.

Thanks!

Welcome to the forum and thanks for being part of the TensorFlow.js community!

A few things.

- Glad you were enjoying PoseNet. However, please consider upgrading to MoveNet, which is almost 100x faster and much more accurate. PoseNet was good when it came out, but MoveNet is now the standard.

Learn more here:

- For your new problem, I would first ask whether you need machine learning for this task at all. Regular computer vision may be adequate depending on your data. For example, if the form is well scanned and purely black and white, it may be fairly trivial to find all the black areas that are squares of a certain size, and then work out which of those contain more filled pixels than the others.
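As a rough illustration of that non-ML idea, here is a minimal TypeScript sketch that measures how dark each answer mark is and picks the darkest one. It assumes the image is already flattened and converted to grayscale, and that the mark positions are known from the form layout. It uses circles, since that is what the question describes. The `GrayImage` and `Bubble` types, the function names, and the threshold values are all made up for this example and would need tuning on real data.

```typescript
// Grayscale image stored row-major, one byte per pixel (0 = black, 255 = white).
interface GrayImage {
  width: number;
  height: number;
  data: Uint8Array;
}

// Hypothetical description of one answer circle, in pixel coordinates.
interface Bubble {
  cx: number;
  cy: number;
  r: number;
}

// Fraction of the pixels inside the circle that are darker than `threshold`.
function fillRatio(img: GrayImage, cx: number, cy: number, r: number, threshold = 128): number {
  const x0 = Math.max(0, Math.floor(cx - r));
  const x1 = Math.min(img.width - 1, Math.ceil(cx + r));
  const y0 = Math.max(0, Math.floor(cy - r));
  const y1 = Math.min(img.height - 1, Math.ceil(cy + r));
  let dark = 0;
  let total = 0;
  for (let y = y0; y <= y1; y++) {
    for (let x = x0; x <= x1; x++) {
      const dx = x - cx;
      const dy = y - cy;
      if (dx * dx + dy * dy > r * r) continue; // outside the circle
      total++;
      if (img.data[y * img.width + x] < threshold) dark++;
    }
  }
  return total === 0 ? 0 : dark / total;
}

// Index of the most filled option, or -1 if no option is filled more than `minFill`.
function pickAnswer(img: GrayImage, options: Bubble[], minFill = 0.5): number {
  let best = -1;
  let bestRatio = minFill;
  options.forEach((o, i) => {
    const ratio = fillRatio(img, o.cx, o.cy, o.r);
    if (ratio > bestRatio) {
      best = i;
      bestRatio = ratio;
    }
  });
  return best;
}
```

In a browser, the pixel data could come from a canvas, converted to grayscale first. A fixed threshold like this is likely to struggle with uneven lighting, which is one of the cases where a learned model may do better.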

That being said, if you do want to use machine learning, you will probably want to retrain some sort of object detection model to find the objects of interest and their positions, e.g. a filled box vs. an unfilled box.

For that I highly recommend following this great tutorial by Hugo:

You can then run that resulting trained model in the browser to detect custom objects like you need and find their locations in the given image.

Hi @Jason, thank you for your answer!

I had no idea MoveNet was out; I will check it out for sure.

About point 2: if I understood correctly, my problem is probably more on the side of “regular computer vision” than machine learning itself:

- I can control the printed forms: I can add QR codes or AR markers if needed…

- I know in advance the layout of the form to process.

I guess the main challenge is that the capture should be done with a phone or in front of a webcam rather than with a flatbed scanner. That’s why I imagined that AR markers might help: to determine how the sheet is rotated, apply some kind of “inverse transformation” to “flatten” the captured picture, and then compare the circles with the answers.
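The “inverse transformation” described here is usually modelled as a homography (a perspective transform) computed from four point correspondences, for example the four marker positions. Below is a minimal TypeScript sketch under the assumption that the four markers have already been located in the photo. The `Point` type and the function names are invented for this example. Libraries such as OpenCV provide an equivalent (`getPerspectiveTransform`), which would probably be the more robust choice.

```typescript
type Point = { x: number; y: number };

// Solve the linear system a·h = b by Gauss-Jordan elimination with partial pivoting.
function solveLinear(a: number[][], b: number[]): number[] {
  const n = b.length;
  const m = a.map((row, i) => [...row, b[i]]); // augmented matrix
  for (let col = 0; col < n; col++) {
    let pivot = col;
    for (let r = col + 1; r < n; r++) {
      if (Math.abs(m[r][col]) > Math.abs(m[pivot][col])) pivot = r;
    }
    if (Math.abs(m[pivot][col]) < 1e-12) {
      throw new Error("Degenerate point configuration");
    }
    [m[col], m[pivot]] = [m[pivot], m[col]];
    for (let r = 0; r < n; r++) {
      if (r === col) continue;
      const f = m[r][col] / m[col][col];
      for (let c = col; c <= n; c++) m[r][c] -= f * m[col][c];
    }
  }
  return m.map((row, i) => row[n] / row[i]);
}

// 3x3 homography (row-major, last entry fixed to 1) that maps from[i] onto to[i].
function homography(from: Point[], to: Point[]): number[] {
  if (from.length !== 4 || to.length !== 4) {
    throw new Error("Exactly four point pairs are required");
  }
  const a: number[][] = [];
  const b: number[] = [];
  for (let i = 0; i < 4; i++) {
    const { x, y } = from[i];
    const { x: u, y: v } = to[i];
    a.push([x, y, 1, 0, 0, 0, -u * x, -u * y]);
    b.push(u);
    a.push([0, 0, 0, x, y, 1, -v * x, -v * y]);
    b.push(v);
  }
  return [...solveLinear(a, b), 1];
}

// Apply the homography to a single point.
function applyHomography(h: number[], p: Point): Point {
  const w = h[6] * p.x + h[7] * p.y + h[8];
  return {
    x: (h[0] * p.x + h[1] * p.y + h[2]) / w,
    y: (h[3] * p.x + h[4] * p.y + h[5]) / w,
  };
}
```

Since the form layout is known in advance, one option is to compute the homography from form coordinates to photo coordinates and project each answer circle's centre into the photo, instead of warping the whole image. Swapping the two arguments gives the mapping in the other direction.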

Well, my main point is that you may be able to solve your problem using regular computer vision techniques, depending on how clean your image data is. If you find that is not working well, then I would try solving it with machine learning using TensorFlow.js, but also make sure the machine learning solution beats your “baseline” non-ML solution, if that makes sense.

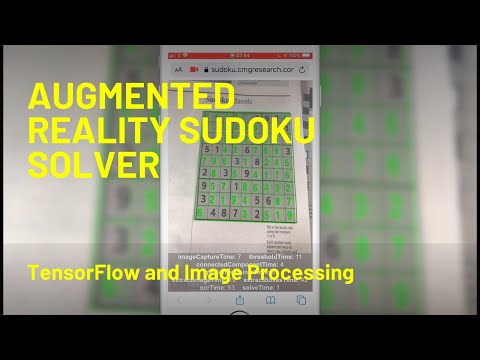

What you want to do is certainly achievable with TensorFlow.js though, and in real time too. Check out this interview I did with a member of the community, who explains really well how he made a Sudoku solver, which is actually a very similar problem to yours:

If you check the links in the video description, there is one more video where he goes even deeper into the preprocessing steps he takes (the non-ML tasks) along with his TensorFlow.js implementation, which will help you too: