hayrijs

February 28, 2022, 2:17am

#1

Hello there,

I am using Keras ImageDataGenerator in my project. After failing to reach the validation loss I wanted, I found something odd.

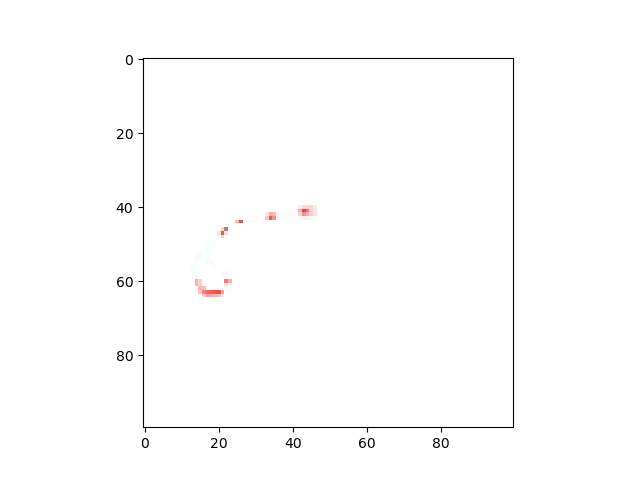

The image above is the original.

I need to feed this image to a model whose input layer is 100x100, so I loaded it with:

train_datagen = ImageDataGenerator(
    validation_split=0,
    rotation_range=5,  # rotation
    horizontal_flip=True,  # horizontal flip
)

train_generator = train_datagen.flow_from_directory(
    directory=generated_images_folder + "training\\" + user.username + "\\train",
    color_mode="rgb",
    batch_size=batch_size,
    # target_size=(100, 100),
    class_mode="categorical",
    shuffle=False
)

I tried this both with and without the target_size=(100, 100) argument.

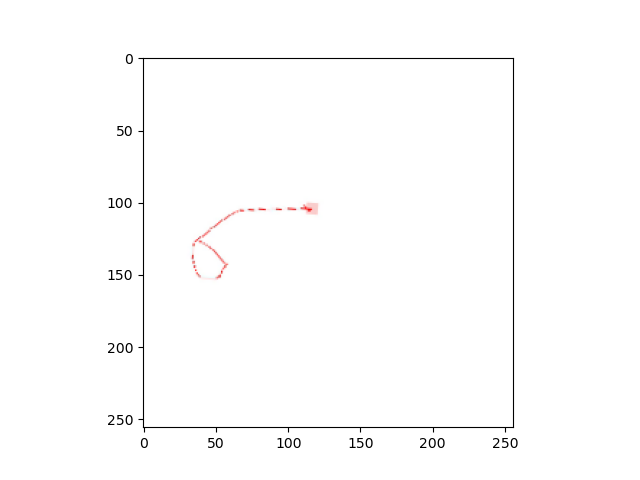

The loaded image above is 256x256, which is the default target_size when the argument is omitted.

After loading this image, I resized it with cv2:

image = cv2.resize(x[0], (100, 100))

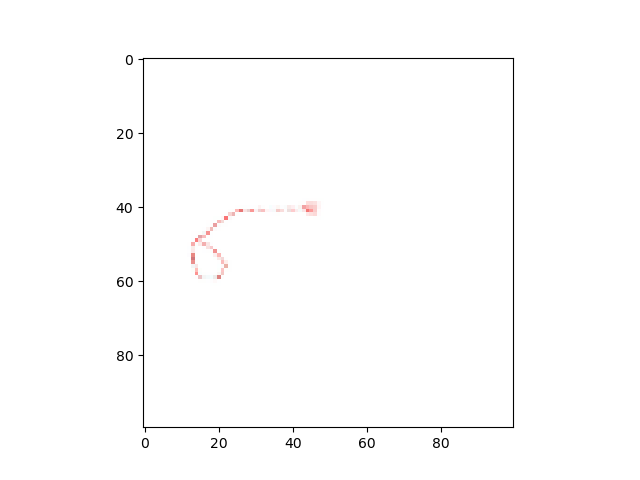

The image above is the result.

So my question is: why does an image loaded by ImageDataGenerator lose so much detail? Is there a bug here?

Bhack

February 28, 2022, 3:19pm

#3

You can change the interpolation argument; by default it is nearest.
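To illustrate why a nearest default can drop thin strokes when shrinking, here is a minimal pure-Python sketch on a single row of pixels. It is not Pillow's actual implementation, and the helper names `resize_nearest` and `resize_box` are made up for this example; it only shows the general idea that nearest sampling copies one source pixel per output pixel, while an averaging filter blends every source pixel it covers.

```python
def resize_nearest(row, new_len):
    """Downsample a 1-D row by copying one source pixel per output pixel."""
    scale = len(row) / new_len
    return [row[int((i + 0.5) * scale)] for i in range(new_len)]


def resize_box(row, new_len):
    """Downsample a 1-D row by averaging the source pixels each output pixel covers."""
    scale = len(row) / new_len
    out = []
    for i in range(new_len):
        start = int(i * scale)
        stop = max(start + 1, int((i + 1) * scale))
        window = row[start:stop]
        out.append(sum(window) / len(window))
    return out


# A white row (255) with one dark pixel (0), standing in for a thin pen stroke.
row = [255] * 256
row[7] = 0

nearest = resize_nearest(row, 100)
box = resize_box(row, 100)

print(min(nearest))  # 255: source column 7 is never sampled, so the stroke disappears
print(min(box))      # below 255: the stroke survives as a grey pixel
```

Whether a given stroke survives nearest sampling depends on where it falls relative to the sampled columns, which would explain why mostly white images with thin details look "eaten" after resizing.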

hayrijs

February 28, 2022, 3:25pm

#4

Good point. However, none of the interpolation methods I tried gave me a clean result. They either blur the image or turn several pixels white, since most of each image is white.

Bhack

February 28, 2022, 3:35pm

#5

Internally these are just PIL/Pillow calls, so you can test the behaviour with the library directly:

            batch_y = np.zeros((len(batch_x), len(self.class_indices)),
                               dtype=self.dtype)
            for i, n_observation in enumerate(index_array):
                batch_y[i, self.classes[n_observation]] = 1.
        elif self.class_mode == 'multi_output':
            batch_y = [output[index_array] for output in self.labels]
        elif self.class_mode == 'raw':
            batch_y = self.labels[index_array]
        else:
            return batch_x
        if self.sample_weight is None:
            return batch_x, batch_y
        else:
            return batch_x, batch_y, self.sample_weight[index_array]

    @property
    def filepaths(self):
        """List of absolute paths to image files."""
        raise NotImplementedError(
            '`filepaths` property method has not been implemented in {}.'.format(
                type(self).__name__))
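As a rough way to test the Pillow behaviour directly, the sketch below resizes a synthetic image with two different filters. The image is a made-up stand-in (white background, thin black vertical strokes), not the picture from this thread, and it assumes Pillow is installed.

```python
from PIL import Image, ImageDraw

# Synthetic stand-in for the thread's image: white background, thin black strokes.
im = Image.new("L", (256, 256), 255)
draw = ImageDraw.Draw(im)
for x in range(7, 256, 23):
    draw.line([(x, 0), (x, 255)], fill=0, width=1)

# "nearest" in the Keras generator maps to Pillow's NEAREST filter.
nearest = im.resize((100, 100), resample=Image.NEAREST)
bicubic = im.resize((100, 100), resample=Image.BICUBIC)

# NEAREST only copies source pixels, so the output stays pure black and white,
# and a stroke that falls between sampled columns can vanish.
print(sorted(set(nearest.tobytes())))

# BICUBIC blends neighbouring pixels, so strokes show up as shades of grey.
print(len(set(bicubic.tobytes())))
```

Saving both results (for example with `nearest.save(...)` and `bicubic.save(...)`) and viewing them side by side should make the difference visible.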

hayrijs

February 28, 2022, 3:41pm

#6

Thank you again. I realized that too. Unlike cv2, PIL doesn’t seem to let us resize without picking one of its interpolation methods, I guess. This may be why cv2 gives cleaner results.

Bhack

February 28, 2022, 3:55pm

#7

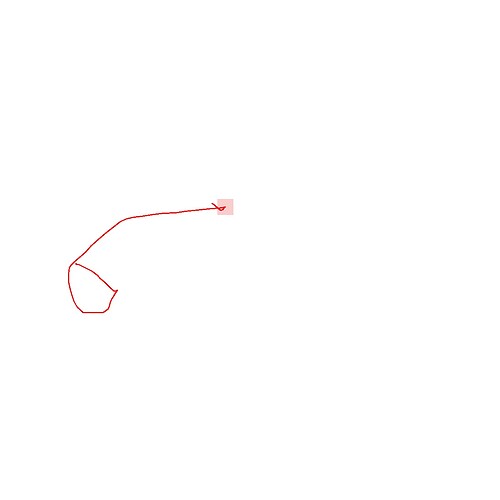

I cannot replicate your problem on Colab with PIL:

from PIL import Image
import tensorflow as tf

im = Image.open("/content/doğru.jpg")
b = im.resize((100,100))
display(b)

Hi, again.

from PIL import Image
import tensorflow as tf
import numpy as np
from matplotlib import pyplot as plt

im = Image.open("/content/deneme/class1/doğru.jpg")
b = im.resize((100,100))
plt.imshow(b)
plt.show()

train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1 / 255.0,
)

train_generator = train_datagen.flow_from_directory(
    directory="/content/deneme",
    color_mode="rgb",
    batch_size=32,
    target_size=(100, 100),
    class_mode="categorical",
    shuffle=False
)

x, y = next(train_generator)
plt.imshow(x[0])
plt.show()

Here I would like to point out that the PIL resize looks fine, but the image coming from the generator is ruined.

Bhack

March 1, 2022, 3:43pm

#11

You need to use the same filter type: e.g. interpolation='bicubic'
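A sketch of that suggestion applied to the generator from this thread, assuming the TF 2.8-era `tf.keras.preprocessing.image` API used above. The names `FLOW_KWARGS` and `make_generator` are made up for this example; only the `interpolation` argument is new compared with the posted code.

```python
# Keyword arguments from the thread's example, plus an explicit resampling filter.
FLOW_KWARGS = dict(
    color_mode="rgb",
    batch_size=32,
    target_size=(100, 100),
    class_mode="categorical",
    shuffle=False,
    interpolation="bicubic",  # the generator's default is "nearest"
)


def make_generator(directory):
    """Build a directory iterator that resizes images with bicubic interpolation."""
    # Imported here so the settings above can be inspected without loading TensorFlow.
    import tensorflow as tf

    datagen = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1 / 255.0)
    return datagen.flow_from_directory(directory=directory, **FLOW_KWARGS)


# Usage (path taken from the thread; adjust it to your own data):
# x, y = next(make_generator("/content/deneme"))
```

With the filter set explicitly on both sides, the generator output and a direct `im.resize((100, 100))` call should be comparable.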

Thank you, you’re correct. I wonder why the default interpolation is nearest in tf while it is bicubic in PIL (see below):

def resize(self, size, resample=BICUBIC, box=None, reducing_gap=None):
    """
    Returns a resized copy of this image.
    ...
    :param resample: An optional resampling filter. This can be
       one of :py:data:`PIL.Image.NEAREST`, :py:data:`PIL.Image.BOX`,
       :py:data:`PIL.Image.BILINEAR`, :py:data:`PIL.Image.HAMMING`,
       :py:data:`PIL.Image.BICUBIC` or :py:data:`PIL.Image.LANCZOS`.
       Default filter is :py:data:`PIL.Image.BICUBIC`.
       If the image has mode "1" or "P", it is
       always set to :py:data:`PIL.Image.NEAREST`.
       See: :ref:`concept-filters`.

Is it because of better performance? (See the filter comparison in Concepts — Pillow (PIL Fork) 9.1.0 documentation.)

Bhack

March 2, 2022, 11:22am

#14

That is just how it is set in that Keras API; in other TF APIs the default is different.

See tf.image.resize | TensorFlow Core v2.8.0

Fair enough! Thanks again for your help.

Bhack

March 2, 2022, 3:20pm

#16

Honestly, it would be nice to have consistent default behavior. You could probably open a ticket in the Keras repo.