In my latest Keras example, I minimally implement “Augmenting Convolutional networks with attention-based aggregation” by Touvron et al.

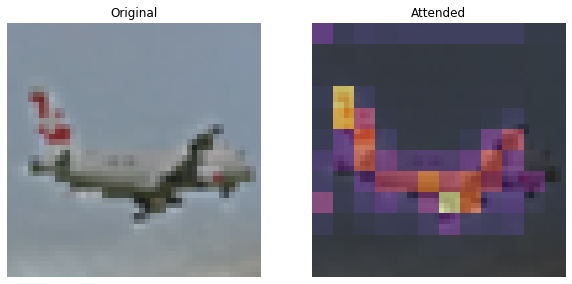

The main idea is to use a non-pyramidal convnet architecture and to replace the final pooling layer with a transformer block. This block acts as a cross-attention layer. It learns to attend to the parts of the feature map that are most useful for the classification decision.
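To make the "attention instead of pooling" idea concrete, here is a minimal single-head sketch in NumPy. It is not the code from the Keras example. It assumes a simplified block in which a learnable class token is the only query and every spatial position of the feature map is a key/value. The block in the paper also has multiple heads, normalization and an MLP, which are omitted here. The names `attention_pool`, `cls_token`, `w_q`, `w_k` and `w_v` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_pool(feature_map, cls_token, w_q, w_k, w_v):
    """Pool an (H, W, C) feature map into a single C-dim vector.

    Simplified, hypothetical single-head cross-attention: the class token
    is the only query, and each spatial position (patch) is a key and value.
    Returns the pooled vector and the (H, W) attention map.
    """
    h, w, c = feature_map.shape
    patches = feature_map.reshape(h * w, c)   # (N, C) patch tokens
    q = cls_token @ w_q                       # (1, C) query from the class token
    k = patches @ w_k                         # (N, C) keys
    v = patches @ w_v                         # (N, C) values
    scores = (q @ k.T) / np.sqrt(c)           # (1, N) scaled dot products
    weights = softmax(scores, axis=-1)        # (1, N), non-negative, sums to 1
    pooled = weights @ v                      # (1, C) weighted sum of values
    return pooled[0], weights.reshape(h, w)

# Usage with random (untrained) parameters:
rng = np.random.default_rng(0)
C = 8
fmap = rng.normal(size=(4, 4, C))
cls = rng.normal(size=(1, C))
w_q, w_k, w_v = (rng.normal(size=(C, C)) for _ in range(3))
vec, attn = attention_pool(fmap, cls, w_q, w_k, w_v)
print(vec.shape, attn.shape)  # (8,) (4, 4)
```

One way to see the relation to ordinary pooling: if every score is equal (for example, with an all-zero query), the weights become uniform. The block then reduces to global average pooling of the values. Training the class token and projections lets the weights move away from uniform toward the informative patches.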

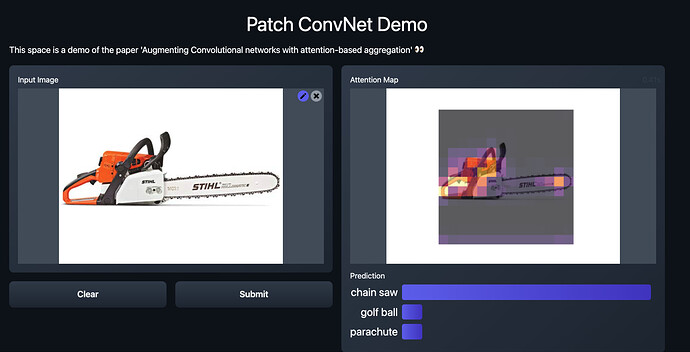

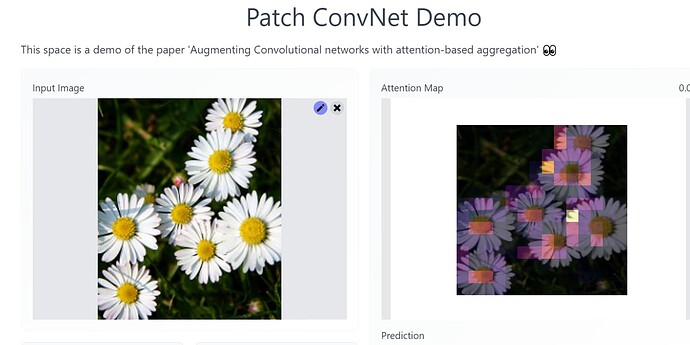

The attention maps from the transformer block make the model more interpretable. They show which part (patch) of the image the model is focusing on when it makes a classification decision.
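As a rough illustration of how such an attention map can be read, the sketch below upsamples a small (h, w) map to image resolution so it can be overlaid as a heatmap. It also reports the most-attended patch. This is not the visualization code from the tutorial or the demo. The helper names are hypothetical, and the sketch assumes nearest-neighbour upsampling with an image size that is an integer multiple of the map size.

```python
import numpy as np

def attention_to_heatmap(attn, image_h, image_w):
    """Upsample an (h, w) attention map to (image_h, image_w).

    Hypothetical helper: min-max normalises the map to [0, 1] and uses
    nearest-neighbour upsampling via np.repeat.
    """
    h, w = attn.shape
    if image_h % h or image_w % w:
        raise ValueError("image size must be a multiple of the attention map size")
    span = attn.max() - attn.min()
    # Normalise so the map can be used directly as an overlay intensity.
    norm = (attn - attn.min()) / span if span > 0 else np.zeros_like(attn)
    # Repeat each patch value over its block of image pixels.
    return np.repeat(np.repeat(norm, image_h // h, axis=0), image_w // w, axis=1)

def most_attended_patch(attn):
    # (row, col) of the patch with the largest attention weight.
    return tuple(int(i) for i in np.unravel_index(np.argmax(attn), attn.shape))

# Usage with a made-up 2x2 attention map and a 224x224 image:
attn = np.array([[0.1, 0.2],
                 [0.6, 0.1]])
heat = attention_to_heatmap(attn, 224, 224)
print(heat.shape)                 # (224, 224)
print(most_attended_patch(attn))  # (1, 0)
```

In practice, you would blend `heat` with the input image (for example, as the alpha channel of a colour overlay). This makes the patches with high attention weight stand out.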

Link to the tutorial: Augmenting convnets with aggregated attention

@Ritwik_Raha, @Devjyoti_Chakraborty and I have built a Hugging Face demo around this example for all of you to try. The demo uses a model trained on the Imagenette dataset.

Link to the demo: Augmenting CNNs with attention-based aggregation - a Hugging Face Space by keras-io

I would like to thank JarvisLabs.ai for providing me with GPU credits for this project.