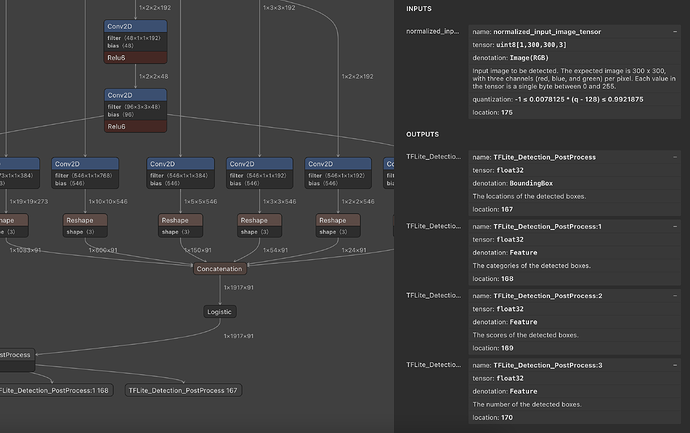

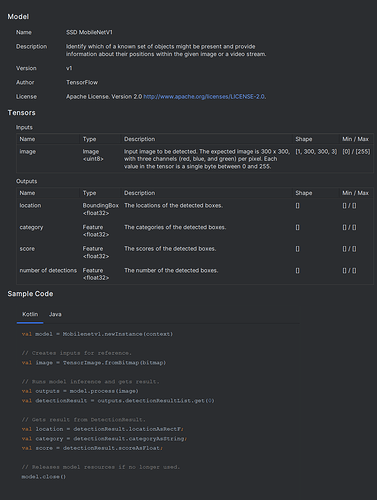

mobilenetv1.tflite

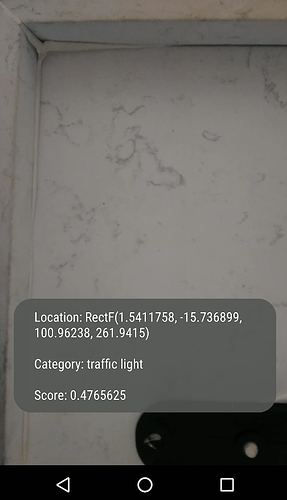

I have successfully integrated the model shown above (mobilenetv1.tflite), which is similar to mine, as the attached image shows. With this model the app produces the expected output (see the screenshot), and the integration works without problems.

—

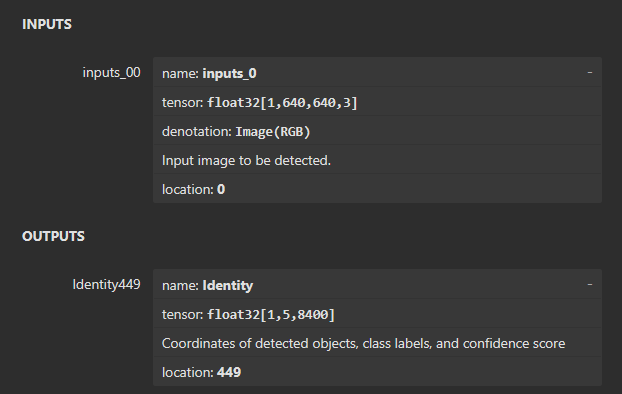

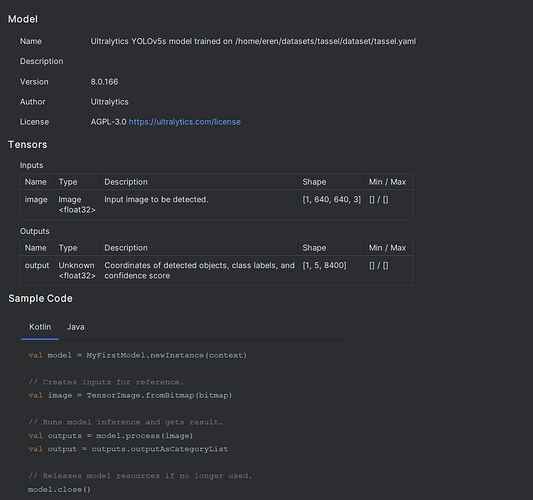

myFirstModel

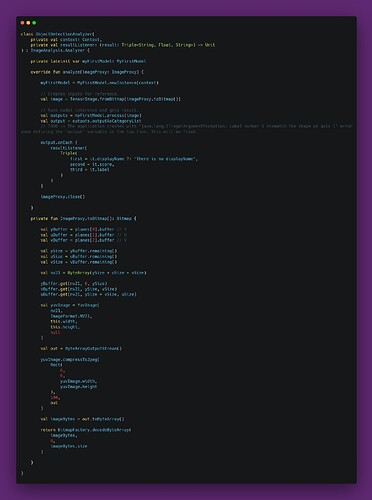

However, when I add ‘myFirstModel’ to the same project and use the sample code that Android Studio suggests, the app throws an exception. The app also throws an exception when I add ‘myFirstModel’ to the sample project from the documentation.

—

The exception I get when integrating “myFirstModel.tflite” into the project where I integrated “mobilenetv1.tflite”:

    java.lang.IllegalArgumentException: Label number 1 mismatch the shape on axis 1
        at org.tensorflow.lite.support.common.internal.SupportPreconditions.checkArgument(SupportPreconditions.java:104)
        at org.tensorflow.lite.support.label.TensorLabel.<init>(TensorLabel.java:87)
        at org.tensorflow.lite.support.label.TensorLabel.<init>(TensorLabel.java:105)
        at com.moveon.objectdetectionstudy.ml.MyFirstModel$Outputs.getOutputAsCategoryList(MyFirstModel.java:105)
        at com.moveon.objectdetectionstudy.ObjectDetectionAnalyzer.analyze(ObjectDetectionAnalyzer.kt:31)
        at androidx.camera.core.ImageAnalysis.lambda$setAnalyzer$2(ImageAnalysis.java:481)
        at androidx.camera.core.ImageAnalysis$$ExternalSyntheticLambda2.analyze(D8$$SyntheticClass)
        at androidx.camera.core.ImageAnalysisAbstractAnalyzer.lambda$analyzeImage$0$androidx-camera-core-ImageAnalysisAbstractAnalyzer(ImageAnalysisAbstractAnalyzer.java:286)
        at androidx.camera.core.ImageAnalysisAbstractAnalyzer$$ExternalSyntheticLambda1.run(D8$$SyntheticClass)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1133)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:607)
        at java.lang.Thread.run(Thread.java:761)
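
For context, as far as I can tell from the TFLite Support library source, this message comes from a precondition in the `TensorLabel` constructor: when it receives a plain label list, it attaches the labels to the first output axis whose size is greater than 1 and requires the number of labels to equal that axis's size. The plain-Java sketch below re-implements that check for illustration only (it is not the library's code). The shape `[1, 25200, 6]` is an assumption: it is what a single-class YOLOv5 export at 640×640 would typically emit (25200 candidate rows of 4 box values, 1 objectness score and 1 class score), not a shape read from myFirstModel.

```java
import java.util.List;

/** Illustrative re-implementation of the label-count check; not the library's own code. */
final class LabelShapeCheck {

    /** Index of the first axis whose size is greater than 1. */
    static int firstAxisWithSizeGreaterThanOne(int[] shape) {
        for (int axis = 0; axis < shape.length; axis++) {
            if (shape[axis] > 1) {
                return axis;
            }
        }
        // Behaviour of this sketch when every axis has size 1.
        throw new IllegalArgumentException("no axis with size greater than 1 to attach labels to");
    }

    /** Requires exactly one label per element along the labelled axis. */
    static void check(List<String> labels, int[] shape) {
        int axis = firstAxisWithSizeGreaterThanOne(shape);
        if (shape[axis] != labels.size()) {
            throw new IllegalArgumentException(
                "Label number " + labels.size() + " mismatch the shape on axis " + axis);
        }
    }
}
```

With `check(List.of("person"), new int[] {1, 25200, 6})` (the label `person` is hypothetical) the sketch throws `Label number 1 mismatch the shape on axis 1`, the same message as in the trace, whereas a classification-style output of shape `[1, 3]` with three labels passes. So `getOutputAsCategoryList` appears to fit only an output holding one score per label, which a raw YOLOv5 detection output is not.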

The exception I get when integrating “myFirstModel.tflite” into the sample project from the documentation:

Error getting native address of native library: task_vision_jni

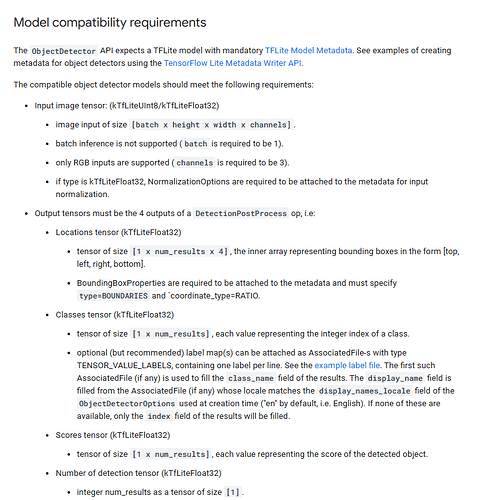

    java.lang.RuntimeException: Error occurred when initializing ObjectDetector: Input tensor has type kTfLiteFloat32: it requires specifying NormalizationOptions metadata to preprocess input images.
        at org.tensorflow.lite.task.vision.detector.ObjectDetector.initJniWithModelFdAndOptions(Native Method)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector.access$000(ObjectDetector.java:88)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector$1.createHandle(ObjectDetector.java:156)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector$1.createHandle(ObjectDetector.java:149)
        at org.tensorflow.lite.task.core.TaskJniUtils$1.createHandle(TaskJniUtils.java:70)
        at org.tensorflow.lite.task.core.TaskJniUtils.createHandleFromLibrary(TaskJniUtils.java:91)
        at org.tensorflow.lite.task.core.TaskJniUtils.createHandleFromFdAndOptions(TaskJniUtils.java:66)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector.createFromFileAndOptions(ObjectDetector.java:147)
        at org.tensorflow.lite.examples.objectdetection.ObjectDetectorHelper.setupObjectDetector(ObjectDetectorHelper.kt:96)
        at org.tensorflow.lite.examples.objectdetection.ObjectDetectorHelper.detect(ObjectDetectorHelper.kt:107)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment.detectObjects(CameraFragment.kt:289)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment.bindCameraUseCases$lambda-9$lambda-8(CameraFragment.kt:264)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment.$r8$lambda$trA1WWYM4Jg8atYGW5F6kpxoOW8(CameraFragment.kt)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment$$ExternalSyntheticLambda7.analyze(D8$$SyntheticClass)
        at androidx.camera.core.ImageAnalysis.lambda$setAnalyzer$2(ImageAnalysis.java:476)
        at androidx.camera.core.ImageAnalysis$$ExternalSyntheticLambda0.analyze(D8$$SyntheticClass)
        at androidx.camera.core.ImageAnalysisAbstractAnalyzer.lambda$analyzeImage$0$androidx-camera-core-ImageAnalysisAbstractAnalyzer(ImageAnalysisAbstractAnalyzer.java:283)
        at androidx.camera.core.ImageAnalysisAbstractAnalyzer$$ExternalSyntheticLambda1.run(D8$$SyntheticClass)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1133)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:607)
        at java.lang.Thread.run(Thread.java:761)
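
For context, the Task library's `ObjectDetector` refuses a Float32 input tensor unless the model metadata says how to turn pixel bytes into floats. Per the error, that information lives in `NormalizationOptions`, which give a mean and a standard deviation so that each channel becomes `(value - mean) / std`. The plain-Java sketch below only shows that arithmetic for packed ARGB pixels; it does not write metadata. The values `mean = 0` and `std = 255` are an assumption (they scale pixels to [0, 1], the range YOLOv5 is commonly fed); the correct values depend on how the model was trained and exported. Note also that the Task library's object detector documentation lists four SSD-style output tensors (locations, classes, scores, number of detections) among its model requirements, so a raw YOLOv5 output may still be rejected after normalization metadata is added.

```java
/** Sketch of per-channel (value - mean) / std normalization, as NormalizationOptions describe it. */
final class PixelNormalizer {

    /**
     * Converts packed ARGB pixels (the format android.graphics.Bitmap#getPixels returns)
     * into an interleaved RGB float array, applying (value - mean) / std to each channel.
     * The alpha channel is dropped.
     */
    static float[] normalizeArgb(int[] argbPixels, float mean, float std) {
        float[] out = new float[argbPixels.length * 3];
        for (int i = 0; i < argbPixels.length; i++) {
            int p = argbPixels[i];
            int r = (p >> 16) & 0xFF;
            int g = (p >> 8) & 0xFF;
            int b = p & 0xFF;
            out[3 * i] = (r - mean) / std;
            out[3 * i + 1] = (g - mean) / std;
            out[3 * i + 2] = (b - mean) / std;
        }
        return out;
    }
}
```

With `mean = 0, std = 255`, a pure red pixel `0xFFFF0000` becomes `[1.0, 0.0, 0.0]`; with `mean = 127.5, std = 127.5` (another common choice) the same pixel becomes `[1.0, -1.0, -1.0]`.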

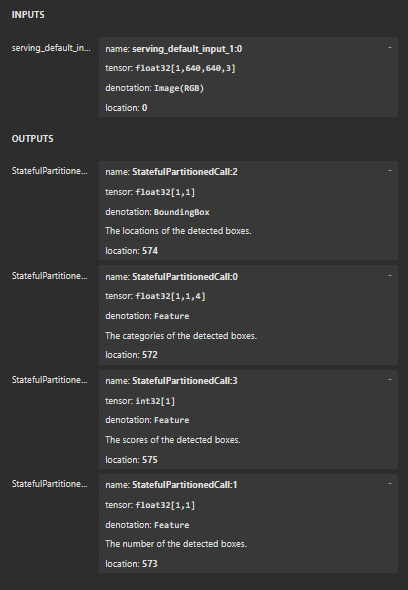

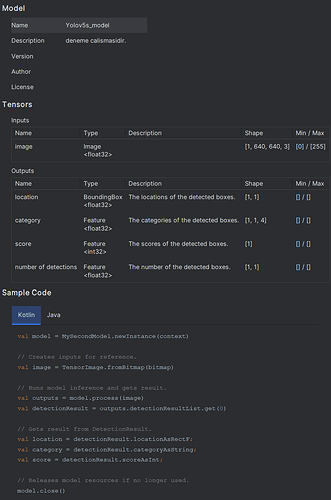

mySecondModel

When I add ‘mySecondModel’ to the same project and use the sample code that Android Studio suggests, the project fails to compile. When I add ‘mySecondModel’ to the sample project from the documentation, the app throws an exception.

In addition, because I could run the “mobilenetv1” model, I told my team “I can run a similar model”, and they gave me “mySecondModel”. The only difference between these two models is that one of the outputs of “mySecondModel” is of type “Int32”. We have not been able to convert this “Int32” output to “float32”. I hope that converting it to “float32” would solve the problem, but this is where we are stuck. If you have any suggestions on this, we would be glad to hear them.

—

The compile error I get when integrating “mySecondModel.tflite” into the project where I integrated “mobilenetv1.tflite”:

Type mismatch: inferred type is Triple<RectF, String, TensorBuffer> but Triple<RectF, String, Int> was expected
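
For context, the compiler message suggests the blocker is not the Int32 type itself: the third element of the `Triple` is being built from a whole `TensorBuffer`, while an `Int` per detection is expected. Whatever that Int32 output holds (the post does not say; a per-detection class index is assumed here), its flat values can be used as ints directly, with no conversion to Float32. The plain-Java sketch below pairs each box with its class index and label, using a hypothetical `Detection` class as a stand-in for `Triple<RectF, String, Int>` (Java has no `Triple`). Obtaining the flat `int[]` from the wrapper's buffer (for example via `TensorBuffer.getIntArray()`) and the 4-floats-per-box layout are assumptions and are not shown.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical plain-Java stand-in for Kotlin's Triple<RectF, String, Int>. */
final class Detection {
    final float left, top, right, bottom; // box corners, in whatever units the model emits
    final String label;
    final int classId;

    Detection(float left, float top, float right, float bottom, String label, int classId) {
        this.left = left;
        this.top = top;
        this.right = right;
        this.bottom = bottom;
        this.label = label;
        this.classId = classId;
    }
}

final class DetectionAssembler {

    /**
     * boxes: 4 floats per detection (left, top, right, bottom); assumed layout.
     * classIds: the raw Int32 values, one per detection; assumed to be class indices.
     */
    static List<Detection> assemble(float[] boxes, int[] classIds, List<String> labels) {
        if (boxes.length != classIds.length * 4) {
            throw new IllegalArgumentException("expected 4 box values per class id");
        }
        List<Detection> result = new ArrayList<>();
        for (int i = 0; i < classIds.length; i++) {
            int id = classIds[i]; // used as an int directly; no Float32 conversion involved
            String label = (id >= 0 && id < labels.size()) ? labels.get(id) : "unknown";
            result.add(new Detection(
                boxes[4 * i], boxes[4 * i + 1], boxes[4 * i + 2], boxes[4 * i + 3], label, id));
        }
        return result;
    }
}
```

If a float is still wanted somewhere, a plain `(float) id` cast is exact for every int whose magnitude is at most 2^24, which covers class indices and detection counts.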

The exception I get when integrating “mySecondModel.tflite” into the sample project from the documentation:

    16:06:58.187 tflite E Select TensorFlow op(s), included in the given model, is(are) not supported by this interpreter. Make sure you apply/link the Flex delegate before inference. For the Android, it can be resolved by adding "org.tensorflow:tensorflow-lite-select-tf-ops" dependency. See instructions: https://www.tensorflow.org/lite/guide/ops_select
    16:06:58.187 tflite E Node number 132 (FlexTensorListReserve) failed to prepare.
    16:06:58.188 tflite E Select TensorFlow op(s), included in the given model, is(are) not supported by this interpreter. Make sure you apply/link the Flex delegate before inference. For the Android, it can be resolved by adding "org.tensorflow:tensorflow-lite-select-tf-ops" dependency. See instructions: https://www.tensorflow.org/lite/guide/ops_select
    16:06:58.188 tflite E Node number 132 (FlexTensorListReserve) failed to prepare.
    16:06:58.191 Task...tils E Error getting native address of native library: task_vision_jni
    java.lang.IllegalStateException: Error occurred when initializing ObjectDetector: AllocateTensors() failed.
        at org.tensorflow.lite.task.vision.detector.ObjectDetector.initJniWithModelFdAndOptions(Native Method)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector.access$000(ObjectDetector.java:88)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector$1.createHandle(ObjectDetector.java:156)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector$1.createHandle(ObjectDetector.java:149)
        at org.tensorflow.lite.task.core.TaskJniUtils$1.createHandle(TaskJniUtils.java:70)
        at org.tensorflow.lite.task.core.TaskJniUtils.createHandleFromLibrary(TaskJniUtils.java:91)
        at org.tensorflow.lite.task.core.TaskJniUtils.createHandleFromFdAndOptions(TaskJniUtils.java:66)
        at org.tensorflow.lite.task.vision.detector.ObjectDetector.createFromFileAndOptions(ObjectDetector.java:147)
        at org.tensorflow.lite.examples.objectdetection.ObjectDetectorHelper.setupObjectDetector(ObjectDetectorHelper.kt:96)
        at org.tensorflow.lite.examples.objectdetection.ObjectDetectorHelper.detect(ObjectDetectorHelper.kt:107)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment.detectObjects(CameraFragment.kt:289)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment.bindCameraUseCases$lambda-9$lambda-8(CameraFragment.kt:264)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment.$r8$lambda$trA1WWYM4Jg8atYGW5F6kpxoOW8(CameraFragment.kt)
        at org.tensorflow.lite.examples.objectdetection.fragments.CameraFragment$$ExternalSyntheticLambda7.analyze(D8$$SyntheticClass)
        at androidx.camera.core.ImageAnalysis.lambda$setAnalyzer$2(ImageAnalysis.java:476)
        at androidx.camera.core.ImageAnalysis$$ExternalSyntheticLambda0.analyze(D8$$SyntheticClass)
        at androidx.camera.core.ImageAnalysisAbstractAnalyzer.lambda$analyzeImage$0$androidx-camera-core-ImageAnalysisAbstractAnalyzer(ImageAnalysisAbstractAnalyzer.java:283)
        at androidx.camera.core.ImageAnalysisAbstractAnalyzer$$ExternalSyntheticLambda1.run(D8$$SyntheticClass)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1133)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:607)
        at java.lang.Thread.run(Thread.java:761)

How can I successfully integrate either of these two models? I have searched for these exceptions but have not yet found a working solution, and I have not found a Kotlin sample project that uses a YOLOv5 model converted to TensorFlow Lite.
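
For reference, when a YOLOv5 export is run directly with `org.tensorflow.lite.Interpreter` instead of through the Task library, its raw output usually needs post-processing in app code. Below is a minimal plain-Java sketch of that step under stated assumptions: each candidate row is `[cx, cy, w, h, objectness, classScore0, classScore1, ...]` and the whole output has been flattened into one `float[]` (how that array is filled is not shown). The score is objectness times the best class score, followed by class-aware greedy non-maximum suppression. The class name `YoloDecoder`, the row layout and the thresholds are assumptions to check against the actual model; box units (pixels or 0 to 1) are passed through unchanged.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Sketch of YOLOv5-style output decoding plus greedy NMS; the row layout is an assumption. */
final class YoloDecoder {

    /** One decoded detection: corner coordinates, combined score and class index. */
    static final class Box {
        final float x1, y1, x2, y2, score;
        final int classId;

        Box(float x1, float y1, float x2, float y2, float score, int classId) {
            this.x1 = x1;
            this.y1 = y1;
            this.x2 = x2;
            this.y2 = y2;
            this.score = score;
            this.classId = classId;
        }
    }

    /** output: flattened rows of [cx, cy, w, h, objectness, numClasses class scores]. */
    static List<Box> decode(float[] output, int numClasses, float scoreThreshold, float iouThreshold) {
        int rowSize = 5 + numClasses;
        if (numClasses < 1 || output.length % rowSize != 0) {
            throw new IllegalArgumentException("output length must be a multiple of 5 + numClasses");
        }
        List<Box> candidates = new ArrayList<>();
        for (int o = 0; o < output.length; o += rowSize) {
            // Pick the class with the highest score in this row.
            int best = 0;
            for (int c = 1; c < numClasses; c++) {
                if (output[o + 5 + c] > output[o + 5 + best]) {
                    best = c;
                }
            }
            float score = output[o + 4] * output[o + 5 + best];
            if (score < scoreThreshold) {
                continue;
            }
            // Convert centre/size to corner coordinates.
            float cx = output[o], cy = output[o + 1];
            float halfW = output[o + 2] / 2f, halfH = output[o + 3] / 2f;
            candidates.add(new Box(cx - halfW, cy - halfH, cx + halfW, cy + halfH, score, best));
        }
        return nms(candidates, iouThreshold);
    }

    /** Keeps the highest-scoring box and drops same-class boxes that overlap a kept one too much. */
    static List<Box> nms(List<Box> boxes, float iouThreshold) {
        List<Box> sorted = new ArrayList<>(boxes);
        sorted.sort(Comparator.comparingDouble((Box b) -> b.score).reversed());
        List<Box> kept = new ArrayList<>();
        for (Box candidate : sorted) {
            boolean suppressed = false;
            for (Box k : kept) {
                if (k.classId == candidate.classId && iou(k, candidate) > iouThreshold) {
                    suppressed = true;
                    break;
                }
            }
            if (!suppressed) {
                kept.add(candidate);
            }
        }
        return kept;
    }

    /** Intersection over union of two corner-format boxes. */
    static float iou(Box a, Box b) {
        float ix = Math.max(0f, Math.min(a.x2, b.x2) - Math.max(a.x1, b.x1));
        float iy = Math.max(0f, Math.min(a.y2, b.y2) - Math.max(a.y1, b.y1));
        float inter = ix * iy;
        float union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
        return union <= 0f ? 0f : inter / union;
    }
}
```

Suppression is done per class here, so two overlapping boxes with different labels both survive; a single-class model behaves the same either way.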

Best regards.