Hi,

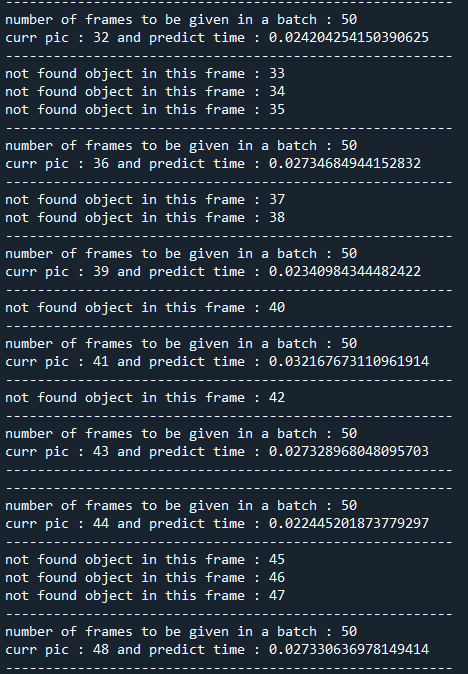

I am working on a real-time project using an AlexNet model. Frames arrive at a constant FPS, and I need a prediction result for each frame. The number of objects in each frame is not fixed, so I crop the objects out of each frame and pass them to the trained model as one batch, as in the screenshot above.

GPU inference time is as expected when every frame contains the same number of objects, as in the screenshot above. But when the number of objects differs from frame to frame, GPU inference takes far too long.

As far as I understand, the parallel processes reconfigure themselves for each different batch size. I can show you a screenshot of it below:

Times between 0.005 ms and 0.040 ms are normal for me; values outside that range are not.
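To check whether the slow calls really line up with changes in batch size, I could group the measured times by batch size. This is only a rough sketch: `time_calls_by_batch_size` and `run_inference` are hypothetical names, and `run_inference` stands in for whatever wraps the `sess.run` call.

```python
import time
from collections import defaultdict


def time_calls_by_batch_size(run_inference, batches):
    """Time each inference call and group the results by batch size.

    run_inference: hypothetical callable wrapping sess.run(...) for one batch.
    batches: iterable of batches (anything that supports len()).
    Returns {batch_size: [seconds per call, in call order]}.
    """
    timings = defaultdict(list)
    for batch in batches:
        start = time.perf_counter()
        run_inference(batch)
        timings[len(batch)].append(time.perf_counter() - start)
    return dict(timings)
```

If the first entry for each batch size is much larger than the later ones, that would suggest the extra time is one-off setup per input shape rather than the inference itself.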

input_batch = tf.compat.v1.placeholder(dtype=tf.float32, shape=[None, 227, 227, 1], name='input__batch')

test_acc_step= sess.run('import/prediction_argmax_model:0', feed_dict={'import/x:0': input_static_batch_size_images})

With this usage, I am feeding a batch of fixed size through the session's feed_dict.

Can I declare the batch dimension with a static size instead of None and still feed a batch of a different size in each iteration? Or is there another way to do this?
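One workaround I am considering is to pad every batch up to a fixed maximum size, so the model always sees the same input shape, and then discard the predictions for the padding rows. A minimal sketch, assuming the slowdown comes from the changing batch shape (not verified); `pad_batch` and `MAX_BATCH` are hypothetical names, and the value 16 is only a placeholder for the largest object count I expect per frame.

```python
import numpy as np

MAX_BATCH = 16  # assumed upper bound on objects per frame (placeholder value)


def pad_batch(crops, max_batch=MAX_BATCH, crop_shape=(227, 227, 1)):
    """Zero-pad a variable-size batch of crops to a fixed batch size.

    Returns the padded float32 array of shape (max_batch, *crop_shape)
    and the number of real crops it contains.
    """
    n = len(crops)
    if n > max_batch:
        raise ValueError(f"got {n} crops, but max_batch is {max_batch}")
    padded = np.zeros((max_batch,) + tuple(crop_shape), dtype=np.float32)
    if n:
        padded[:n] = np.asarray(crops, dtype=np.float32)
    return padded, n


# Intended use with the session call from this post (not run here):
# padded, n = pad_batch(crops)
# preds = sess.run('import/prediction_argmax_model:0',
#                  feed_dict={'import/x:0': padded})
# preds = preds[:n]  # keep only the predictions for real objects
```

The cost is that every call processes `MAX_BATCH` images even when the frame has fewer objects, so the bound should be kept as small as the data allows.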