Hi @Vigneswaran, I have gone through your data values, which are obtained by stacking the signals with `data = tf.stack([a, b, c, d], -1)`.

The values look like this:

```
[[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00],
 [1.00460220e-01, 1.00333406e-02, 7.53689538e-02, 2.48620990e-01],
 [2.00666812e-01, 1.99654222e-02, 1.50690330e-01, 4.81629004e-01],
 ...
```

In each inner list, index 0 holds the value of a, index 1 the value of b, index 2 the value of c, and index 3 the value of d.

For example, `data.numpy()[1]` is `array([0.10046022, 0.01003334, 0.07536895, 0.24862099])`, which holds the values of a, b, c and d at index 1.

If you take the maximum of this row, you get 0.24862099, which is the value of d at index 1. Likewise, many rows contain negative values, and for those rows the maximum tends to be b's value, because b (amplitude 0.1) stays closest to zero. So if you plot these row-wise maxima, the graph will not look like the c graph.
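To see this concretely, here is a minimal sketch that rebuilds the same sample signals the answer defines below, using NumPy's `np.linspace`/`np.stack`/`np.sin` in place of the `tf` calls (an assumption for illustration). It takes the row-wise maximum and records which wave each maximum comes from.

```python
import numpy as np

# Same four sample signals as in the answer, built with NumPy instead of TensorFlow
t = np.linspace(0.0, 10.0, 200)
a = 2 * np.sin(t)
b = 0.1 * np.sin(2 * t)
c = 3 * np.sin(0.5 * t)
d = 1 * np.sin(5 * t)

# Shape (200, 4): column 0 is a, 1 is b, 2 is c, 3 is d
data = np.stack([a, b, c, d], -1)

# Row-wise maximum and the column (wave) each maximum was taken from
row_max = np.max(data, axis=-1)
row_source = np.argmax(data, axis=-1)

print(row_max[1], row_source[1])   # the maximum at index 1 comes from d (column 3)
print(np.unique(row_source))       # maxima come from more than one wave
print(np.allclose(row_max, c))     # the row-wise maximum is not the c wave
```

Because `row_source` changes from row to row, the row-wise maximum stitches together pieces of different waves instead of following a single one.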

If you want the values of the maximum amplitude wave, taking the row-wise maximum will not work. One way is to first find which wave has the maximum amplitude and then read out that wave's values. Start by stacking and reshaping the signals:

import tensorflow as tf

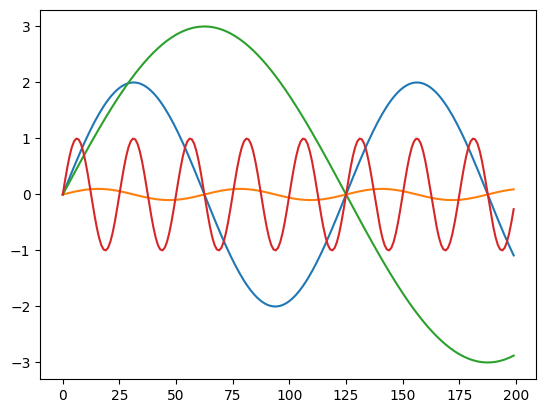

# Sample four sin signals

a = 2 * tf.math.sin(tf.linspace(0, 10, 200))

b = 0.1 * tf.math.sin(2 * tf.linspace(0, 10, 200))

c = 3 * tf.math.sin(0.5 * tf.linspace(0, 10, 200))

d = 1 * tf.math.sin(5 * tf.linspace(0, 10, 200))

# Stack the signals

signal_stack = tf.stack([a, b, c, d], -1)

resize_signal_stack = tf.reshape(signal_stack, [1, 200, 2, 2, 1])

resize_numpy_stack=resize_signal_stack.numpy()

Then find the wave with the maximum amplitude:

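```python
import numpy as np

# Flat index of the largest value, unravelled into (time_step, wave) coordinates;
# [1] keeps the wave (column) index
max_value_indices = np.unravel_index(np.argmax(resize_numpy_stack), signal_stack.shape)[1]

# max_value_indices is 2 here
```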

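`max_value_indices` is the zero-based column of the wave that contains the largest value, so `max_value_indices + 1` tells you which wave has the maximum amplitude: here 3, i.e. the third wave, c.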
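The reshape is not strictly needed for this step. The minimal sketch below (again rebuilding the sample signals with NumPy instead of TensorFlow, an assumption for illustration) applies `np.unravel_index` to the flat `argmax` of the `(200, 4)` stack directly. It also shows an alternative that compares each wave's peak absolute value, which would also count large negative swings as amplitude.

```python
import numpy as np

t = np.linspace(0.0, 10.0, 200)
signal_stack = np.stack([2 * np.sin(t),
                         0.1 * np.sin(2 * t),
                         3 * np.sin(0.5 * t),
                         1 * np.sin(5 * t)], -1)   # shape (200, 4)

# Flat argmax -> (time_step, wave) coordinates in the (200, 4) array
time_step, wave_index = np.unravel_index(np.argmax(signal_stack), signal_stack.shape)

# Alternative: peak absolute value of each wave, then the wave with the largest peak
peak_per_wave = np.max(np.abs(signal_stack), axis=0)
abs_wave_index = int(np.argmax(peak_per_wave))

print(wave_index, abs_wave_index)   # both point at column 2, i.e. wave c
```

For these sample signals both variants agree. They can differ when a wave's largest excursion is negative.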

Now collect the values of the maximum amplitude wave:

new_array=[]

for i in range(0,200):

new_array.append(resize_numpy_stack[0][i][1][0][0])

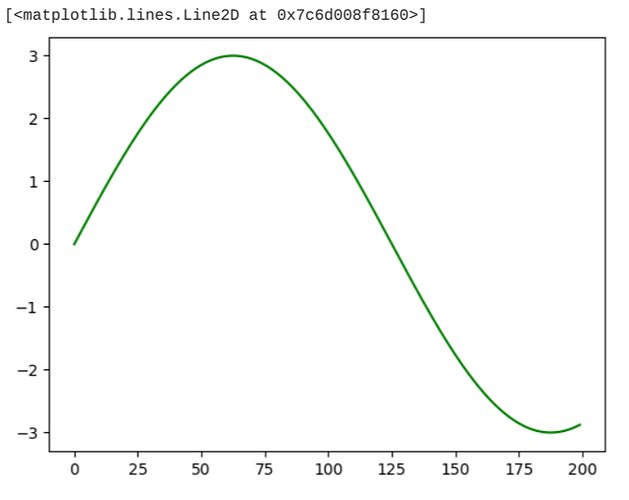

if you plot this new_array it will be

plt.plot(new_array, color='green')

which will be the same as c plot.
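Since column `i` of the stacked array is wave `i`, the loop over the reshaped array is equivalent to slicing a single column of the original `(200, 4)` stack. Here is a minimal sketch of that check, using NumPy in place of TensorFlow (an assumption for illustration):

```python
import numpy as np

t = np.linspace(0.0, 10.0, 200)
c = 3 * np.sin(0.5 * t)
signal_stack = np.stack([2 * np.sin(t), 0.1 * np.sin(2 * t), c, np.sin(5 * t)], -1)
resize_numpy_stack = signal_stack.reshape(1, 200, 2, 2, 1)

# Loop version from the answer: [1][0] picks column 2 in the (2, 2) layout
new_array = [resize_numpy_stack[0][i][1][0][0] for i in range(200)]

# Equivalent: slice the wave's column directly, no reshape or hand-picked indices
max_value_indices = 2
column = signal_stack[:, max_value_indices]

print(np.array_equal(new_array, column), np.array_equal(column, c))
```

The same slice, `signal_stack[:, int(max_value_indices)]`, can also be applied to the TensorFlow tensor, so the indexing no longer has to change when the waves are reordered.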

Note: The indexing in `new_array.append(resize_numpy_stack[0][i][1][0][0])` depends on the value of `max_value_indices`. For example, suppose the waves are defined like this, with the amplitude-3 wave now stored in d:

```python
a = 2 * tf.math.sin(tf.linspace(0.0, 10.0, 200))
b = 0.1 * tf.math.sin(2 * tf.linspace(0.0, 10.0, 200))
d = 3 * tf.math.sin(0.5 * tf.linspace(0.0, 10.0, 200))
c = 1 * tf.math.sin(5 * tf.linspace(0.0, 10.0, 200))
```

After stacking and reshaping as before, `max_value_indices` is 3 (wave d), and the indexing becomes:

```python
new_array = []
for i in range(0, 200):
    # [1][1] selects column 3 (wave d) of the (2, 2) reshaped layout
    new_array.append(resize_numpy_stack[0][i][1][1][0])
```
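To avoid editing the indices by hand, the position inside the `(1, 200, 2, 2, 1)` array can be computed from `max_value_indices`. With row-major reshaping, element `[0, i, j, k, 0]` is `signal_stack[i, 2*j + k]`, so `j = max_value_indices // 2` and `k = max_value_indices % 2`. Here is a minimal sketch. The helper name `extract_wave` is hypothetical, and NumPy stands in for TensorFlow:

```python
import numpy as np

def extract_wave(resize_numpy_stack, max_value_indices):
    """Hypothetical helper: read one wave out of a (1, T, 2, 2, 1) array."""
    # Column index 2*j + k of the (T, 4) stack maps to [j][k] in the (2, 2) block
    j, k = divmod(int(max_value_indices), 2)
    return [resize_numpy_stack[0][i][j][k][0] for i in range(resize_numpy_stack.shape[1])]

t = np.linspace(0.0, 10.0, 200)
# Second example from the answer: the amplitude-3 wave is now d (column 3)
signal_stack = np.stack([2 * np.sin(t), 0.1 * np.sin(2 * t),
                         np.sin(5 * t), 3 * np.sin(0.5 * t)], -1)
resize_numpy_stack = signal_stack.reshape(1, 200, 2, 2, 1)

max_value_indices = np.unravel_index(np.argmax(resize_numpy_stack), signal_stack.shape)[1]
new_array = extract_wave(resize_numpy_stack, max_value_indices)

print(max_value_indices)
print(np.array_equal(new_array, signal_stack[:, max_value_indices]))
```

With this ordering the helper resolves to `[1][1][0]`. With the original ordering (`max_value_indices == 2`) it resolves to `[1][0][0]`, matching both hand-written loops.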

Please refer to this gist for working code. Thank you.
