Good point @lgusm

Let’s take a look at how labelImg and Google Vision exports formats as they are the two main image labelling dataset formats used in the Model Maker tutorials.

labelImg exports the YOLO, Pascal VOC and CreateML formats, while Google Vision exports VisionML (.csv) format (as used in the Salad Object Detection Tutorial).

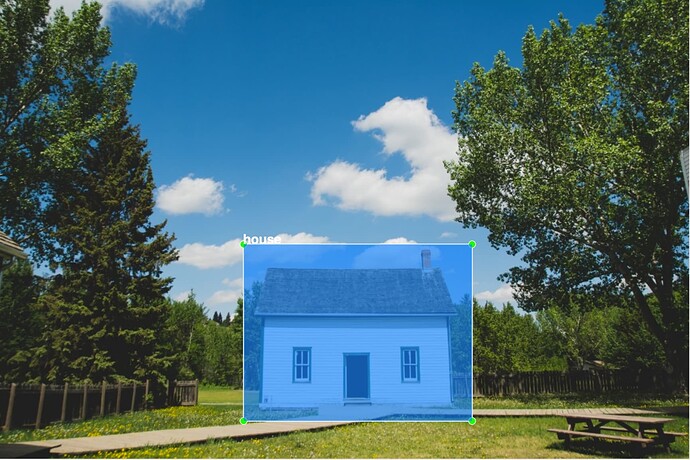

Below are some examples of the formats for the image below:

YOLO (labelImg)

0 0.518519 0.724306 0.331481 0.384722

has a labels.txt file associated with it.

Google Vision (Google)

TEST,gs://data_images_1000/automl/house.jpg,house,0.36296296,0.52698416,0.66772485,0.52698416,0.66772485,0.9,0.36296296,0.9

images are annotated and labelled inside Google Vision dashboard.

Pascal VOC (labelImg)

<annotation>

<folder>Images</folder>

<filename>house.jpg</filename>

<path>/Users/username/images/house.jpg</path>

<source>

<database>Unknown</database>

</source>

<size>

<width>1080</width>

<height>720</height>

<depth>3</depth>

</size>

<segmented>0</segmented>

<object>

<name>house</name>

<pose>Unspecified</pose>

<truncated>0</truncated>

<difficult>0</difficult>

<bndbox>

<xmin>381</xmin>

<ymin>383</ymin>

<xmax>739</xmax>

<ymax>660</ymax>

</bndbox>

</object>

</annotation>

Can be used with Model Maker as used in Object Detection for Android Figurine Tutorial.

CreateML (labelImg)

[{"image": "house.jpg", "annotations": [{"label": "house", "coordinates": {"x": 560.8333333333333, "y": 522.2083333333333, "width": 357.99999999999994, "height": 277.0}}]}]

Some Key Points to Note

From what I can tell Model Maker can use both Google Vision format (.csv) and Pascal VOC (.xml) with its DataLoader.

Here is the code to use the Google Vision format:

train_data, validation_data, test_data = object_detector.DataLoader.from_csv('gs://some_google_bucket/dataset.csv')

Here is the code to use the Pascal VOC format:

train_data = object_detector.DataLoader.from_pascal_voc(

'android_figurine/train',

'android_figurine/train',

['android', 'pig_android']

)

val_data = object_detector.DataLoader.from_pascal_voc(

'android_figurine/validate',

'android_figurine/validate',

['android', 'pig_android']

)

It is important to note while YOLO coordinates do look very similar to the Google Vision coordinates (ie both being between 0 and 1) they are different.

A final note, from personal experience I’ve found the Model Maker DataLoader to be much quicker with Pascal VOC format when using larger datasets (~17k images or so). The Google Storage Bucket of images and .xml files are a bit harder to keep track of, but the DataLoader feels much quicker, at least to me.

Also, don’t forget to set the max instances per image hyper parameter to accept more multiple instances if you have images with multiple bounding boxes. I kept getting errors on this and it took me a while to figure out.

spec = object_detector.EfficientDetSpec(

model_name='efficientdet-lite2',

uri='https://tfhub.dev/tensorflow/efficientdet/lite2/feature-vector/1',

hparams={'max_instances_per_image': 8000})