Hi, I am using tflite model maker to create my own custom models. I am using efficientdet_lite0 as my model spec.

I noticed that the ssd_mobileNet.tflite model takes a 300x300 input image and returns 10 results. My custom model, based on efficientdet_lite0, takes a 320x320 image and returns 25 results.

I do not need so many results. May I ask how I can modify the input image size and the number of outputs when using Model Maker to train a custom model?

More importantly, with a smaller input image and fewer results, will my model run inference faster?

Modifying the input image size and the number of output detections in a TensorFlow Lite model can indeed impact inference speed. Here’s how you can make these modifications using TensorFlow Lite Model Maker with EfficientDet Lite models, and the implications of these changes:

Modifying Input Size and Number of Outputs:

- Input Image Size: EfficientDet Lite models have predefined input sizes (like 320x320 for efficientdet_lite0). To change this, you would typically need to retrain the model with your desired input size. However, EfficientDet Lite’s architecture is optimized for specific resolutions, and arbitrary changes might not be straightforward and could require significant alterations in the model architecture.

- Number of Output Detections: You can limit the number of output detections by modifying the post-processing parameters. When using TensorFlow Lite Model Maker, you can set the max_output_size parameter in the spec.config to control the maximum number of detections.

    from tflite_model_maker.config import QuantizationConfig
    from tflite_model_maker.config import ExportFormat
    from tflite_model_maker import model_spec
    from tflite_model_maker import object_detector

    # Load the EfficientDet Lite0 model spec and modify its configuration
    spec = model_spec.get('efficientdet_lite0')
    spec.config.max_output_size = 10  # Set the maximum number of output detections

    # Continue with your training pipeline...

Impact on Inference Speed:

- Smaller Input Size: Reducing the input size can lead to faster inference times since the model processes fewer pixels. However, this might also reduce the accuracy, especially if the model architecture is optimized for a specific input size.

- Fewer Output Detections: Limiting the number of output detections reduces the computational burden during post-processing (like non-maximum suppression). This can slightly improve inference speed, particularly on resource-constrained devices.
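
Because the actual gain depends on the model and the device, it may be easiest to measure it. The following is a minimal sketch, not part of Model Maker or TFLite: `benchmark`, `pixel_ratio` and `run_inference` are hypothetical names, and `run_inference` is assumed to be a zero-argument function that you write yourself to invoke your interpreter once.

```python
import statistics
import time


def benchmark(run_inference, warmup=3, runs=20):
    """Return the median latency (ms) of a zero-argument callable."""
    # Warm-up calls are not timed, so one-off setup costs are excluded.
    for _ in range(warmup):
        run_inference()
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings_ms)


def pixel_ratio(size_a, size_b):
    """Ratio of pixel counts between two square input sizes."""
    return (size_a * size_a) / (size_b * size_b)


if __name__ == "__main__":
    # Placeholder workload (assumption): replace with your interpreter call.
    def dummy_inference():
        return sum(range(10_000))

    print(f"median latency: {benchmark(dummy_inference):.3f} ms")
    # 320x320 vs 300x300 is only about a 14% difference in pixel count.
    print(f"pixel ratio: {pixel_ratio(320, 300):.2f}")
```

Running the same helper against both exported models on the target device should show whether the smaller input or the shorter output list makes a measurable difference in your case.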

Considerations:

- Model Accuracy: Be aware that changing the input size and reducing the number of outputs may affect the model’s accuracy. It’s important to validate the modified model to ensure it still meets your accuracy requirements.

- Re-Training: If you decide to change the input size to a dimension not natively supported by the EfficientDet Lite models, you will need to retrain the model from scratch, which can be a complex and time-consuming process.

- Device Capabilities: The extent of speed improvement also depends on the capabilities of the device running the inference. Some devices might handle certain input sizes more efficiently due to hardware optimizations.

In summary, while you can modify the number of output detections quite easily to potentially gain some speed improvements, changing the input size of EfficientDet Lite models is more involved and might require significant architectural changes and retraining. Always validate the performance and accuracy of the modified model to ensure it meets your needs.

Hi @Tim_Wolfe,

I tried to limit my detection output to 12 with the configuration:

spec.config.max_output_size=12

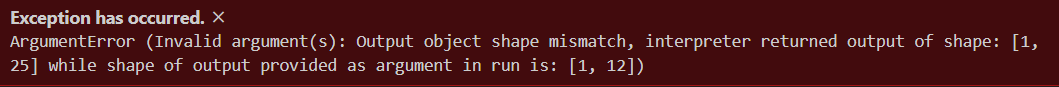

However, when I tried to run this model, the interpreter still has an output shape of [1,25], and indeed there are still 25 detections.

Is there any way I can reduce it to 12?
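
If the exported model keeps returning 25 detections, one workaround is to trim the results after inference rather than in the model. The following is a minimal sketch, assuming the interpreter outputs have already been copied into plain Python lists; `keep_top_k` and its parameters are illustrative names, not a TFLite or Model Maker API.

```python
def keep_top_k(boxes, classes, scores, k=12, min_score=0.0):
    """Keep at most k detections, ordered by descending score."""
    # Sort indices by score so the result is correct even if the
    # model's outputs are not already ordered.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    kept = [i for i in order if scores[i] >= min_score][:k]
    return (
        [boxes[i] for i in kept],
        [classes[i] for i in kept],
        [scores[i] for i in kept],
    )


if __name__ == "__main__":
    # 25 made-up detections standing in for the interpreter outputs.
    scores = [i / 25 for i in range(25)]
    boxes = [[0.0, 0.0, 1.0, 1.0] for _ in range(25)]
    classes = [i % 3 for i in range(25)]

    top_boxes, top_classes, top_scores = keep_top_k(boxes, classes, scores, k=12)
    print(len(top_scores))  # 12
```

This does not shrink the [1,25] output tensor or the on-device post-processing cost; it only limits what the application handles. Some Model Maker versions also appear to accept a `tflite_max_detections` argument on the EfficientDet spec, which may be worth checking in your installed version, though that is not verified here.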