I tried to train `ssd_resnet50_v1_fpn_640x640_coco17_tpu-8` on a custom dataset using the `model_main_tf2.py` script.

Label map:

```
item {
  name: "face"
  id: 1
  display_name: "face"
}
```
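The pipeline config below sets `num_classes: 1`, so the label map should contain exactly one item with `id: 1` (id 0 is reserved for background). A quick way to double-check this is a small script like the following. It is a minimal stdlib-only sketch that assumes the simple `item { ... }` layout shown above and the usual convention that ids run from 1 to `num_classes`; `label_map_ids` and `check_label_map` are hypothetical helpers, not part of the Object Detection API (which parses label maps with protobuf).

```python
import re


def label_map_ids(pbtxt: str) -> list:
    """Return the `id` of every `item { ... }` block, in file order.

    Hypothetical regex-based helper for quick sanity checks only; it assumes
    no nested braces inside an item.
    """
    ids = []
    for block in re.findall(r"item\s*\{(.*?)\}", pbtxt, flags=re.DOTALL):
        match = re.search(r"\bid\s*:\s*(\d+)", block)
        if match is None:
            raise ValueError(f"item without an id: {block.strip()!r}")
        ids.append(int(match.group(1)))
    return ids


def check_label_map(pbtxt: str, num_classes: int) -> None:
    """Raise ValueError unless the ids are exactly 1..num_classes."""
    ids = sorted(label_map_ids(pbtxt))
    expected = list(range(1, num_classes + 1))
    if ids != expected:
        raise ValueError(f"label map ids {ids} do not match expected {expected}")


LABEL_MAP = '''
item {
  name: "face"
  id: 1
  display_name: "face"
}
'''

check_label_map(LABEL_MAP, num_classes=1)  # passes silently for this label map
print(label_map_ids(LABEL_MAP))  # [1]
```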

pipeline.config:

```
model {
  ssd {
    num_classes: 1
    image_resizer {
      fixed_shape_resizer {
        height: 640
        width: 640
      }
    }
    feature_extractor {
      type: "ssd_resnet50_v1_fpn_keras"
      depth_multiplier: 1.0
      min_depth: 16
      conv_hyperparams {
        regularizer {
          l2_regularizer {
            weight: 0.00039999998989515007
          }
        }
        initializer {
          truncated_normal_initializer {
            mean: 0.0
            stddev: 0.029999999329447746
          }
        }
        activation: RELU_6
        batch_norm {
          decay: 0.996999979019165
          scale: true
          epsilon: 0.0010000000474974513
        }
      }
      override_base_feature_extractor_hyperparams: true
      fpn {
        min_level: 3
        max_level: 7
      }
    }
    box_coder {
      faster_rcnn_box_coder {
        y_scale: 10.0
        x_scale: 10.0
        height_scale: 5.0
        width_scale: 5.0
      }
    }
    matcher {
      argmax_matcher {
        matched_threshold: 0.5
        unmatched_threshold: 0.5
        ignore_thresholds: false
        negatives_lower_than_unmatched: true
        force_match_for_each_row: true
        use_matmul_gather: true
      }
    }
    similarity_calculator {
      iou_similarity {
      }
    }
    box_predictor {
      weight_shared_convolutional_box_predictor {
        conv_hyperparams {
          regularizer {
            l2_regularizer {
              weight: 0.00039999998989515007
            }
          }
          initializer {
            random_normal_initializer {
              mean: 0.0
              stddev: 0.009999999776482582
            }
          }
          activation: RELU_6
          batch_norm {
            decay: 0.996999979019165
            scale: true
            epsilon: 0.0010000000474974513
          }
        }
        depth: 256
        num_layers_before_predictor: 4
        kernel_size: 3
        class_prediction_bias_init: -4.599999904632568
      }
    }
    anchor_generator {
      multiscale_anchor_generator {
        min_level: 3
        max_level: 7
        anchor_scale: 4.0
        aspect_ratios: 1.0
        aspect_ratios: 2.0
        aspect_ratios: 0.5
        scales_per_octave: 2
      }
    }
    post_processing {
      batch_non_max_suppression {
        score_threshold: 9.99999993922529e-09
        iou_threshold: 0.6000000238418579
        max_detections_per_class: 100
        max_total_detections: 100
        use_static_shapes: false
      }
      score_converter: SIGMOID
    }
    normalize_loss_by_num_matches: true
    loss {
      localization_loss {
        weighted_smooth_l1 {
        }
      }
      classification_loss {
        weighted_sigmoid_focal {
          gamma: 2.0
          alpha: 0.25
        }
      }
      classification_weight: 1.0
      localization_weight: 1.0
    }
    encode_background_as_zeros: true
    normalize_loc_loss_by_codesize: true
    inplace_batchnorm_update: true
    freeze_batchnorm: false
  }
}
train_config {
  batch_size: 16
  data_augmentation_options {
    random_horizontal_flip {
    }
  }
  data_augmentation_options {
    random_crop_image {
      min_object_covered: 0.0
      min_aspect_ratio: 0.75
      max_aspect_ratio: 3.0
      min_area: 0.75
      max_area: 1.0
      overlap_thresh: 0.0
    }
  }
  sync_replicas: true
  optimizer {
    momentum_optimizer {
      learning_rate {
        cosine_decay_learning_rate {
          learning_rate_base: 0.03999999910593033
          total_steps: 25000
          warmup_learning_rate: 0.013333000242710114
          warmup_steps: 2000
        }
      }
      momentum_optimizer_value: 0.8999999761581421
    }
    use_moving_average: false
  }
  fine_tune_checkpoint: "D:\\Vadim\\NIR\\nir\\face_detection\\resnet50\\checkpoint\\ckpt-0"
  num_steps: 5000
  startup_delay_steps: 0.0
  replicas_to_aggregate: 8
  max_number_of_boxes: 100
  unpad_groundtruth_tensors: false
  fine_tune_checkpoint_type: "detection"
  use_bfloat16: true
  fine_tune_checkpoint_version: V2
}
train_input_reader {
  label_map_path: "D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\label_map.pbtxt"
  tf_record_input_reader {
    input_path: "D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\train.record"
  }
}
eval_config {
  metrics_set: "coco_detection_metrics"
  use_moving_averages: false
}
eval_input_reader {
  label_map_path: "D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\label_map.pbtxt"
  shuffle: false
  num_epochs: 1
  tf_record_input_reader {
    input_path: "D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\val.record"
  }
}
```
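For reference, the `num_anchors` dimension that appears in the loss docstring further down follows from this config (640×640 input, FPN levels 3 to 7, three aspect ratios, two scales per octave). Below is a back-of-the-envelope sketch, assuming each FPN level `l` has stride `2**l` and a square feature map of `ceil(image_size / 2**l)` cells per side; `ssd_fpn_anchor_count` is a hypothetical helper, not a function of the Object Detection API.

```python
def ssd_fpn_anchor_count(image_size: int, min_level: int, max_level: int,
                         num_aspect_ratios: int, scales_per_octave: int) -> int:
    """Total anchors for a square input, assuming stride 2**level per FPN level."""
    # Every feature-map cell gets one anchor per (aspect ratio, scale) pair.
    anchors_per_cell = num_aspect_ratios * scales_per_octave
    total = 0
    for level in range(min_level, max_level + 1):
        stride = 2 ** level
        side = -(-image_size // stride)  # ceiling division
        total += side * side * anchors_per_cell
    return total


# Values taken from the pipeline.config above.
num_anchors = ssd_fpn_anchor_count(image_size=640, min_level=3, max_level=7,
                                   num_aspect_ratios=3, scales_per_octave=2)
print(num_anchors)  # 51150
```

Under these assumptions, both `prediction_tensor` and `target_tensor` should have 51150 entries along the anchor axis for every image. For 640 the ceiling does not matter, because 640 is divisible by every stride from 8 to 128.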

I am getting this error:

```
C:\Users\User\tfod\Scripts\python.exe D:/Vadim/NIR/nir/face_detection/models/research/object_detection/model_main_tf2.py --model_dir=D:\Vadim\NIR\nir\face_detection\resnet50 --pipeline_config_path=D:\Vadim\NIR\nir\face_detection\resnet50\pipeline.config --checkpoint_dir=D:\Vadim\NIR\nir\face_detection\resnet50\checkpoint --num_train_steps=5000
WARNING:tensorflow:Forced number of epochs for all eval validations to be 1.
W0423 02:20:21.550158 11500 model_lib_v2.py:1089] Forced number of epochs for all eval validations to be 1.
INFO:tensorflow:Maybe overwriting sample_1_of_n_eval_examples: None
I0423 02:20:21.551157 11500 config_util.py:552] Maybe overwriting sample_1_of_n_eval_examples: None
INFO:tensorflow:Maybe overwriting use_bfloat16: False
I0423 02:20:21.551157 11500 config_util.py:552] Maybe overwriting use_bfloat16: False
INFO:tensorflow:Maybe overwriting train_steps: 5000
I0423 02:20:21.551157 11500 config_util.py:552] Maybe overwriting train_steps: 5000
INFO:tensorflow:Maybe overwriting eval_num_epochs: 1
I0423 02:20:21.551157 11500 config_util.py:552] Maybe overwriting eval_num_epochs: 1
WARNING:tensorflow:Expected number of evaluation epochs is 1, but instead encountered `eval_on_train_input_config.num_epochs` = 0. Overwriting `num_epochs` to 1.
W0423 02:20:21.552157 11500 model_lib_v2.py:1107] Expected number of evaluation epochs is 1, but instead encountered `eval_on_train_input_config.num_epochs` = 0. Overwriting `num_epochs` to 1.
2022-04-23 02:20:21.556973: I tensorflow/core/platform/cpu_feature_guard.cc:151] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
2022-04-23 02:20:22.157664: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 2154 MB memory: -> device: 0, name: GeForce GTX 1650, pci bus id: 0000:01:00.0, compute capability: 7.5
INFO:tensorflow:Reading unweighted datasets: ['D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\val.record']
I0423 02:20:22.287044 11500 dataset_builder.py:162] Reading unweighted datasets: ['D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\val.record']
INFO:tensorflow:Reading record datasets for input file: ['D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\val.record']
I0423 02:20:22.287814 11500 dataset_builder.py:79] Reading record datasets for input file: ['D:\\Vadim\\NIR\\nir\\face_detection\\wider_face\\val.record']
INFO:tensorflow:Number of filenames to read: 1
I0423 02:20:22.288058 11500 dataset_builder.py:80] Number of filenames to read: 1
WARNING:tensorflow:num_readers has been reduced to 1 to match input file shards.
W0423 02:20:22.288058 11500 dataset_builder.py:86] num_readers has been reduced to 1 to match input file shards.
WARNING:tensorflow:From C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\builders\dataset_builder.py:100: parallel_interleave (from tensorflow.python.data.experimental.ops.interleave_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.data.Dataset.interleave(map_func, cycle_length, block_length, num_parallel_calls=tf.data.AUTOTUNE)` instead. If sloppy execution is desired, use `tf.data.Options.deterministic`.
W0423 02:20:22.291060 11500 deprecation.py:337] From C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\builders\dataset_builder.py:100: parallel_interleave (from tensorflow.python.data.experimental.ops.interleave_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.data.Dataset.interleave(map_func, cycle_length, block_length, num_parallel_calls=tf.data.AUTOTUNE)` instead. If sloppy execution is desired, use `tf.data.Options.deterministic`.
WARNING:tensorflow:From C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\builders\dataset_builder.py:235: DatasetV1.map_with_legacy_function (from tensorflow.python.data.ops.dataset_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.data.Dataset.map()
W0423 02:20:22.315042 11500 deprecation.py:337] From C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\builders\dataset_builder.py:235: DatasetV1.map_with_legacy_function (from tensorflow.python.data.ops.dataset_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.data.Dataset.map()
WARNING:tensorflow:From C:\Users\User\tfod\lib\site-packages\tensorflow\python\util\dispatch.py:1082: sparse_to_dense (from tensorflow.python.ops.sparse_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Create a `tf.sparse.SparseTensor` and use `tf.sparse.to_dense` instead.
W0423 02:20:26.543687 11500 deprecation.py:337] From C:\Users\User\tfod\lib\site-packages\tensorflow\python\util\dispatch.py:1082: sparse_to_dense (from tensorflow.python.ops.sparse_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Create a `tf.sparse.SparseTensor` and use `tf.sparse.to_dense` instead.
WARNING:tensorflow:From C:\Users\User\tfod\lib\site-packages\tensorflow\python\util\dispatch.py:1082: to_float (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.cast` instead.
W0423 02:20:27.762465 11500 deprecation.py:337] From C:\Users\User\tfod\lib\site-packages\tensorflow\python\util\dispatch.py:1082: to_float (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.cast` instead.
INFO:tensorflow:Waiting for new checkpoint at D:\Vadim\NIR\nir\face_detection\resnet50\checkpoint
I0423 02:20:30.473922 11500 checkpoint_utils.py:136] Waiting for new checkpoint at D:\Vadim\NIR\nir\face_detection\resnet50\checkpoint
INFO:tensorflow:Found new checkpoint at D:\Vadim\NIR\nir\face_detection\resnet50\checkpoint\ckpt-0
I0423 02:20:30.474921 11500 checkpoint_utils.py:145] Found new checkpoint at D:\Vadim\NIR\nir\face_detection\resnet50\checkpoint\ckpt-0
C:\Users\User\tfod\lib\site-packages\keras\backend.py:450: UserWarning: `tf.keras.backend.set_learning_phase` is deprecated and will be removed after 2020-10-11. To update it, simply pass a True/False value to the `training` argument of the `__call__` method of your layer or model.
  warnings.warn('`tf.keras.backend.set_learning_phase` is deprecated and '
2022-04-23 02:20:55.100622: I tensorflow/stream_executor/cuda/cuda_dnn.cc:368] Loaded cuDNN version 8200
2022-04-23 02:20:56.652293: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:56.659796: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:56.696712: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:56.705067: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:56.853532: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:56.860303: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.06GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:57.022897: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.09GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:57.028967: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.09GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:57.180462: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.15GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:57.186676: W tensorflow/core/common_runtime/bfc_allocator.cc:275] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.15GiB with freed_by_count=0. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory were available.
2022-04-23 02:20:58.384191: W tensorflow/core/framework/op_kernel.cc:1733] INVALID_ARGUMENT: required broadcastable shapes
2022-04-23 02:20:58.387247: W tensorflow/core/framework/op_kernel.cc:1733] INVALID_ARGUMENT: required broadcastable shapes
2022-04-23 02:20:58.390285: W tensorflow/core/framework/op_kernel.cc:1733] INVALID_ARGUMENT: required broadcastable shapes
2022-04-23 02:20:58.393312: W tensorflow/core/framework/op_kernel.cc:1733] INVALID_ARGUMENT: required broadcastable shapes
INFO:tensorflow:Encountered Graph execution error:

Detected at node 'Loss/Loss_1/logistic_loss/GreaterEqual' defined at (most recent call last):
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 114, in <module>
    tf.compat.v1.app.run()
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 312, in run
    _run_main(main, args)
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 258, in _run_main
    sys.exit(main(argv))
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 81, in main
    model_lib_v2.eval_continuously(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 1159, in eval_continuously
    eager_eval_loop(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 939, in eager_eval_loop
    eval_features) = strategy.run(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 910, in compute_eval_dict
    losses_dict, prediction_dict = _compute_losses_and_predictions_dicts(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 129, in _compute_losses_and_predictions_dicts
    losses_dict = model.loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\meta_architectures\ssd_meta_arch.py", line 881, in loss
    cls_losses = self._classification_loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 94, in __call__
    return self._compute_loss(prediction_tensor, target_tensor, **params)
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 412, in _compute_loss
    per_entry_cross_ent = (tf.nn.sigmoid_cross_entropy_with_logits(
Node: 'Loss/Loss_1/logistic_loss/GreaterEqual'
Detected at node 'Loss/Loss_1/logistic_loss/GreaterEqual' defined at (most recent call last):
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 114, in <module>
    tf.compat.v1.app.run()
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 312, in run
    _run_main(main, args)
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 258, in _run_main
    sys.exit(main(argv))
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 81, in main
    model_lib_v2.eval_continuously(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 1159, in eval_continuously
    eager_eval_loop(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 939, in eager_eval_loop
    eval_features) = strategy.run(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 910, in compute_eval_dict
    losses_dict, prediction_dict = _compute_losses_and_predictions_dicts(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 129, in _compute_losses_and_predictions_dicts
    losses_dict = model.loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\meta_architectures\ssd_meta_arch.py", line 881, in loss
    cls_losses = self._classification_loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 94, in __call__
    return self._compute_loss(prediction_tensor, target_tensor, **params)
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 412, in _compute_loss
    per_entry_cross_ent = (tf.nn.sigmoid_cross_entropy_with_logits(
Node: 'Loss/Loss_1/logistic_loss/GreaterEqual'
2 root error(s) found.
  (0) INVALID_ARGUMENT: required broadcastable shapes
     [[{{node Loss/Loss_1/logistic_loss/GreaterEqual}}]]
     [[Identity_22/_110]]
  (1) INVALID_ARGUMENT: required broadcastable shapes
     [[{{node Loss/Loss_1/logistic_loss/GreaterEqual}}]]
0 successful operations.
0 derived errors ignored. [Op:__inference_compute_eval_dict_15362] exception.
I0423 02:21:00.953818 11500 model_lib_v2.py:942] Encountered Graph execution error:

Detected at node 'Loss/Loss_1/logistic_loss/GreaterEqual' defined at (most recent call last):
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 114, in <module>
    tf.compat.v1.app.run()
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 312, in run
    _run_main(main, args)
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 258, in _run_main
    sys.exit(main(argv))
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 81, in main
    model_lib_v2.eval_continuously(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 1159, in eval_continuously
    eager_eval_loop(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 939, in eager_eval_loop
    eval_features) = strategy.run(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 910, in compute_eval_dict
    losses_dict, prediction_dict = _compute_losses_and_predictions_dicts(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 129, in _compute_losses_and_predictions_dicts
    losses_dict = model.loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\meta_architectures\ssd_meta_arch.py", line 881, in loss
    cls_losses = self._classification_loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 94, in __call__
    return self._compute_loss(prediction_tensor, target_tensor, **params)
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 412, in _compute_loss
    per_entry_cross_ent = (tf.nn.sigmoid_cross_entropy_with_logits(
Node: 'Loss/Loss_1/logistic_loss/GreaterEqual'
Detected at node 'Loss/Loss_1/logistic_loss/GreaterEqual' defined at (most recent call last):
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 114, in <module>
    tf.compat.v1.app.run()
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 312, in run
    _run_main(main, args)
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 258, in _run_main
    sys.exit(main(argv))
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 81, in main
    model_lib_v2.eval_continuously(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 1159, in eval_continuously
    eager_eval_loop(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 939, in eager_eval_loop
    eval_features) = strategy.run(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 910, in compute_eval_dict
    losses_dict, prediction_dict = _compute_losses_and_predictions_dicts(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 129, in _compute_losses_and_predictions_dicts
    losses_dict = model.loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\meta_architectures\ssd_meta_arch.py", line 881, in loss
    cls_losses = self._classification_loss(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 94, in __call__
    return self._compute_loss(prediction_tensor, target_tensor, **params)
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\core\losses.py", line 412, in _compute_loss
    per_entry_cross_ent = (tf.nn.sigmoid_cross_entropy_with_logits(
Node: 'Loss/Loss_1/logistic_loss/GreaterEqual'
2 root error(s) found.
  (0) INVALID_ARGUMENT: required broadcastable shapes
     [[{{node Loss/Loss_1/logistic_loss/GreaterEqual}}]]
     [[Identity_22/_110]]
  (1) INVALID_ARGUMENT: required broadcastable shapes
     [[{{node Loss/Loss_1/logistic_loss/GreaterEqual}}]]
0 successful operations.
0 derived errors ignored. [Op:__inference_compute_eval_dict_15362] exception.
INFO:tensorflow:A replica probably exhausted all examples. Skipping pending examples on other replicas.
I0423 02:21:00.956816 11500 model_lib_v2.py:943] A replica probably exhausted all examples. Skipping pending examples on other replicas.
Traceback (most recent call last):
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 114, in <module>
    tf.compat.v1.app.run()
  File "C:\Users\User\tfod\lib\site-packages\tensorflow\python\platform\app.py", line 36, in run
    _run(main=main, argv=argv, flags_parser=_parse_flags_tolerate_undef)
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 312, in run
    _run_main(main, args)
  File "C:\Users\User\tfod\lib\site-packages\absl\app.py", line 258, in _run_main
    sys.exit(main(argv))
  File "D:\Vadim\NIR\nir\face_detection\models\research\object_detection\model_main_tf2.py", line 81, in main
    model_lib_v2.eval_continuously(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 1159, in eval_continuously
    eager_eval_loop(
  File "C:\Users\User\tfod\lib\site-packages\object_detection-0.1-py3.10.egg\object_detection\model_lib_v2.py", line 1009, in eager_eval_loop
    for evaluator in evaluators:
TypeError: 'NoneType' object is not iterable
```
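The key error in this log is `INVALID_ARGUMENT: required broadcastable shapes`. Element-wise ops such as `GreaterEqual` follow NumPy-style broadcasting: the two shapes do not have to be identical, but when they are compared from the last dimension backwards, each pair of sizes must be equal or one of them must be 1. The following pure-Python sketch illustrates that rule; it is not TensorFlow's implementation, and `broadcast_shape` is a hypothetical helper.

```python
from itertools import zip_longest


def broadcast_shape(a, b):
    """Return the broadcast result of shapes `a` and `b` (NumPy-style rules),
    or raise ValueError if they are incompatible."""
    result = []
    # Walk both shapes right-to-left; a missing leading dimension counts as 1.
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x == y or y == 1:
            result.append(x)
        elif x == 1:
            result.append(y)
        else:
            raise ValueError(
                f"required broadcastable shapes: {tuple(a)} vs {tuple(b)}")
    return tuple(reversed(result))


# Illustrative shapes in the [batch_size, num_anchors, num_classes] layout.
print(broadcast_shape((16, 100, 1), (16, 100, 1)))  # (16, 100, 1)
print(broadcast_shape((16, 100, 3), (16, 100, 1)))  # (16, 100, 3): size 1 stretches
```

Calling `broadcast_shape((16, 100, 3), (16, 100, 2))` raises `ValueError`, because neither 3 nor 2 is 1.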

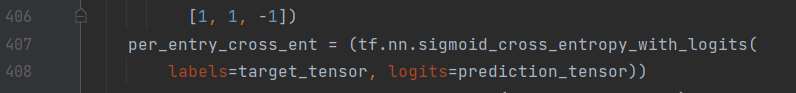

I think the error originates here (`_compute_loss` in `losses.py` from the `object_detection` package):

```python
def _compute_loss(self,
                  prediction_tensor,
                  target_tensor,
                  weights,
                  class_indices=None):
  """Compute loss function.

  Args:
    prediction_tensor: A float tensor of shape [batch_size, num_anchors,
      num_classes] representing the predicted logits for each class
    target_tensor: A float tensor of shape [batch_size, num_anchors,
      num_classes] representing one-hot encoded classification targets
    weights: a float tensor of shape, either [batch_size, num_anchors,
      num_classes] or [batch_size, num_anchors, 1]. If the shape is
      [batch_size, num_anchors, 1], all the classses are equally weighted.
    class_indices: (Optional) A 1-D integer tensor of class indices.
      If provided, computes loss only for the specified class indices.

  Returns:
    loss: a float tensor of shape [batch_size, num_anchors, num_classes]
      representing the value of the loss function.
  """
  if class_indices is not None:
    weights *= tf.reshape(
        ops.indices_to_dense_vector(class_indices,
                                    tf.shape(prediction_tensor)[2]),
        [1, 1, -1])
  per_entry_cross_ent = (tf.nn.sigmoid_cross_entropy_with_logits(
      labels=target_tensor, logits=prediction_tensor))
  prediction_probabilities = tf.sigmoid(prediction_tensor)
  p_t = ((target_tensor * prediction_probabilities) +
         ((1 - target_tensor) * (1 - prediction_probabilities)))
  modulating_factor = 1.0
  if self._gamma:
    modulating_factor = tf.pow(1.0 - p_t, self._gamma)
  alpha_weight_factor = 1.0
  if self._alpha is not None:
    alpha_weight_factor = (target_tensor * self._alpha +
                           (1 - target_tensor) * (1 - self._alpha))
  focal_cross_entropy_loss = (modulating_factor * alpha_weight_factor *
                              per_entry_cross_ent)
  return focal_cross_entropy_loss * weights
```
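To see what this loss computes for a single (anchor, class) entry, here is a plain-Python sketch of the same sigmoid focal loss with the config's `gamma: 2.0` and `alpha: 0.25`, leaving out `weights` and `class_indices`. It uses the numerically stable cross-entropy formula documented for `tf.nn.sigmoid_cross_entropy_with_logits`, `max(x, 0) - x * z + log(1 + exp(-|x|))`. `sigmoid_focal_loss` is a hypothetical helper for illustration, not the library code. In the TensorFlow version every step is element-wise over `[batch_size, num_anchors, num_classes]` tensors, which is why `target_tensor` and `prediction_tensor` must have compatible shapes.

```python
import math


def sigmoid_focal_loss(logit: float, target: float,
                       gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Sigmoid focal loss for one (anchor, class) entry, without weights."""
    # Stable sigmoid cross-entropy: max(x, 0) - x * z + log(1 + exp(-|x|)).
    cross_ent = max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))
    # Stable sigmoid that avoids overflow in exp() for large negative logits.
    if logit >= 0:
        prob = 1.0 / (1.0 + math.exp(-logit))
    else:
        exp_logit = math.exp(logit)
        prob = exp_logit / (1.0 + exp_logit)
    # p_t is the probability the model assigns to the true label.
    p_t = target * prob + (1 - target) * (1 - prob)
    # Down-weights easy entries (p_t close to 1).
    modulating_factor = (1.0 - p_t) ** gamma
    # Balances positive (target = 1) and negative (target = 0) entries.
    alpha_weight_factor = target * alpha + (1 - target) * (1 - alpha)
    return modulating_factor * alpha_weight_factor * cross_ent


# A confidently correct face prediction vs. a confidently wrong one.
easy = sigmoid_focal_loss(logit=4.0, target=1.0)
hard = sigmoid_focal_loss(logit=-4.0, target=1.0)
print(f"easy={easy:.6f} hard={hard:.6f}")
```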

More precisely, the exception is raised at this line:

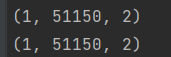

The TensorFlow documentation says this error can occur when the shapes of the tensors are not the same. However, when I add this to `losses.py`:

Surprisingly, they are equal:

Please help me solve this problem; I have not found any information about this kind of error.