Hi,

I want to fine-tune MobileNet v2 and export it to the JSON + binary web format. Unfortunately, there is a problem with exporting. I’ll show it on the most basic example, plain MobileNet v2 without fine-tuning:

- Download and extract SSD MobileNet v2.

- Using tensorflowjs_converter I can export saved_model/ to web format. It works as expected:

tensorflowjs_converter --control_flow_v2=True --input_format=tf_saved_model --metadata= --saved_model_tags=serve --signature_name=serving_default --strip_debug_ops=True --weight_shard_size_bytes=4194304 saved_model/ web_model/

- The model can then be imported in the browser. Just load tfjs:

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@latest"> </script>

Load the model and test it on an example picture:

const model = await tf.loadGraphModel("/web_model/model.json");

await model.executeAsync(tf.expandDims(tf.browser.fromPixels(document.getElementById('cat')), 0))

This returns some data, so this path works fine.

The problem occurs when I try to export the web model from a checkpoint (before or after fine-tuning). Let's take the checkpoint before fine-tuning. First, export the checkpoint (from the MobileNet v2 directory) to an inference graph:

python models/research/object_detection/exporter_main_v2.py --trained_checkpoint_dir="checkpoint" --output_directory="saved_model" --pipeline_config_path="pipeline.config"

Then use the same converter command as before:

tensorflowjs_converter --control_flow_v2=True --input_format=tf_saved_model --metadata= --saved_model_tags=serve --signature_name=serving_default --strip_debug_ops=True --weight_shard_size_bytes=4194304 saved_model/ web_model/

After importing the model in the browser (just like step 3):

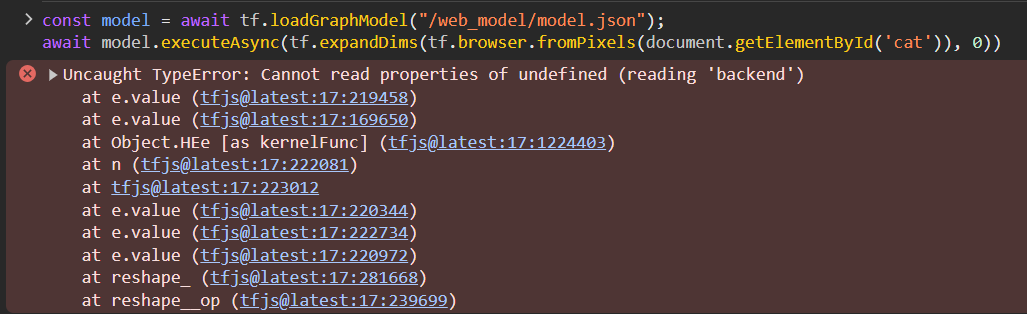

There is an error:

Uncaught TypeError: Cannot read properties of undefined (reading 'backend')

I noticed that in model.json there are some additional structures in modelTopology->library:

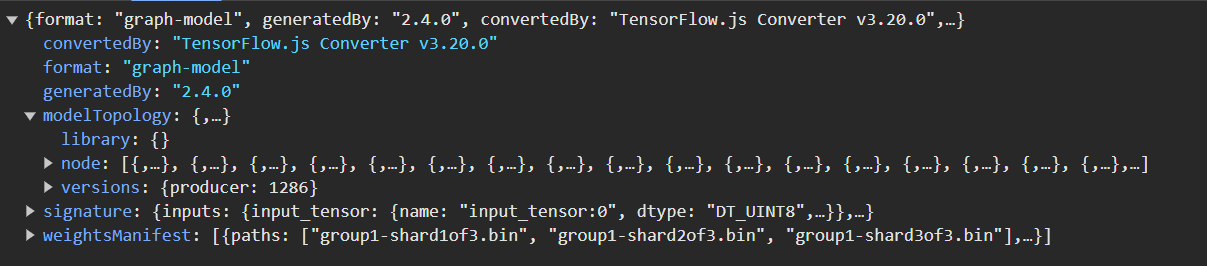

Working model:

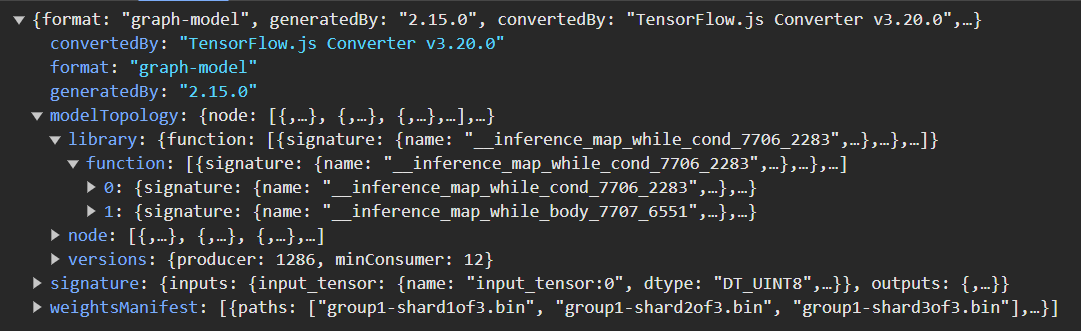

Not working model:

modelTopology is different. Can someone explain why this is happening? It looks like the inference graph exporter produces a different configuration. I tried some models from the internet, and all the working ones have an empty modelTopology->library object.
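To make the difference between the two exports easier to report, the contents of modelTopology->library can be listed from each model.json. The sketch below is only a diagnostic aid: `listLibraryFunctions` is a hypothetical helper, not part of TensorFlow.js, and it assumes the library follows the GraphDef layout, where functions sit in a `function` array and each has a `signature.name` and a `nodeDef` list. The two sample objects are stand-ins, not data from the real models.

```javascript
// Hypothetical helper (not a TensorFlow.js API): lists the functions stored
// under modelTopology.library in a parsed model.json object.
function listLibraryFunctions(modelJson) {
  const topology = (modelJson && modelJson.modelTopology) || {};
  const library = topology.library || {};
  // Assumption: GraphDef-style layout, i.e.
  // { function: [{ signature: { name: "..." }, nodeDef: [...] }, ...] }.
  const functions = Array.isArray(library.function) ? library.function : [];
  return functions.map((fn) => ({
    name: (fn.signature && fn.signature.name) || "(unnamed)",
    nodeCount: Array.isArray(fn.nodeDef) ? fn.nodeDef.length : 0,
  }));
}

// Stand-in objects for illustration only; real input would come from
// JSON.parse on the contents of web_model/model.json.
const emptyLibraryModel = { modelTopology: { node: [], library: {} } };
const populatedLibraryModel = {
  modelTopology: {
    node: [],
    library: {
      function: [{ signature: { name: "example_fn" }, nodeDef: [{}, {}] }],
    },
  },
};

console.log(listLibraryFunctions(emptyLibraryModel));
console.log(listLibraryFunctions(populatedLibraryModel));
```

Running this against both model.json files should show whether the export from the checkpoint is the only one with a non-empty function list, which would narrow the question down to what those functions are.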

For fine-tuning, the code from this example Colab can be used. A checkpoint generated with that code produces the same undefined backend error. That convinces me that the problem lies in exporting the inference graph. I’ve recreated an environment from 2020 with all dependencies and repositories from that time, with no success.

Tested with many versions of TensorFlow and TensorFlow.js; currently using tensorflow==2.4.1, tensorflowjs==3.20.