Hi!

I have trained my model using the MobileNetV3 architecture:

```python
import tensorflow as tf
from tensorflow.keras.applications import MobileNetV3Small
from tensorflow.keras.layers import Dense, Dropout, GlobalAveragePooling2D

# IMG_SIZE and NUM_CLASSES are assumed to be defined elsewhere in the script


def get_training_model(trainable=False):
    # Load the MobileNetV3Small model but exclude the classification layers
    EXTRACTOR = MobileNetV3Small(weights="imagenet", include_top=False,
                                 input_shape=(IMG_SIZE, IMG_SIZE, 3))
    # We will set it to both True and False
    EXTRACTOR.trainable = trainable

    # Construct the head of the model that will be placed on top of
    # the base model
    class_head = EXTRACTOR.output
    class_head = GlobalAveragePooling2D()(class_head)
    class_head = Dense(1024, activation="relu")(class_head)
    class_head = Dense(512, activation="relu")(class_head)
    class_head = Dropout(0.5)(class_head)
    class_head = Dense(NUM_CLASSES, activation="softmax", dtype="float32")(class_head)

    # Create the new model
    classifier = tf.keras.Model(inputs=EXTRACTOR.input, outputs=class_head)

    # Compile and return the model
    classifier.compile(loss="sparse_categorical_crossentropy",
                       optimizer="adam",
                       metrics=["accuracy"])
    return classifier
```

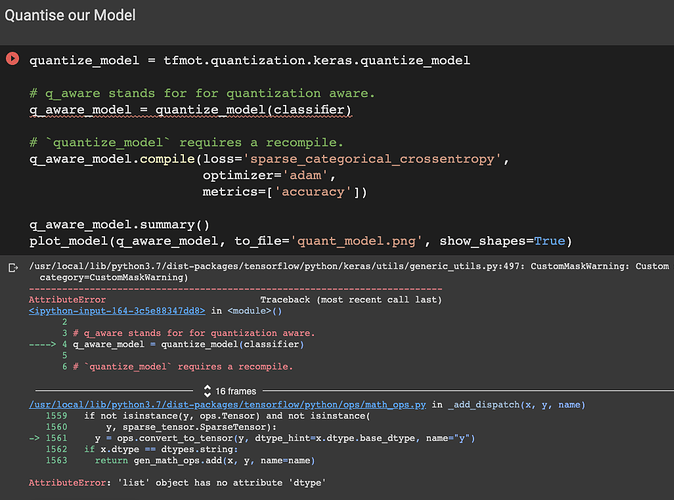

But when I try to apply quantisation-aware training (QAT) to this model, I get an error.