Guys, how can I create a high load on the GPU with a simple test? I tried this, but it doesn’t work: the load is minimal.

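    // Assumes `tf` is the TensorFlow.js global (script tag) or was imported
    // with: import * as tf from '@tensorflow/tfjs';

    // A single dense layer: 100 inputs -> 100 units.
    const model = tf.sequential();
    model.add(tf.layers.dense({ inputShape: [100], units: 100, activation: 'elu' }));
    model.compile({ optimizer: 'sgd', loss: 'meanSquaredError' });

    // Build 10 rows of 100 random values each for the inputs and the targets.
    let arrX = [];
    let arrY = [];
    let tempX = [];
    let tempY = [];
    let count = 1;

    for (let i = 0; i < 1000; i++) {
      tempX.push(Math.random());
      tempY.push(Math.random());
      if (count == 100) {
        // A row is complete: store it and start the next one.
        arrX.push(tempX);
        arrY.push(tempY);
        tempX = [];
        tempY = [];
        count = 1;
      } else {
        count++;
      }
    }

    // Test tensors, each of shape [10, 100].
    const xs = tf.tensor2d(arrX);
    const ys = tf.tensor2d(arrY);

    let counte = 0;

    const config = {
      shuffle: false,
      verbose: 0,
      epochs: 100000,
      callbacks: {
        // Log the loss roughly once every 1000 epochs.
        onEpochEnd: async (epoch, logs) => {
          if (counte >= 1000) {
            console.log(logs);
            counte = 0;
          }
          counte++;
        },
        onTrainEnd: () => {
          console.log('DONE');
        }
      }
    };

    // Top-level await: run this inside an ES module or an async function.
    await model.fit(xs, ys, config);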

Can anyone explain why it doesn’t work?

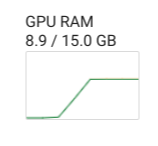

The GPU load is only 1-2%, hmm…
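
For scale, here is a rough back-of-the-envelope estimate of how much arithmetic one epoch of the test above involves. It is only a sketch: the cost model (about 2 × inputs × units FLOPs per sample for the forward pass, and roughly twice that for the backward pass) is an assumption, `estimateDenseFlopsPerEpoch` is a hypothetical helper and not part of TensorFlow.js, and the 4096 sizes are an arbitrary larger configuration picked only for comparison.

```javascript
// Rough estimate of the arithmetic in one epoch for a single dense layer.
// Assumption: forward pass ~ 2 * inputs * units FLOPs per sample,
// backward pass ~ twice the forward pass. Bias and activation are ignored.
function estimateDenseFlopsPerEpoch(samples, inputs, units) {
  const forward = 2 * samples * inputs * units;
  const backward = 2 * forward;
  return forward + backward;
}

// The test from this post: 10 samples, 100 inputs, 100 units.
const small = estimateDenseFlopsPerEpoch(10, 100, 100);

// A hypothetical, much larger configuration for comparison.
const large = estimateDenseFlopsPerEpoch(4096, 4096, 4096);

console.log(`small test: ${small} FLOPs per epoch`);
console.log(`large test: ${large} FLOPs per epoch`);
console.log(`ratio: ${(large / small).toFixed(0)}x`);
```

Under these assumptions, the 10-sample, 100×100 test comes to a few hundred thousand floating-point operations per epoch, which is very little work for a GPU, so fixed per-epoch overhead may dominate. That would be consistent with the low load shown in the screenshot, though it has not been measured here.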