Hello there team,

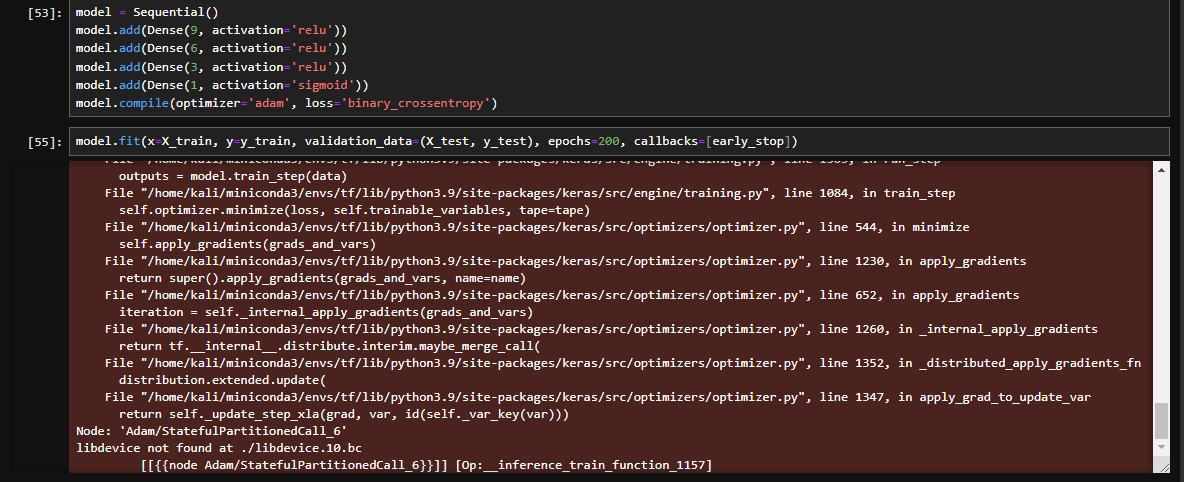

I followed the pip section of your website to install TensorFlow under WSL2 so that I can use my GPU with TensorFlow. I then installed Jupyter Notebook in the Linux shell, and when I run my notebook I receive the errors below (the traceback is raised from `model.fit`). How do I go about fixing them? This doesn't happen when I run the same project with my original Anaconda install on my Windows machine. A screenshot of the error is attached above. This is really frustrating and I've tried countless methods of fixing it by now. I just want to be able to use my GPU (RTX 4070) to train my models.

2023-08-18 17:07:49.282556: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.283413: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.298226: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.299075: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.324383: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.325779: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.345177: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.345985: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.359090: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.359765: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.372404: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.373120: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.387811: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.388559: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

2023-08-18 17:07:49.423364: W tensorflow/compiler/xla/service/gpu/llvm_gpu_backend/gpu_backend_lib.cc:273] libdevice is required by this HLO module but was not found at ./libdevice.10.bc

2023-08-18 17:07:49.424437: W tensorflow/core/framework/op_kernel.cc:1828] OP_REQUIRES failed at xla_ops.cc:503 : INTERNAL: libdevice not found at ./libdevice.10.bc

---------------------------------------------------------------------------

InternalError Traceback (most recent call last)

Cell In[55], line 1

----> 1 model.fit(x=X_train, y=y_train, validation_data=(X_test, y_test), epochs=200, callbacks=[early_stop])

File ~/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/utils/traceback_utils.py:70, in filter_traceback.<locals>.error_handler(*args, **kwargs)

67 filtered_tb = _process_traceback_frames(e.__traceback__)

68 # To get the full stack trace, call:

69 # `tf.debugging.disable_traceback_filtering()`

---> 70 raise e.with_traceback(filtered_tb) from None

71 finally:

72 del filtered_tb

File ~/miniconda3/envs/tf/lib/python3.9/site-packages/tensorflow/python/eager/execute.py:53, in quick_execute(op_name, num_outputs, inputs, attrs, ctx, name)

51 try:

52 ctx.ensure_initialized()

---> 53 tensors = pywrap_tfe.TFE_Py_Execute(ctx._handle, device_name, op_name,

54 inputs, attrs, num_outputs)

55 except core._NotOkStatusException as e:

56 if name is not None:

InternalError: Graph execution error:

Detected at node 'Adam/StatefulPartitionedCall_6' defined at (most recent call last):

File "/home/kali/miniconda3/envs/tf/lib/python3.9/runpy.py", line 197, in _run_module_as_main

return _run_code(code, main_globals, None,

File "/home/kali/miniconda3/envs/tf/lib/python3.9/runpy.py", line 87, in _run_code

exec(code, run_globals)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel_launcher.py", line 17, in <module>

app.launch_new_instance()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/traitlets/config/application.py", line 1043, in launch_instance

app.start()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/kernelapp.py", line 736, in start

self.io_loop.start()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/tornado/platform/asyncio.py", line 195, in start

self.asyncio_loop.run_forever()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/asyncio/base_events.py", line 601, in run_forever

self._run_once()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/asyncio/base_events.py", line 1905, in _run_once

handle._run()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/asyncio/events.py", line 80, in _run

self._context.run(self._callback, *self._args)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/kernelbase.py", line 516, in dispatch_queue

await self.process_one()

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/kernelbase.py", line 505, in process_one

await dispatch(*args)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/kernelbase.py", line 412, in dispatch_shell

await result

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/kernelbase.py", line 740, in execute_request

reply_content = await reply_content

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/ipkernel.py", line 422, in do_execute

res = shell.run_cell(

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/ipykernel/zmqshell.py", line 546, in run_cell

return super().run_cell(*args, **kwargs)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/IPython/core/interactiveshell.py", line 3009, in run_cell

result = self._run_cell(

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/IPython/core/interactiveshell.py", line 3064, in _run_cell

result = runner(coro)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/IPython/core/async_helpers.py", line 129, in _pseudo_sync_runner

coro.send(None)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/IPython/core/interactiveshell.py", line 3269, in run_cell_async

has_raised = await self.run_ast_nodes(code_ast.body, cell_name,

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/IPython/core/interactiveshell.py", line 3448, in run_ast_nodes

if await self.run_code(code, result, async_=asy):

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/IPython/core/interactiveshell.py", line 3508, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "/tmp/ipykernel_9145/4147451155.py", line 1, in <module>

model.fit(x=X_train, y=y_train, validation_data=(X_test, y_test), epochs=200, callbacks=[early_stop])

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/utils/traceback_utils.py", line 65, in error_handler

return fn(*args, **kwargs)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/engine/training.py", line 1742, in fit

tmp_logs = self.train_function(iterator)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/engine/training.py", line 1338, in train_function

return step_function(self, iterator)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/engine/training.py", line 1322, in step_function

outputs = model.distribute_strategy.run(run_step, args=(data,))

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/engine/training.py", line 1303, in run_step

outputs = model.train_step(data)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/engine/training.py", line 1084, in train_step

self.optimizer.minimize(loss, self.trainable_variables, tape=tape)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/optimizers/optimizer.py", line 544, in minimize

self.apply_gradients(grads_and_vars)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/optimizers/optimizer.py", line 1230, in apply_gradients

return super().apply_gradients(grads_and_vars, name=name)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/optimizers/optimizer.py", line 652, in apply_gradients

iteration = self._internal_apply_gradients(grads_and_vars)

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/optimizers/optimizer.py", line 1260, in _internal_apply_gradients

return tf.__internal__.distribute.interim.maybe_merge_call(

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/optimizers/optimizer.py", line 1352, in _distributed_apply_gradients_fn

distribution.extended.update(

File "/home/kali/miniconda3/envs/tf/lib/python3.9/site-packages/keras/src/optimizers/optimizer.py", line 1347, in apply_grad_to_update_var

return self._update_step_xla(grad, var, id(self._var_key(var)))

Node: 'Adam/StatefulPartitionedCall_6'

libdevice not found at ./libdevice.10.bc

[[{{node Adam/StatefulPartitionedCall_6}}]] [Op:__inference_train_function_1157]
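For anyone trying to reproduce or narrow this down, here is a small diagnostic sketch, not a confirmed fix. It searches an environment for `libdevice.10.bc` (the file named in the log) and, if a copy sits in an `nvvm/libdevice/` directory, prints a candidate value for `XLA_FLAGS` using XLA's `--xla_gpu_cuda_data_dir` option. The assumptions here are mine: that the file may exist somewhere under `$CONDA_PREFIX` without XLA finding it, and that XLA expects the layout `<dir>/nvvm/libdevice/libdevice.10.bc`. The helper names `find_libdevice`, `cuda_data_dir_for`, and `suggest_xla_flags` are hypothetical.

```python
import os
import sys
from pathlib import Path

# File name taken from the error message in the log above.
LIBDEVICE_NAME = "libdevice.10.bc"


def find_libdevice(root):
    """Return every libdevice.10.bc found under root, sorted by path."""
    return sorted(Path(root).rglob(LIBDEVICE_NAME))


def cuda_data_dir_for(libdevice_path):
    """Return DIR if the file is at DIR/nvvm/libdevice/libdevice.10.bc, else None.

    Assumption: this is the layout XLA looks for under its CUDA data dir.
    """
    p = Path(libdevice_path)
    if p.parent.name == "libdevice" and p.parent.parent.name == "nvvm":
        return p.parent.parent.parent
    return None


def suggest_xla_flags(root):
    """Return a candidate XLA_FLAGS value, or None if no usable copy exists."""
    for hit in find_libdevice(root):
        data_dir = cuda_data_dir_for(hit)
        if data_dir is not None:
            return f"--xla_gpu_cuda_data_dir={data_dir}"
    return None


if __name__ == "__main__":
    # Search the active conda env if there is one, else the Python prefix.
    root = os.environ.get("CONDA_PREFIX", sys.prefix)
    hits = find_libdevice(root)
    print(f"Searched {root}: found {len(hits)} copy/copies")
    for hit in hits:
        print("  ", hit)
    flags = suggest_xla_flags(root)
    if flags is None:
        print("No copy in an nvvm/libdevice/ directory was found.")
    else:
        print("Candidate setting: XLA_FLAGS=" + flags)
```

If a candidate is printed, the variable would presumably need to be exported in the shell that launches Jupyter (or set via `os.environ` before `import tensorflow`), since it is read when XLA starts up. If the file is found but not under `nvvm/libdevice/`, copying it into such a directory inside the environment is one thing to try.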